Chat Completions

The Chat Completions API builds a conversation based on the provided list of messages and then generates a reply using the specified model.
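This sketch assumes the server, like the OpenAI Chat Completions API, keeps no state between requests. Under that assumption, a multi-turn conversation is built by resending the whole message list each time. `Conversation` is a hypothetical helper, not part of the openai SDK or the device software:

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical helper that accumulates the `messages` list sent to the API."""

    system_prompt: str
    messages: list = field(default_factory=list)

    def __post_init__(self) -> None:
        # The first entry sets the assistant's behavior ("developer" role, as in the examples).
        self.messages.append({"role": "developer", "content": self.system_prompt})

    def add_user(self, text: str) -> list:
        # Append the user's turn and return a copy of the full history to send as `messages`.
        self.messages.append({"role": "user", "content": text})
        return list(self.messages)

    def add_assistant(self, text: str) -> None:
        # Store the model's reply so the next request includes it as context.
        self.messages.append({"role": "assistant", "content": text})


conv = Conversation("You are a helpful home assistant.")
first_request = conv.add_user("Hello!")
conv.add_assistant("Hello! How can I assist you today?")
second_request = conv.add_user("Tell me a short joke.")
print(len(first_request), len(second_request))  # 2 4
```

Each list returned by `add_user` is what you would pass as `messages` in the next request.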

From a PC or the AI Pyramid desktop, you can send a list of messages to the device through its OpenAI-compatible API to construct a conversation. Before running the examples below, replace the IP address in the request URL (`base_url` in the Python example) with the actual IP address of your device. Also make sure that the model package named in the `model` field is installed on the device. For installation instructions, refer to the Model List section.
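The examples in this section hard-code the address 192.168.20.186 and port 8000. The following is a small sketch that keeps the device address in one place. `make_base_url` and the `AI_PYRAMID_IP` environment variable are hypothetical names chosen for illustration:

```python
import ipaddress
import os


def make_base_url(host: str, port: int = 8000) -> str:
    """Build the OpenAI-compatible base URL for a device on the local network.

    Hypothetical helper: port 8000 and the /v1 prefix come from the examples
    in this section; adjust them if your device differs.
    """
    ipaddress.ip_address(host)  # raises ValueError if host is not a valid IP address
    return f"http://{host}:{port}/v1"


# Read the device IP from an assumed environment variable, falling back to the example IP.
device_ip = os.environ.get("AI_PYRAMID_IP", "192.168.20.186")
base_url = make_base_url(device_ip)
print(base_url)
```

The resulting string can be passed as `base_url` to `OpenAI(...)`, or followed by `/chat/completions` for curl.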

For detailed model descriptions, refer to the Model Introduction section.

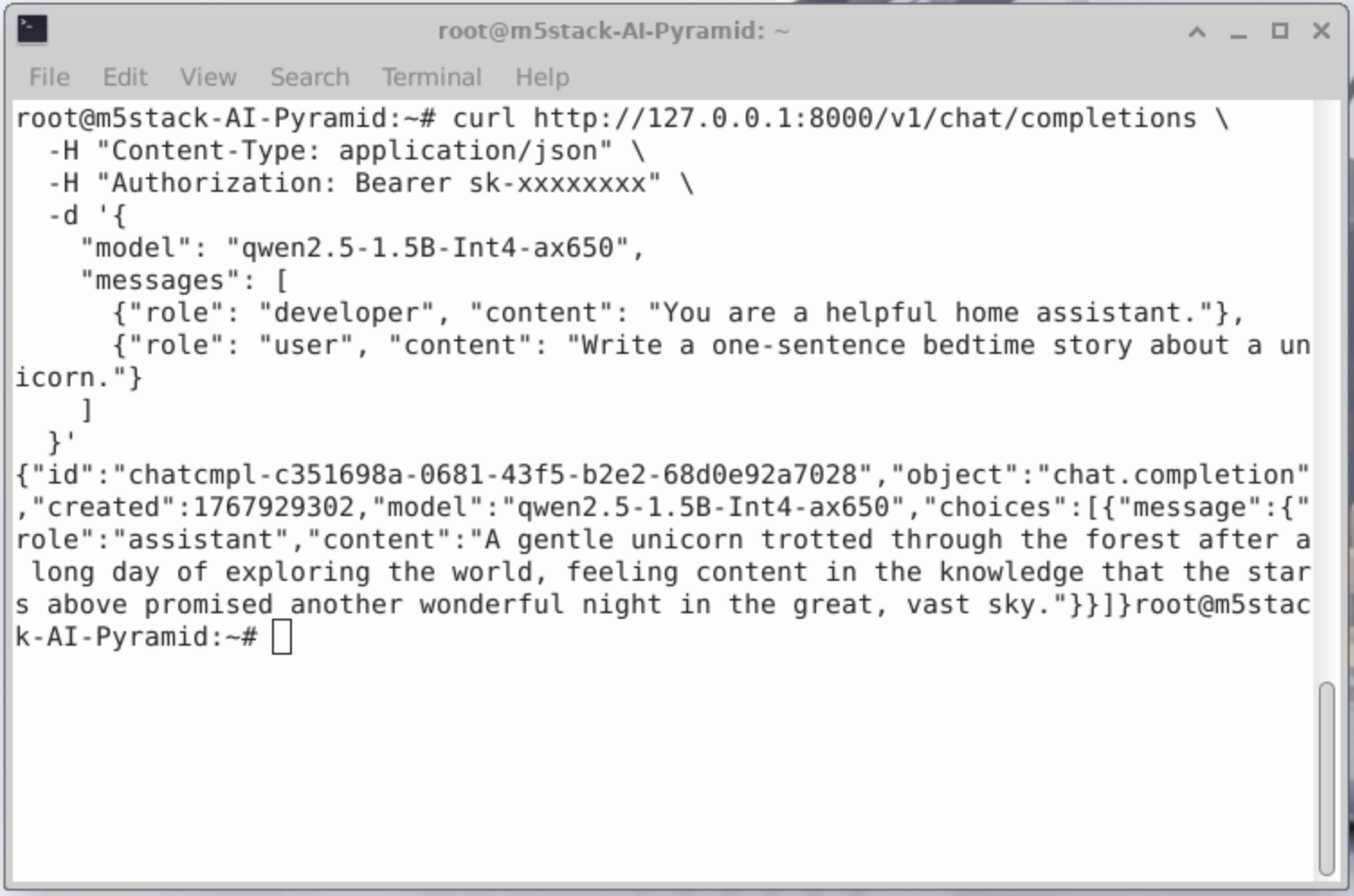

Curl Call

```shell
curl http://192.168.20.186:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer sk-xxxxxxxx" \
  -d '{
    "model": "qwen2.5-1.5B-Int4-ax650",
    "messages": [
      {"role": "developer", "content": "You are a helpful home assistant."},
      {"role": "user", "content": "Write a one-sentence bedtime story about a unicorn."}
    ]
  }'
```
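If curl or the openai package is not available, the same request can be assembled with Python's standard library. This sketch only builds the `urllib.request.Request` object. The commented-out `urlopen` call would send it to the device and assumes the reply follows the OpenAI layout (`choices[0].message.content`). The IP address and key are the placeholders from the curl example:

```python
import json
import urllib.request

BASE_URL = "http://192.168.20.186:8000/v1"  # replace with your device's IP address
API_KEY = "sk-xxxxxxxx"  # placeholder key, as in the curl example

payload = {
    "model": "qwen2.5-1.5B-Int4-ax650",
    "messages": [
        {"role": "developer", "content": "You are a helpful home assistant."},
        {"role": "user", "content": "Write a one-sentence bedtime story about a unicorn."},
    ],
}

# Equivalent of curl's -H and -d options: JSON body plus the two headers, sent as POST.
request = urllib.request.Request(
    f"{BASE_URL}/chat/completions",
    data=json.dumps(payload).encode("utf-8"),
    headers={
        "Content-Type": "application/json",
        "Authorization": f"Bearer {API_KEY}",
    },
    method="POST",
)

# Uncomment to send the request and print the reply text (response layout assumed):
# with urllib.request.urlopen(request, timeout=60) as response:
#     body = json.load(response)
#     print(body["choices"][0]["message"]["content"])
```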

Python Call

```python
from openai import OpenAI

# Replace the IP address in base_url with that of your device.
client = OpenAI(
    api_key="sk-",  # placeholder API key
    base_url="http://192.168.20.186:8000/v1",
)

completion = client.chat.completions.create(
    model="qwen2.5-0.5B-p256-ax630c",
    messages=[
        {"role": "developer", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello!"},
    ],
)

# Print the assistant message of the first (and only) choice.
print(completion.choices[0].message)
```

Request Parameters

| Parameter Name | Type | Required | Example Value | Description |
|---|---|---|---|---|
| messages | array | Yes | [{"role": "user", "content": "Hello"}] | The conversation so far, as a list of messages. Depending on the model, text, image, audio, and other modalities are supported. |
| model | string | Yes | qwen2.5-0.5B-p256-ax630c | ID of the model used to generate the reply. Several models are available; refer to the Model Introduction section when choosing one. |
| audio | - | No | - | Audio output is not currently supported. |
| function_call | - | No | - | Function calling is not currently supported. |
| max_tokens | integer | No | 1024 | Maximum number of tokens the model may generate. Generation stops when the limit is reached, so the reply may be truncated. |
| response_format | object | No | {"type": "json_object"} | Output format of the model. Currently, only "json_object" is supported. |
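The constraints in the table can be checked on the client before a request is sent. The following is a minimal sketch using a hypothetical `check_request` function. Its rules mirror the table rows. The `{"type": "json_object"}` shape for `response_format` follows the OpenAI convention and is an assumption for this server:

```python
UNSUPPORTED = ("audio", "function_call")  # listed in the table as not currently supported


def check_request(params: dict) -> list:
    """Return the problems found in a request body; an empty list means it passed."""
    problems = []
    # Required parameters.
    if not isinstance(params.get("model"), str):
        problems.append("model must be a string")
    messages = params.get("messages")
    if not isinstance(messages, list) or not messages:
        problems.append("messages must be a non-empty array")
    # Parameters the server does not support yet.
    for name in UNSUPPORTED:
        if name in params:
            problems.append(f"{name} is not currently supported")
    # max_tokens: optional positive integer (bool is rejected because it subclasses int).
    max_tokens = params.get("max_tokens")
    if max_tokens is not None and (
        isinstance(max_tokens, bool) or not isinstance(max_tokens, int) or max_tokens <= 0
    ):
        problems.append("max_tokens must be a positive integer")
    # response_format: only JSON mode is supported (OpenAI-style object assumed).
    fmt = params.get("response_format")
    if fmt is not None and fmt != {"type": "json_object"}:
        problems.append('response_format must be {"type": "json_object"}')
    return problems


good = {
    "model": "qwen2.5-0.5B-p256-ax630c",
    "messages": [{"role": "user", "content": "Hello"}],
    "max_tokens": 1024,
    "response_format": {"type": "json_object"},
}
bad = {"messages": [], "function_call": "auto", "max_tokens": 0}
print(check_request(good))  # []
print(check_request(bad))
```

A check like this catches unsupported parameters locally. The server remains the final authority on what it accepts.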

Response Example

```
ChatCompletionMessage(content='Hello! How can I assist you today?', refusal=None, role='assistant', annotations=None, audio=None, function_call=None, tool_calls=None)
```
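The printed object is the `message` of the first choice. With the openai SDK, the reply text alone is `completion.choices[0].message.content`. When calling the endpoint without the SDK, for example with curl, the reply arrives as raw JSON. The following sketch extracts the text and assumes the server uses the OpenAI response layout. The sample body is illustrative, built around the reply shown above, and was not captured from a device:

```python
import json

# Illustrative response body in the OpenAI chat-completions layout (assumed, not captured).
raw = """
{
  "object": "chat.completion",
  "model": "qwen2.5-0.5B-p256-ax630c",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Hello! How can I assist you today?"},
      "finish_reason": "stop"
    }
  ]
}
"""


def reply_text(body: str) -> str:
    """Return the assistant's reply text from a chat-completions JSON response."""
    data = json.loads(body)
    message = data["choices"][0]["message"]
    # content may be null in some responses; fall back to an empty string.
    return message.get("content") or ""


print(reply_text(raw))  # Hello! How can I assist you today?
```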