AI Pyramid - Home Assistant

Home Assistant is an open-source smart home platform that supports local device management and automation control, featuring privacy protection, high security, reliability, and extensive customization capabilities.

1. Preparation

2. Install Image

Refer to the Home Assistant Official Documentation or follow the steps below to deploy the Docker container.

- Pull the Home Assistant Docker image

- /PATH_TO_YOUR_CONFIG points to the folder where you want to store the configuration and run Home Assistant. Make sure to keep the :/config part.

- MY_TIME_ZONE is a tz database name, for example TZ=America/Los_Angeles.

    docker run -d \
      --name homeassistant \
      --privileged \
      --restart=unless-stopped \
      -e TZ=MY_TIME_ZONE \
      -v /PATH_TO_YOUR_CONFIG:/config \
      -v /run/dbus:/run/dbus:ro \
      --network=host \
      ghcr.io/home-assistant/home-assistant:stable

3. HAOS Initialization

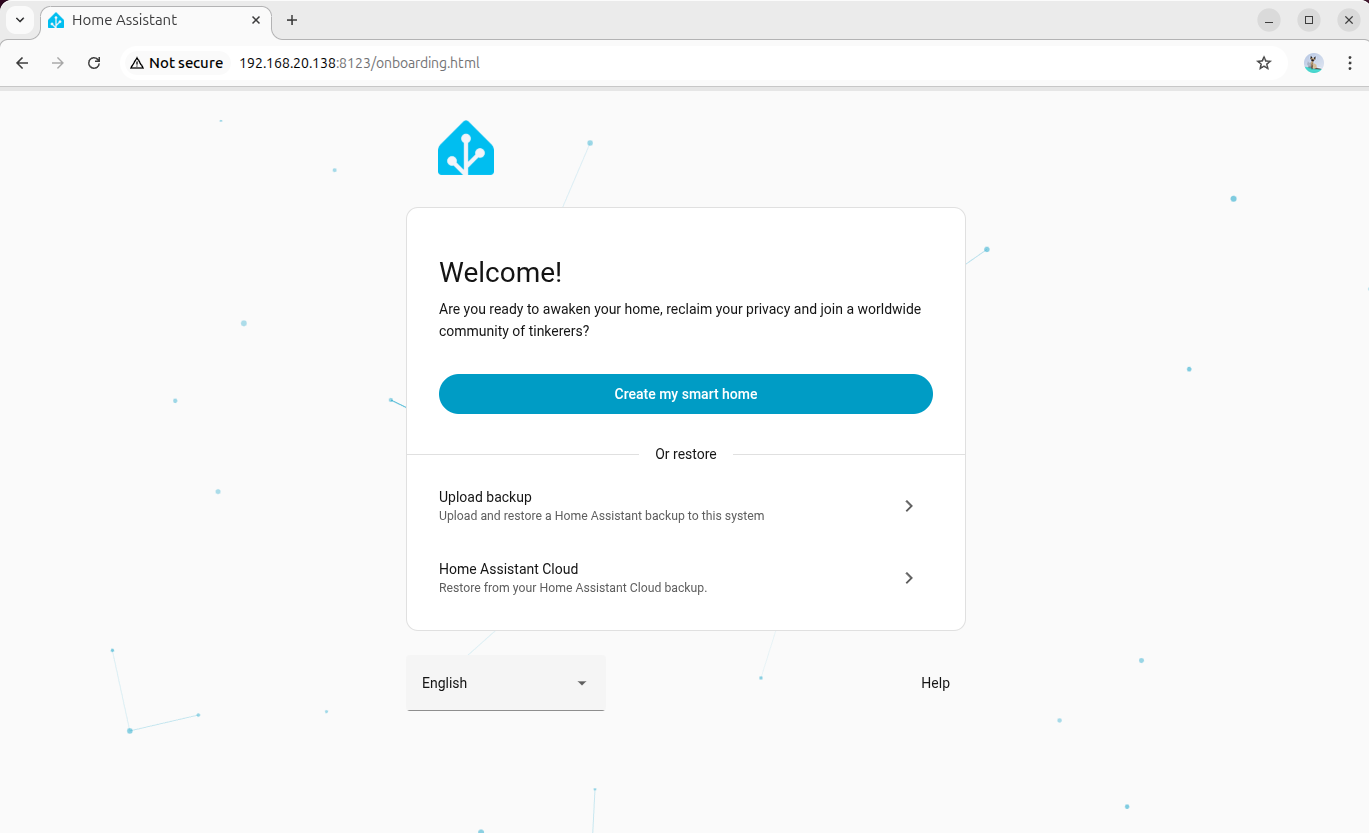

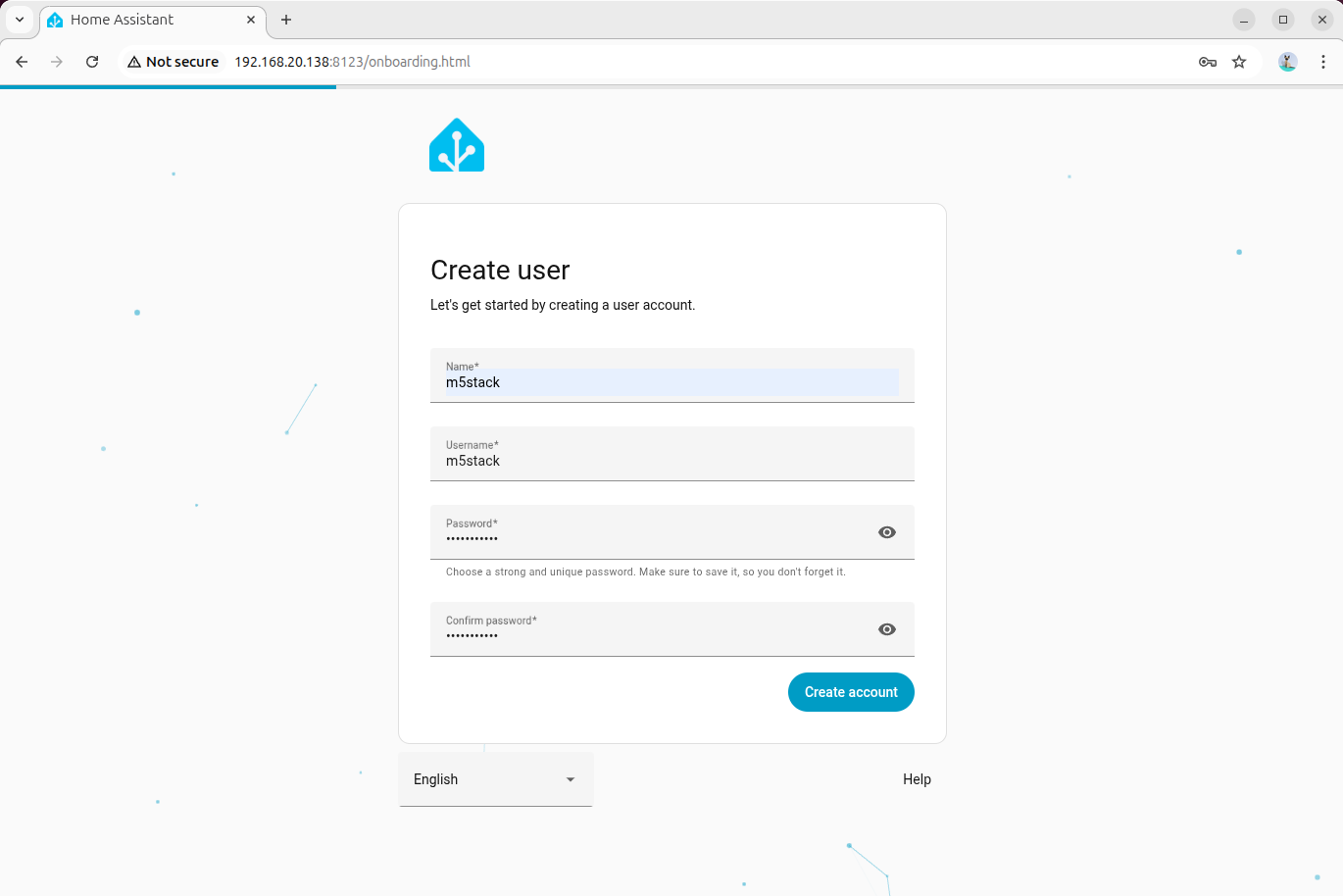

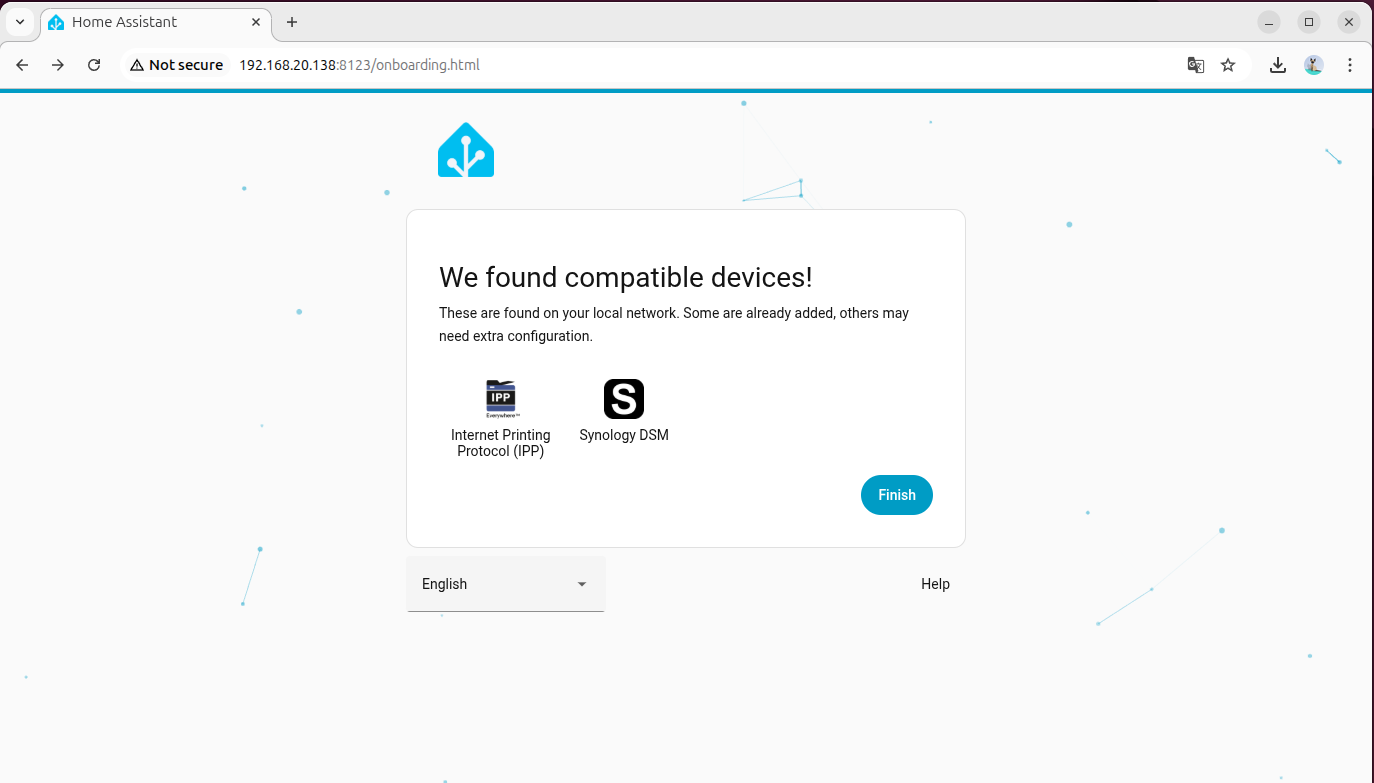

- Access the Home Assistant web interface via a browser: local access at http://homeassistant.local:8123/, remote access at http://DEVICE_IP:8123/

- Follow the on-screen instructions to create an administrator account and complete the system initialization configuration.
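If neither URL loads, a quick check of whether port 8123 accepts TCP connections can narrow the problem down. The following is a minimal sketch using only the Python standard library; the helper name is_port_open is hypothetical and not part of Home Assistant.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host name and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, is_port_open("homeassistant.local", 8123) should return True once the container is running; if the host name does not resolve, try the device IP address instead.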

4. Device Firmware Compilation

- Refer to the ESPHome Official Installation Guide to deploy the ESPHome development environment on the development host.

This document is written based on ESPHome version 2025.12.5. There are significant differences between versions, so please select the corresponding version according to the project YAML configuration file requirements.

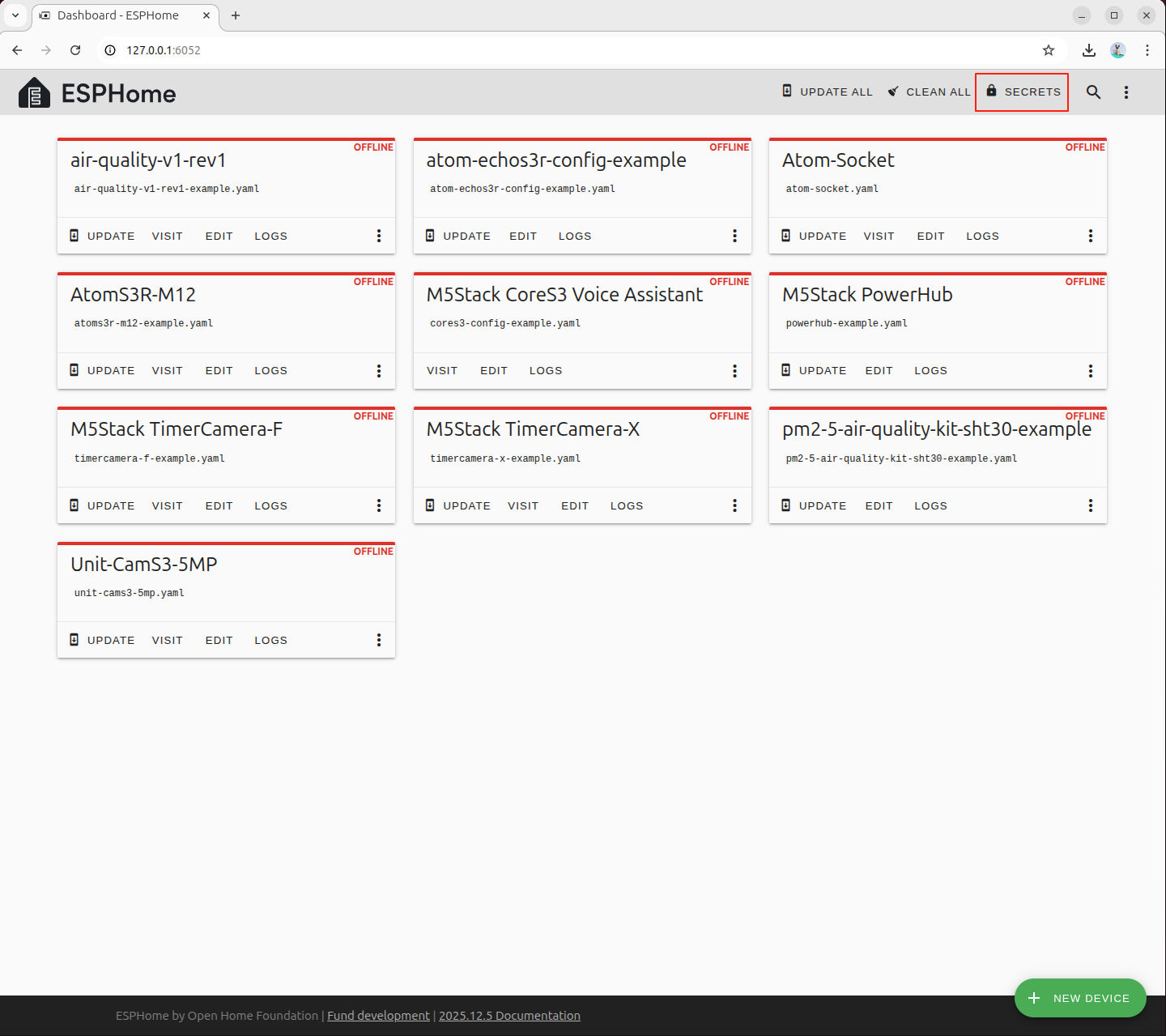

    pip install esphome==2025.12.5

- Clone the M5Stack ESPHome Configuration Repository

    git clone https://github.com/m5stack/esphome-yaml.git

- Start the ESPHome Dashboard service

    esphome dashboard esphome-yaml/

- Access 127.0.0.1:6052 via a browser

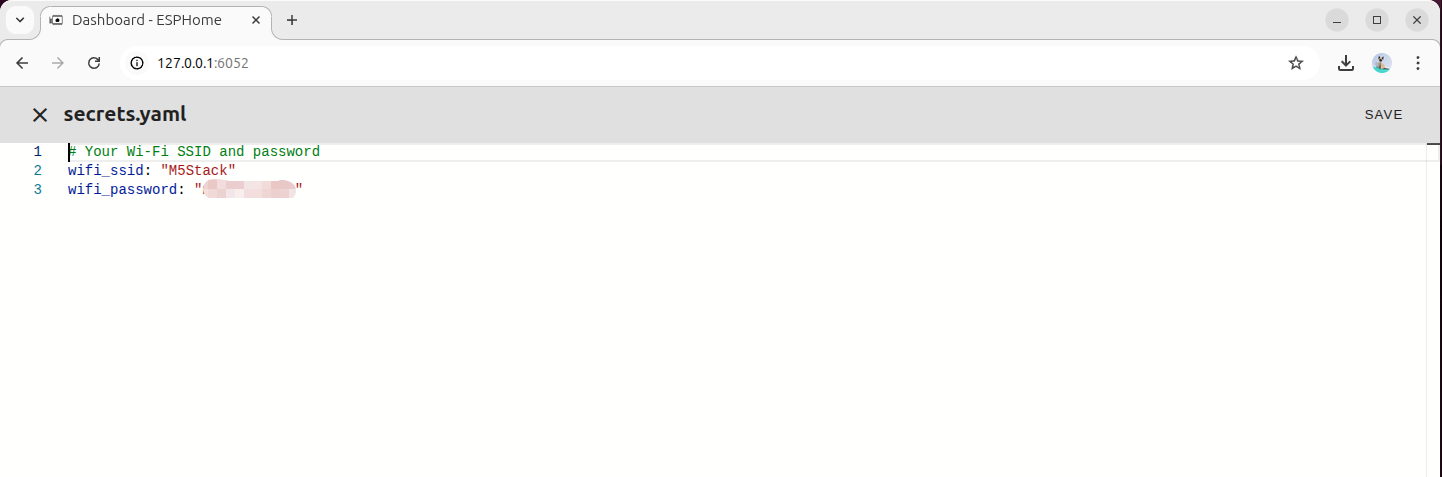

- Configure Wi-Fi connection parameters

# Your Wi-Fi SSID and password

wifi_ssid: "your_wifi_name"

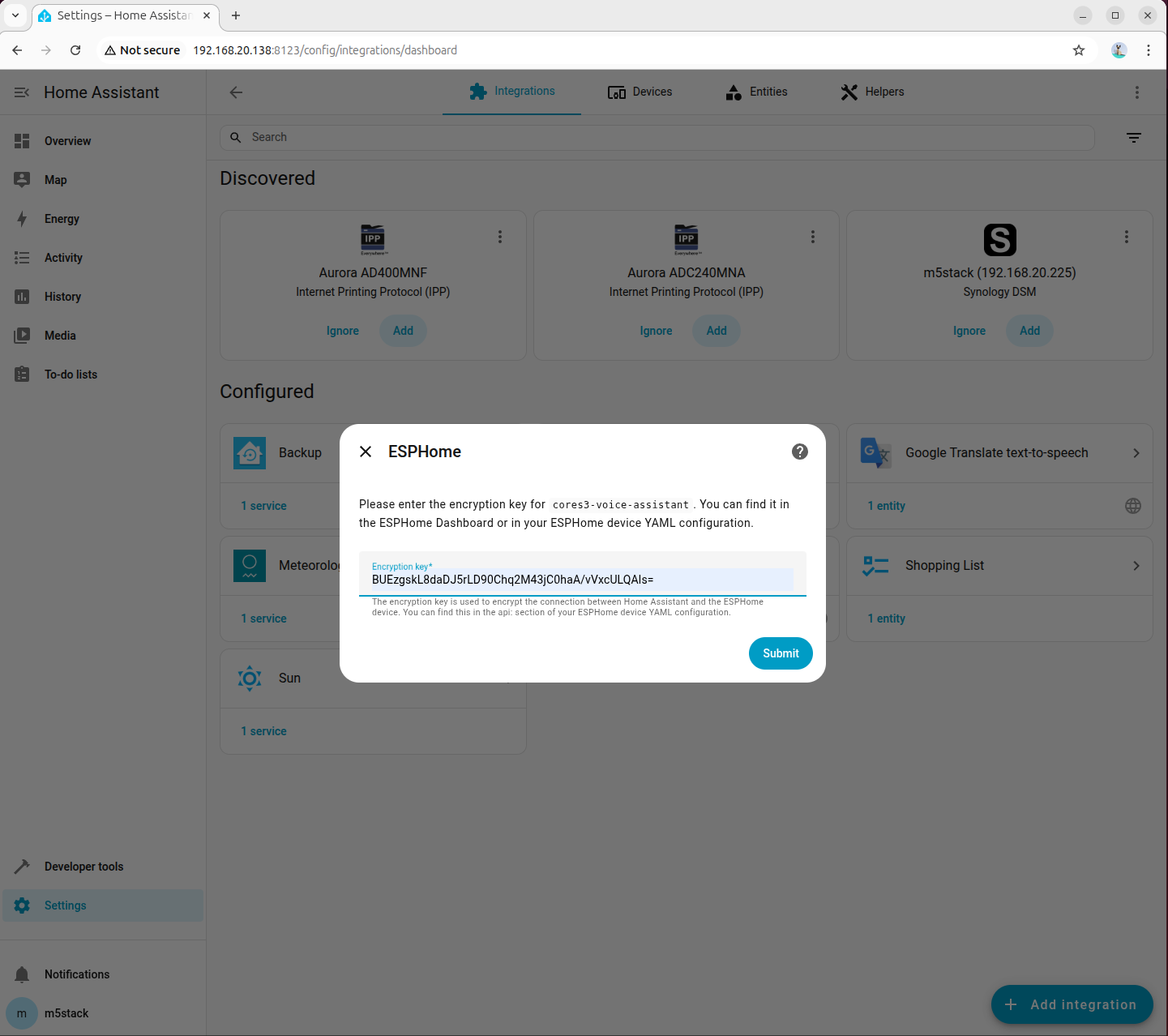

wifi_password: "your_wifi_password"- Generate an encryption key using OpenSSL

openssl rand -base64 32Example output of key generation:

(base) m5stack@MS-7E06:~$ openssl rand -base64 32

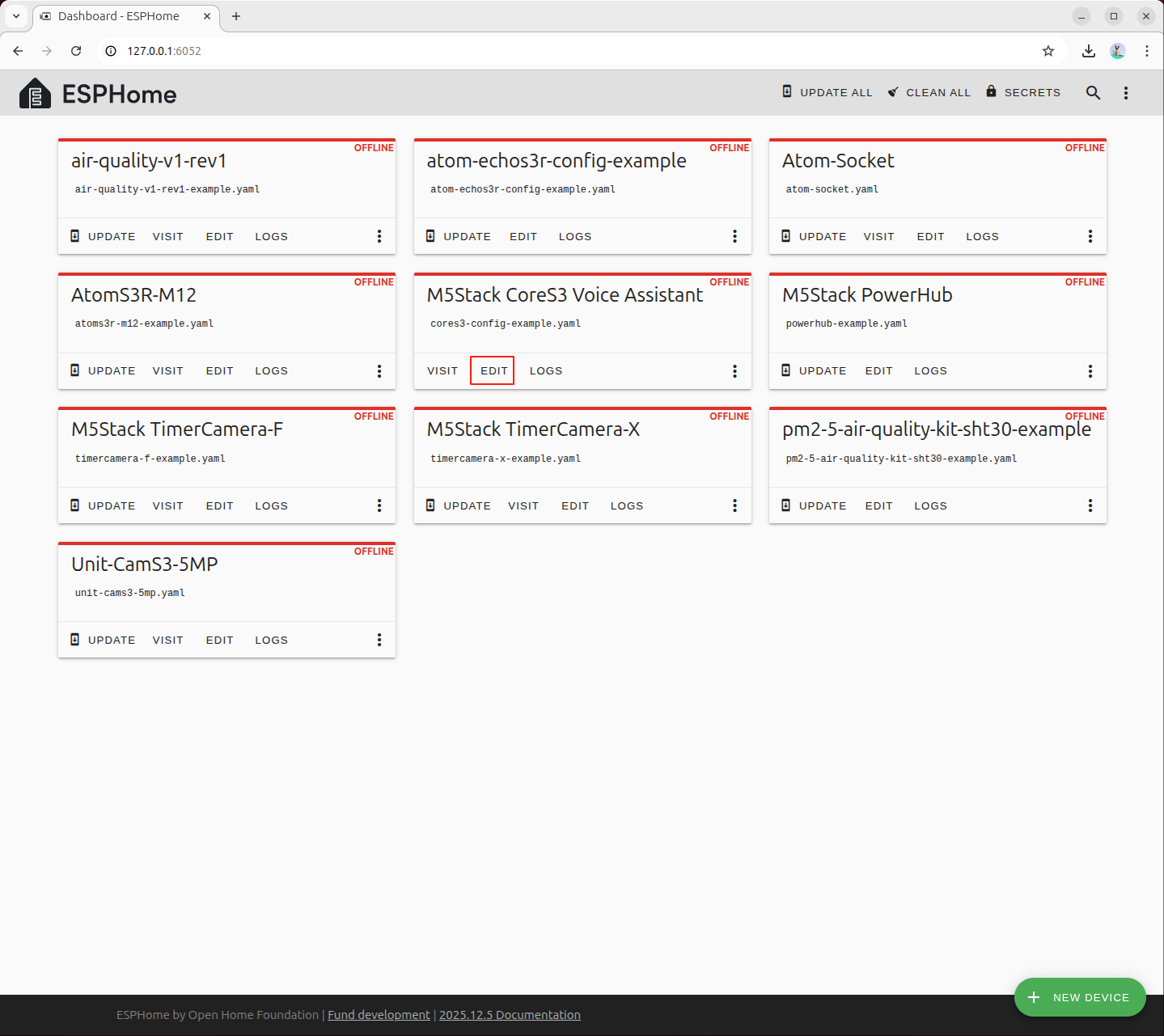

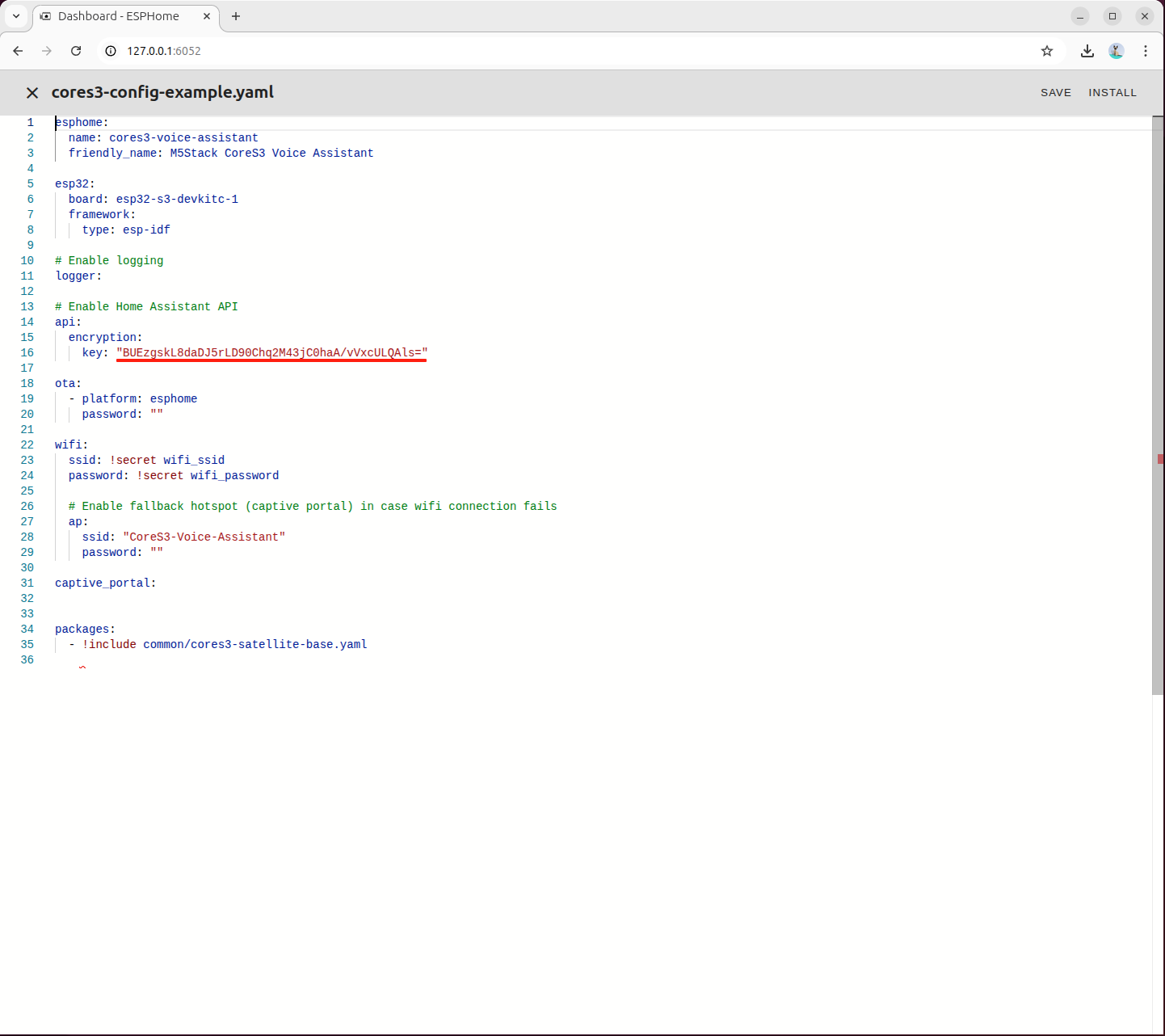

BUEzgskL8daDJ5rLD90Chq2M43jC0haA/vVxcULQAls=- Edit the

cores3-config-example.yamlconfiguration file and fill in the generated encryption key into the corresponding field
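The openssl command above emits 32 random bytes encoded as base64, which gives a 44-character string. On a host without OpenSSL, an equivalent key could be produced with the Python standard library. This is a minimal sketch; the function names generate_api_key and is_valid_key are hypothetical helpers, not part of ESPHome.

```python
import base64
import secrets


def generate_api_key(num_bytes: int = 32) -> str:
    """Return num_bytes of cryptographically secure random data, base64-encoded."""
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


def is_valid_key(key: str, num_bytes: int = 32) -> bool:
    """Check that key is well-formed base64 and decodes to exactly num_bytes bytes."""
    try:
        return len(base64.b64decode(key, validate=True)) == num_bytes
    except ValueError:
        # binascii.Error (malformed base64) is a subclass of ValueError.
        return False


if __name__ == "__main__":
    print(generate_api_key())
```

The is_valid_key helper can also catch a key that was truncated while being copied into the YAML file.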

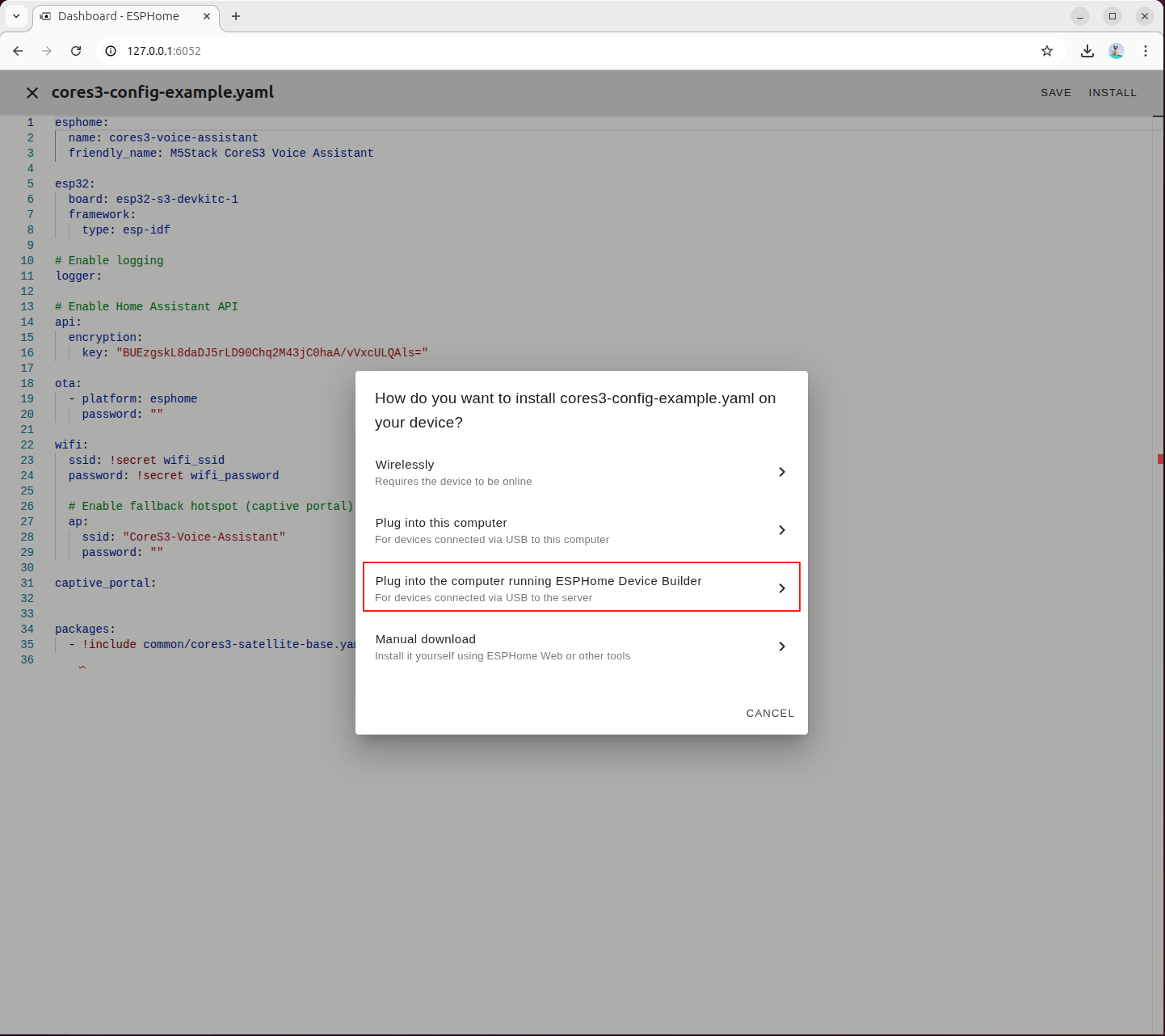

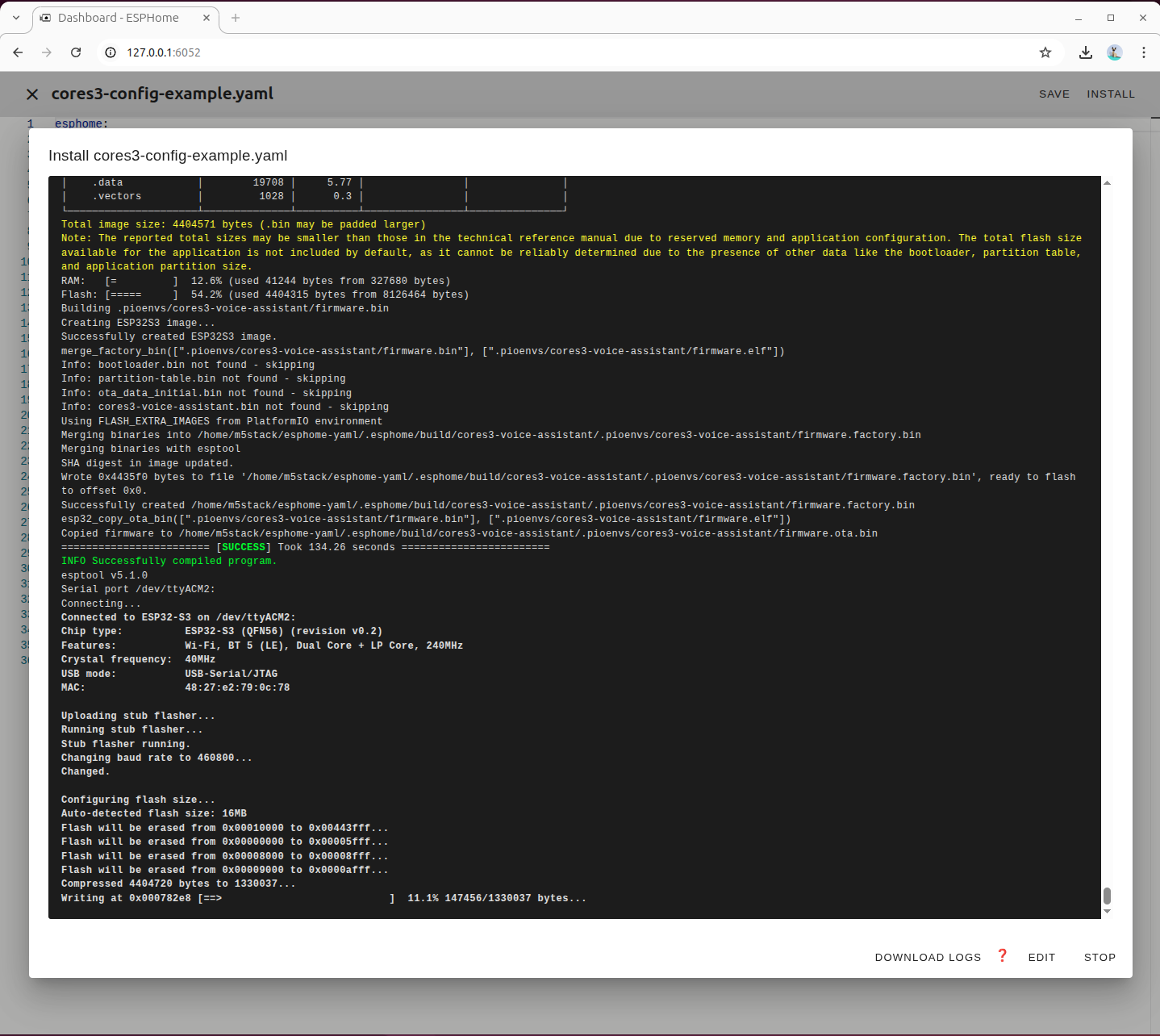

Click the INSTALL button in the upper-left corner to start compilation

Select the third option to view the compilation output in real time via the terminal

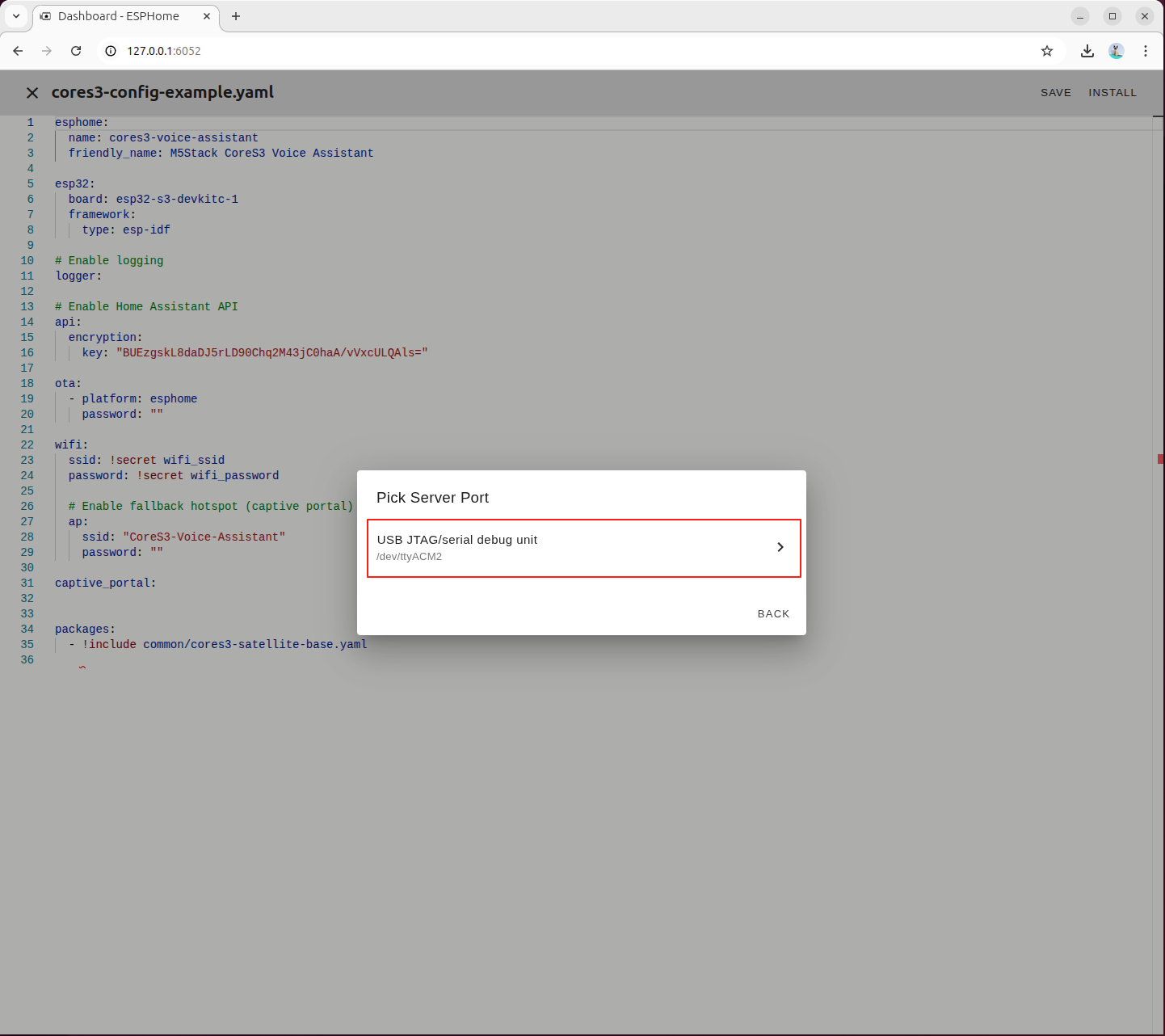

Select the serial port device corresponding to CoreS3

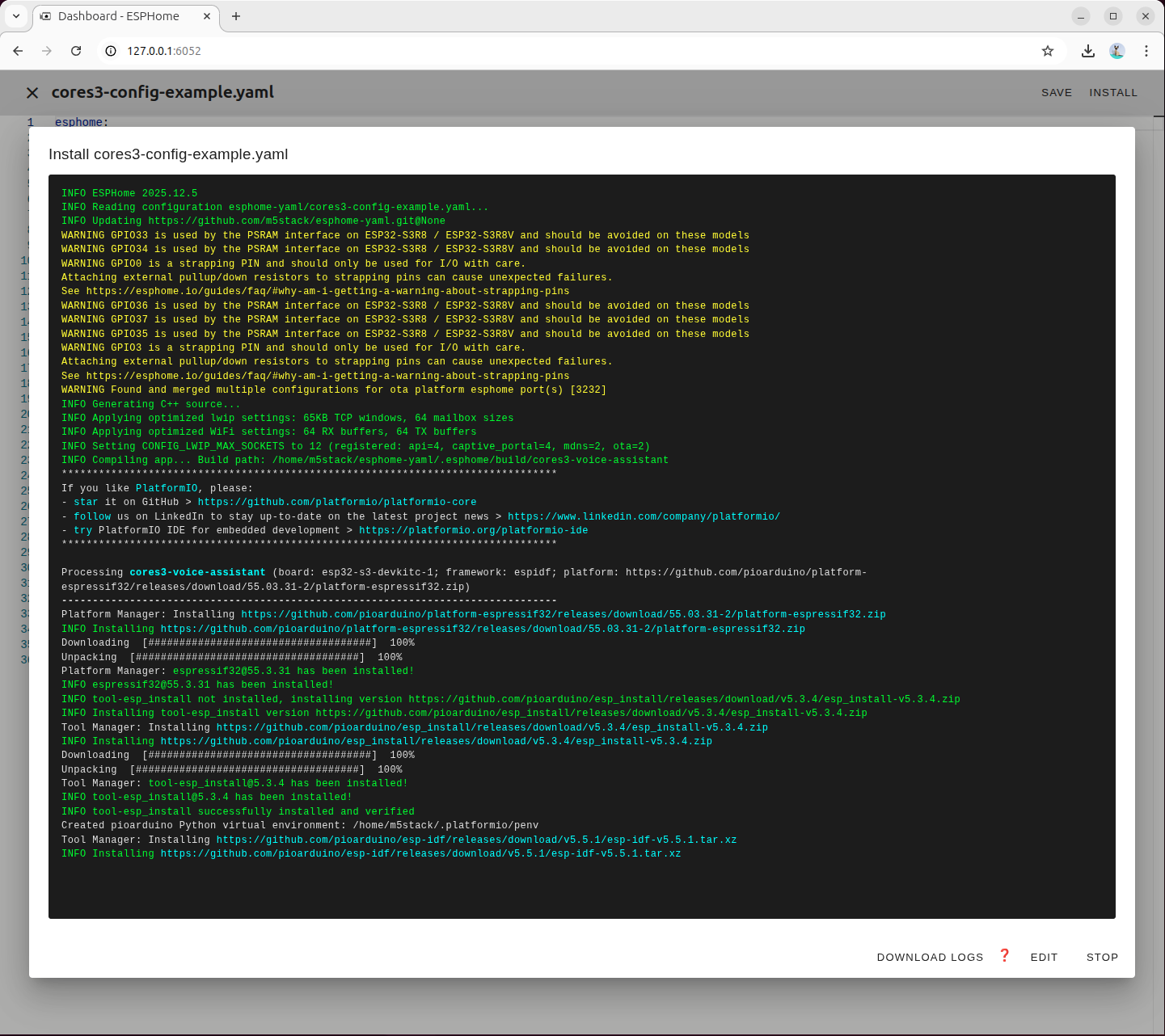

During the first compilation, the required dependencies will be downloaded automatically

Wait for the firmware compilation and flashing process to complete

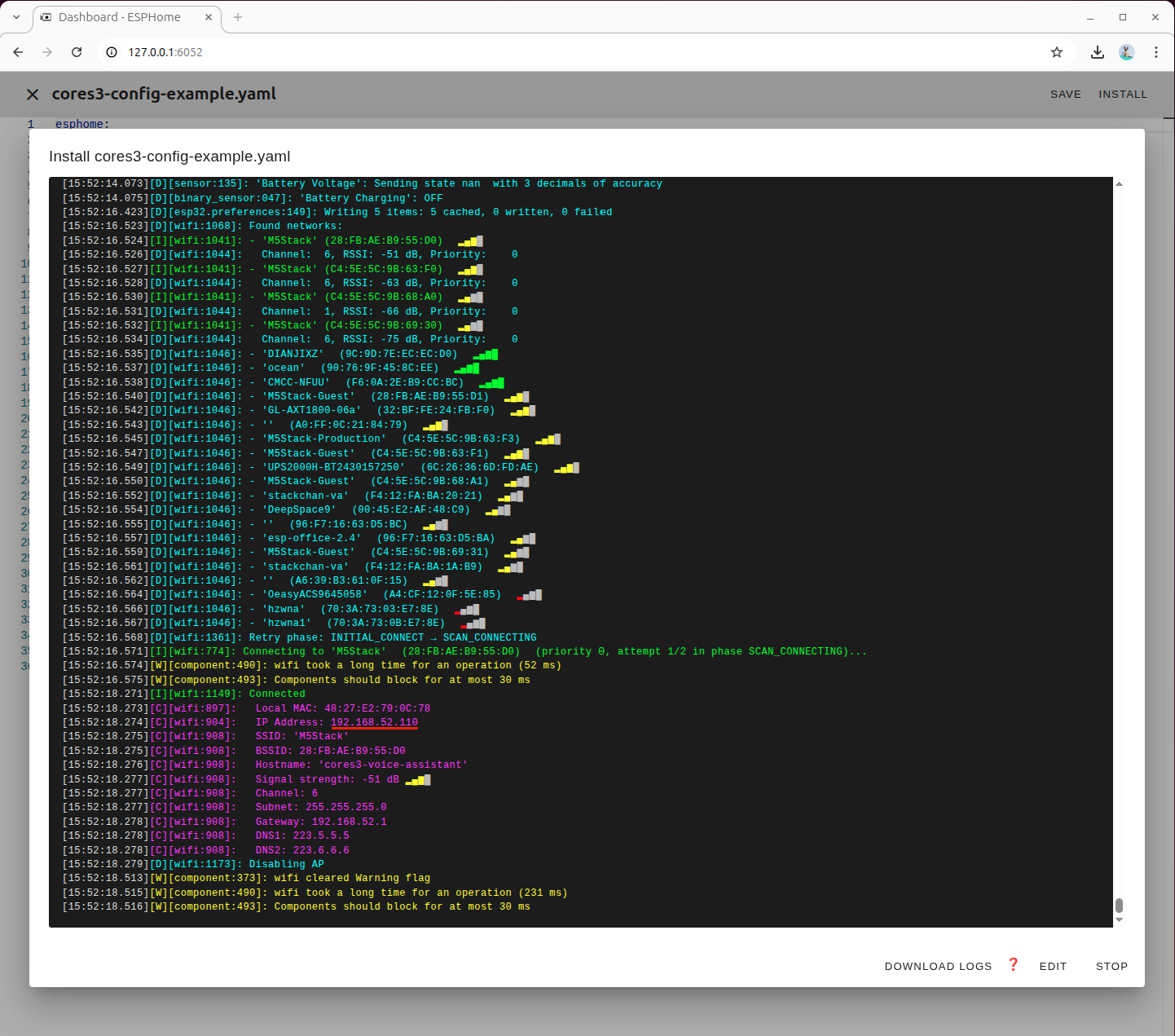

After the device restarts, record the IP address it obtains, which will be required later when integrating the device into Home Assistant

5. Add Device

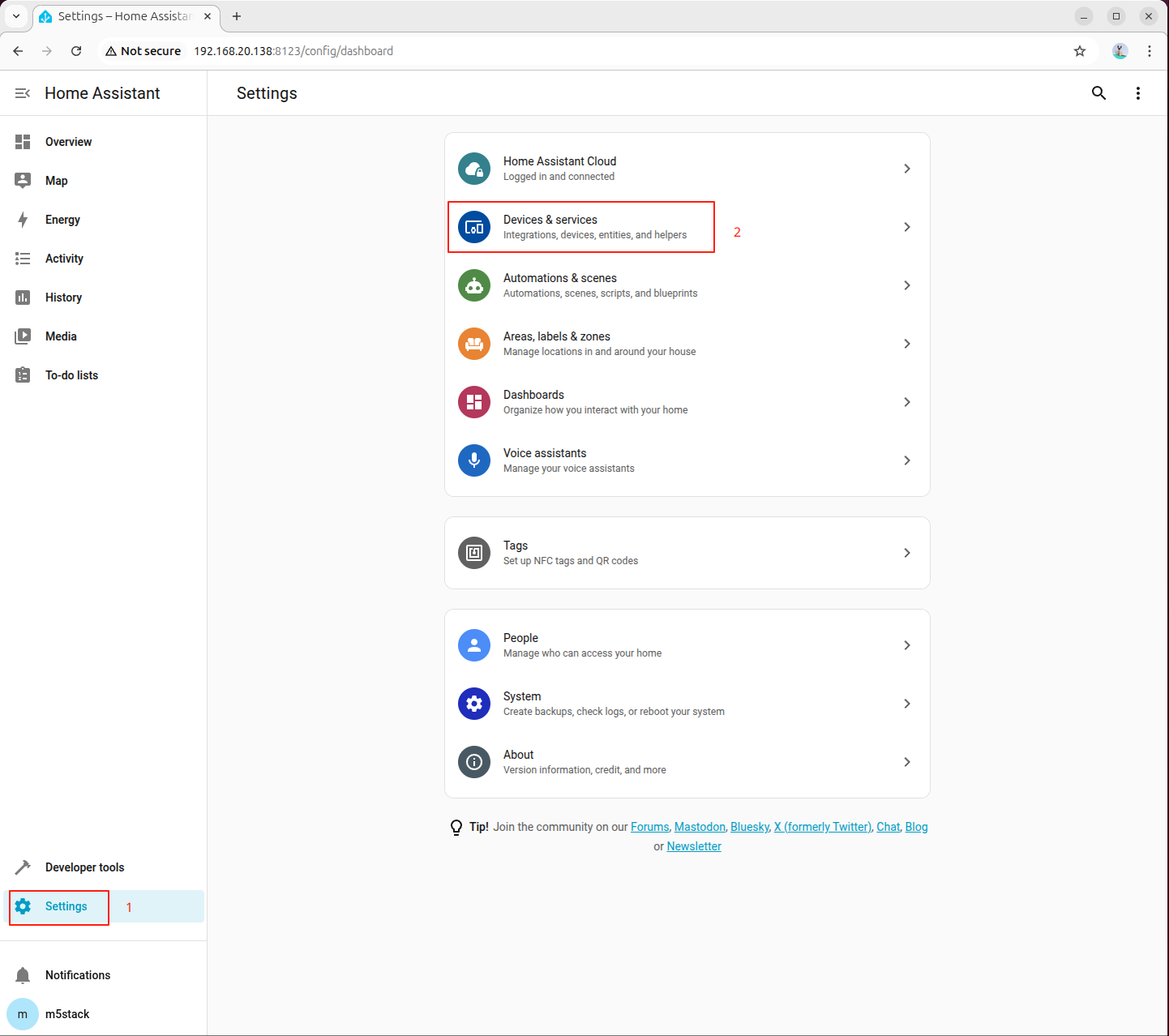

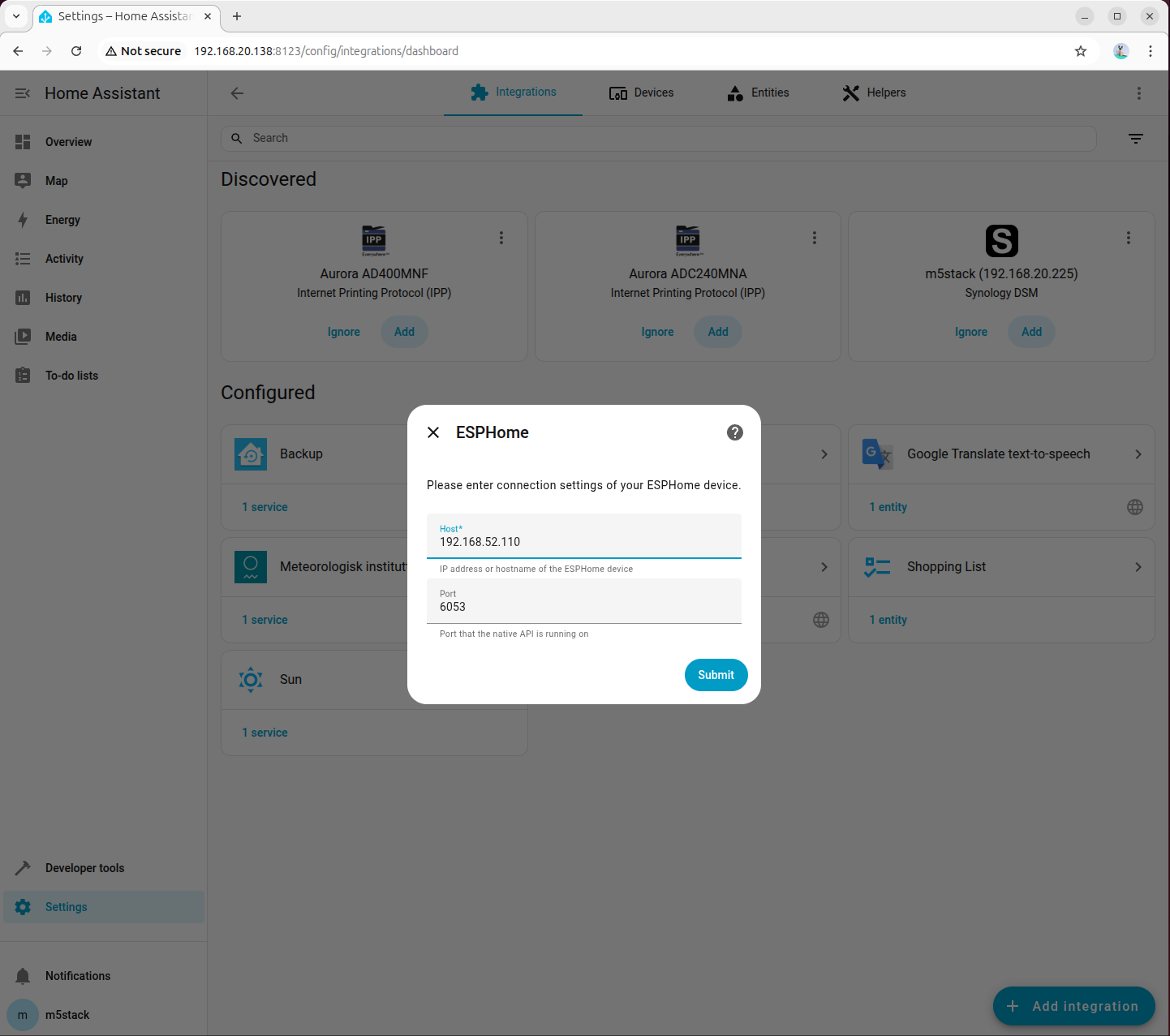

- Enter the Home Assistant Settings interface and select Add Device

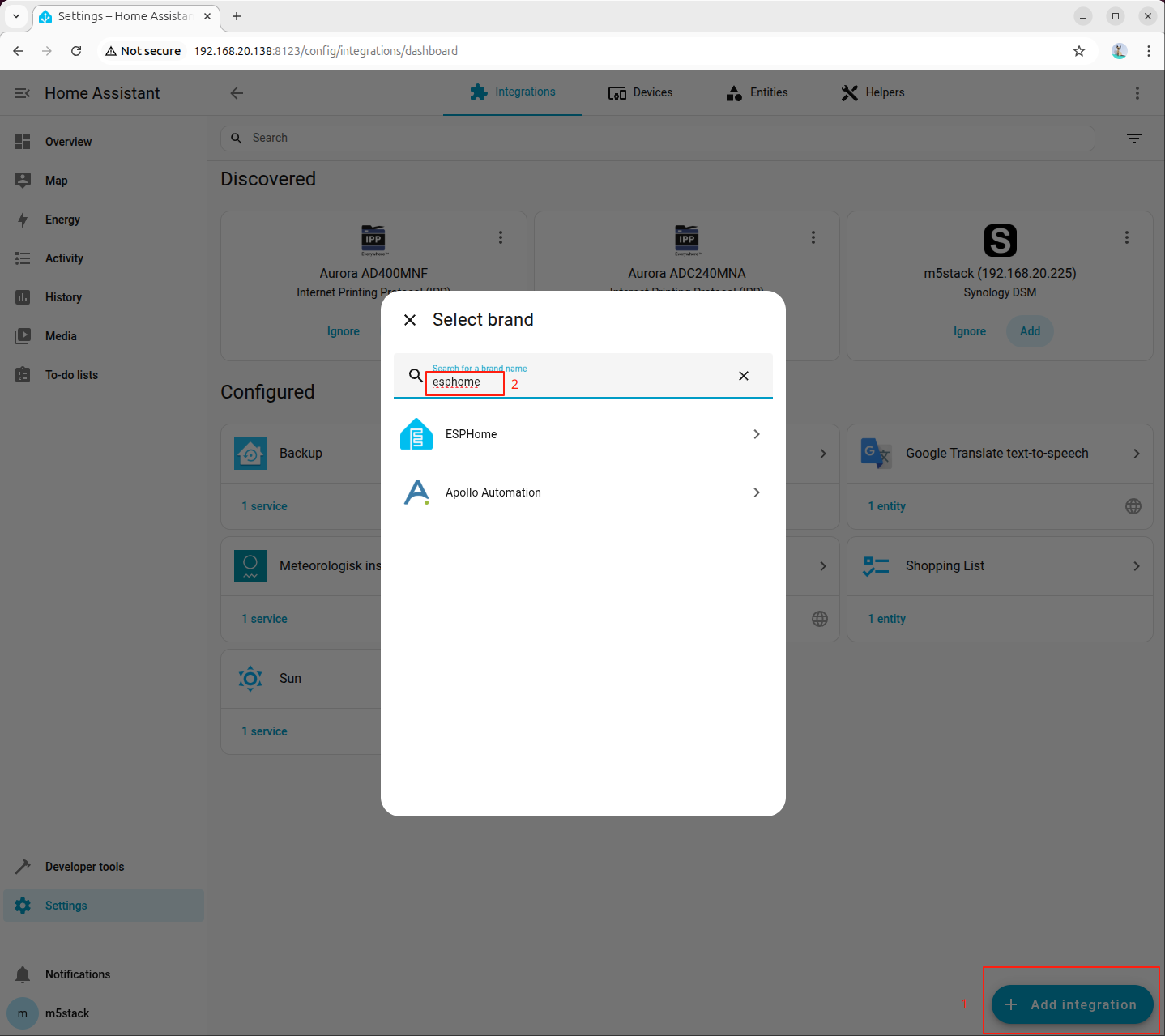

- Search for ESPHome in the integration list

- Enter the device IP address in the Host field, and enter the port number defined in the YAML configuration file in the Port field

- Enter the encryption key defined in the YAML configuration file

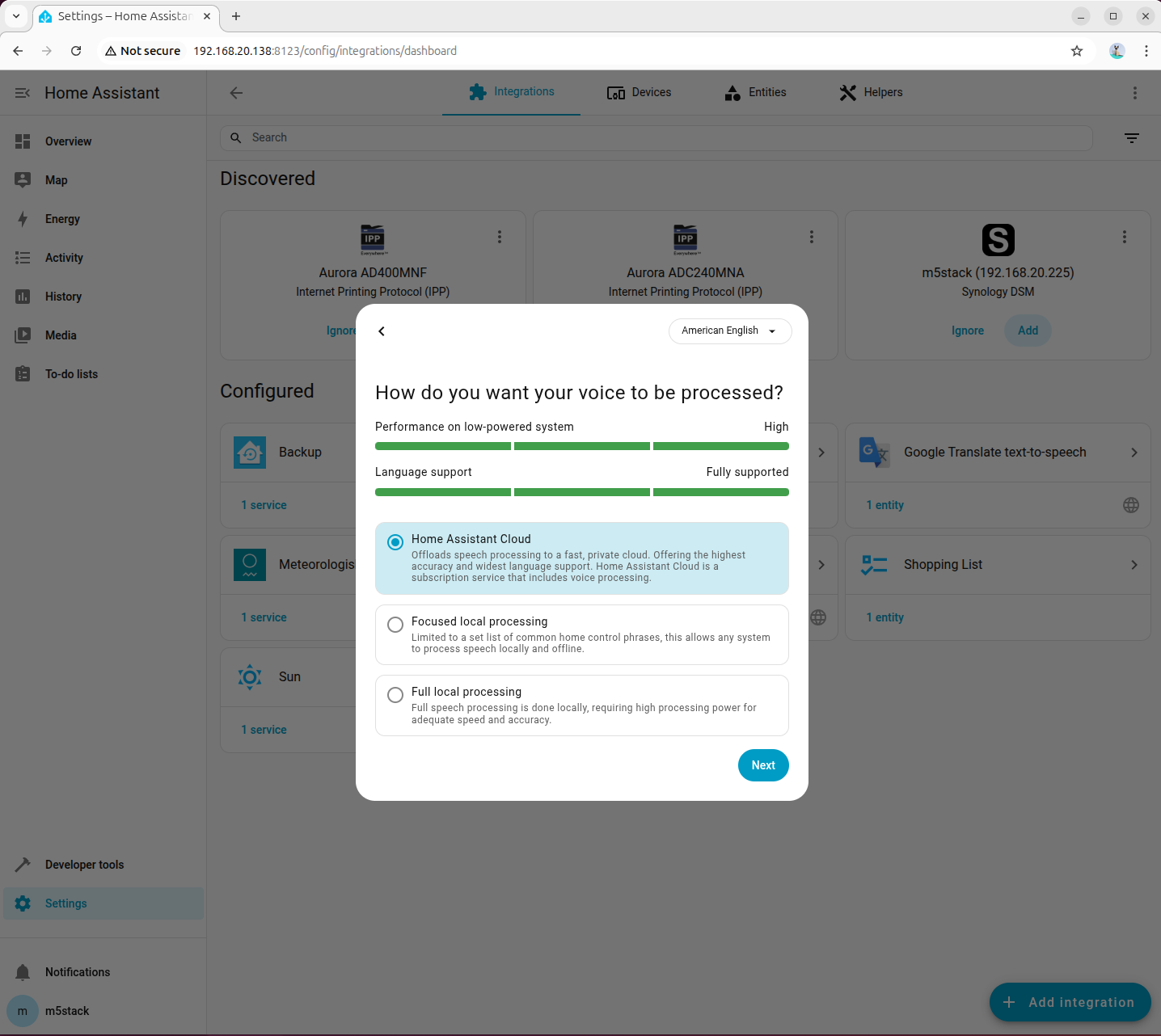

- For now, select Cloud Processing for the voice processing mode

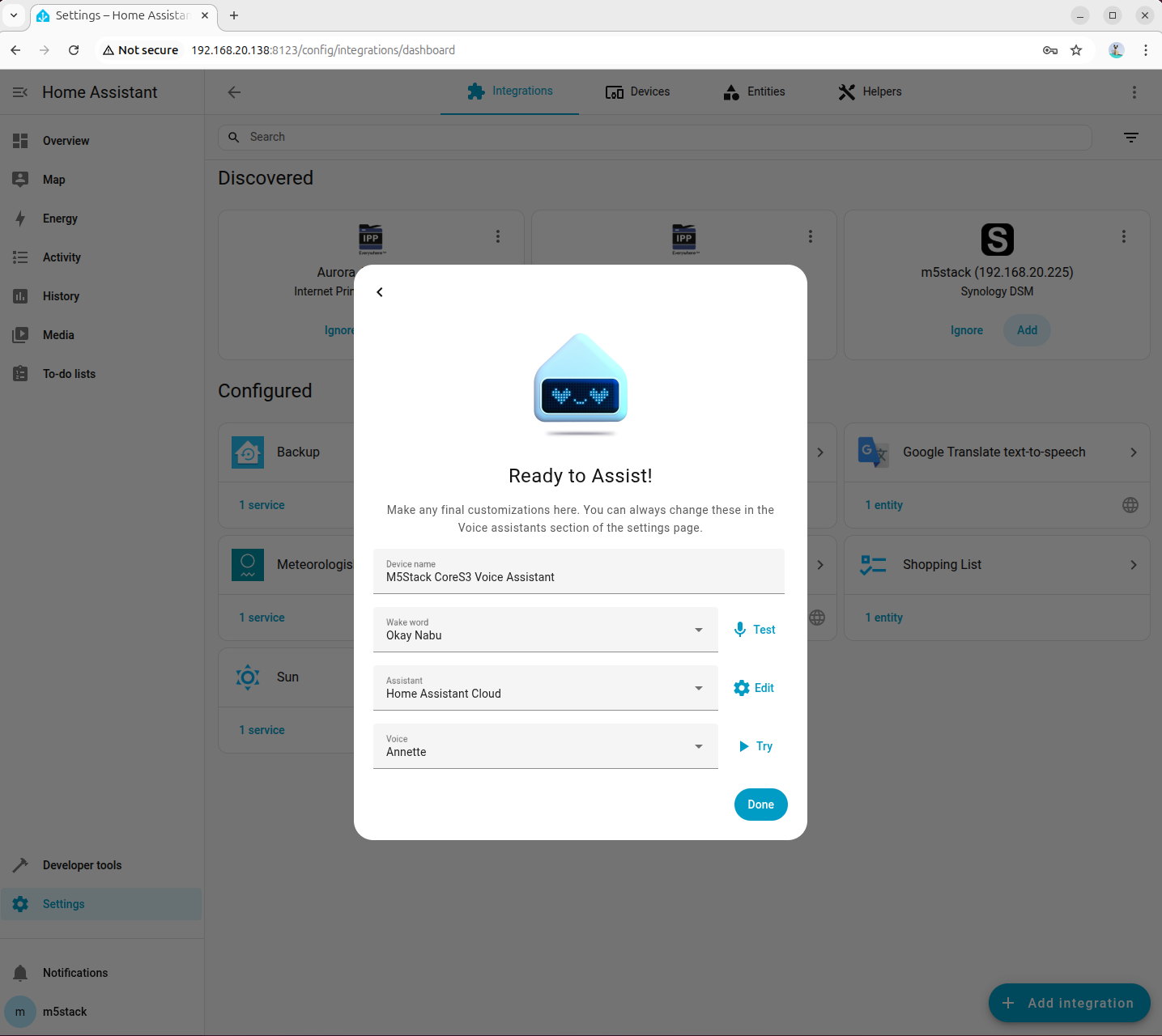

- Configure the voice wake word and TTS engine parameters

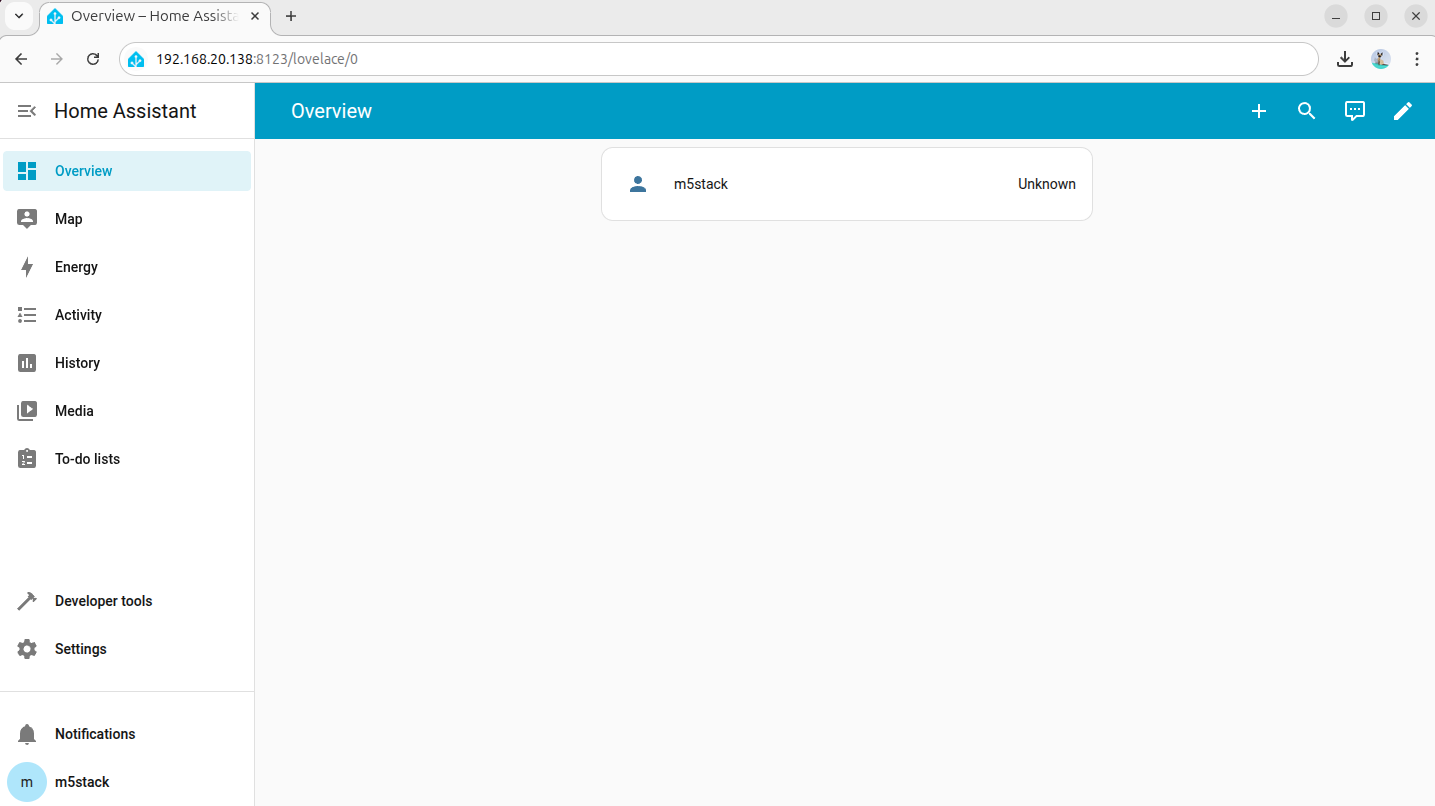

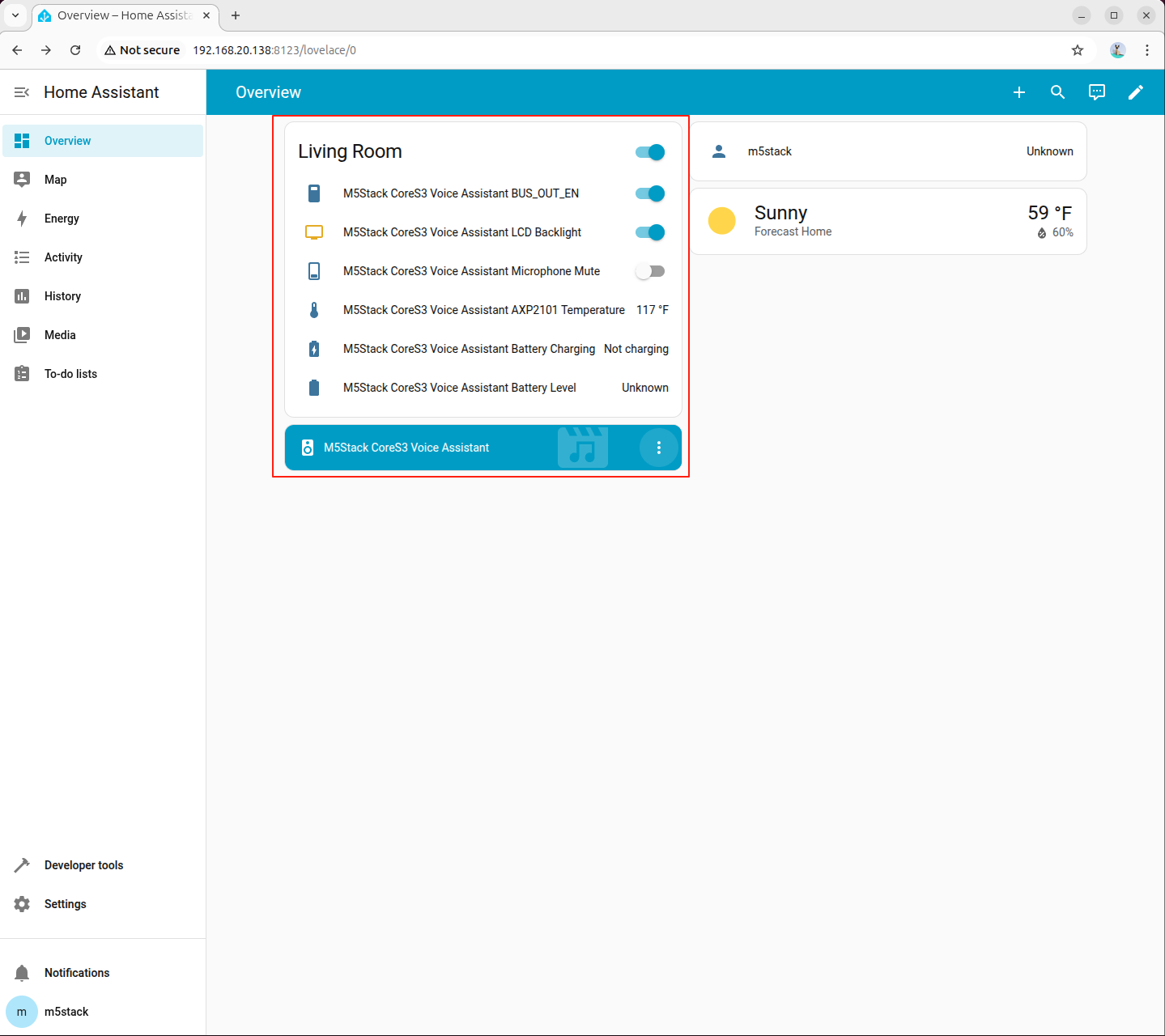

- After completing the configuration, the device will be displayed on the Home Assistant Overview page

6. Configure Local Voice Assistant

Using the Wyoming Protocol, local speech recognition and synthesis can be integrated into Home Assistant to achieve a fully offline voice assistant experience.
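For orientation, the sketch below illustrates how a Wyoming event is framed on the wire, based on the published protocol description: one JSON header line, optionally followed by a JSON data section and a binary payload whose lengths are announced in the header. It is a simplified, standard-library-only illustration. The names encode_event and decode_event are hypothetical, and the wyoming package used in the following sections handles the real framing.

```python
import io
import json
from typing import BinaryIO, Optional, Tuple


def encode_event(event_type: str, data: Optional[dict] = None, payload: bytes = b"") -> bytes:
    """Serialize one event: JSON header line, then optional data and payload bytes."""
    header = {"type": event_type}
    data_bytes = json.dumps(data).encode("utf-8") if data else b""
    if data_bytes:
        header["data_length"] = len(data_bytes)
    if payload:
        header["payload_length"] = len(payload)
    return json.dumps(header).encode("utf-8") + b"\n" + data_bytes + payload


def decode_event(stream: BinaryIO) -> Tuple[str, dict, bytes]:
    """Read one event from a binary stream and return (type, data, payload)."""
    header = json.loads(stream.readline())
    # Data may be embedded in the header or sent as a separate section.
    data = dict(header.get("data") or {})
    data_length = header.get("data_length", 0)
    if data_length:
        data.update(json.loads(stream.read(data_length)))
    payload = stream.read(header.get("payload_length", 0))
    return header["type"], data, payload


if __name__ == "__main__":
    raw = encode_event("audio-chunk", {"rate": 16000, "width": 2, "channels": 1}, b"\x00\x00")
    print(decode_event(io.BytesIO(raw)))
```

An audio chunk therefore carries its sample rate, sample width, and channel count as data, and the raw PCM samples as payload.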

6.1 Configure Speech-to-Text (ASR)

Step 1: Install Dependencies and Models

Ensure that the system has installed the required dependencies and models for speech recognition:

    apt install lib-llm llm-sys llm-asr llm-openai-api llm-model-sense-voice-small-10s-ax650
    pip install openai wyoming

Step 2: Create the Wyoming Speech-to-Text Service

Create a new wyoming_whisper_service.py file on the AI Pyramid and copy the following code:

    #!/usr/bin/env python3
    # SPDX-FileCopyrightText: 2026 M5Stack Technology CO LTD
    #
    # SPDX-License-Identifier: MIT
    """
    Wyoming protocol server for an OpenAI-compatible SenseVoice API.
    Compatible with Wyoming protocol 1.8.0 for SenseVoice transcription.
    """

    import argparse
    import asyncio
    import io
    import logging
    import wave
    from functools import partial
    from typing import Optional

    from openai import OpenAI
    from wyoming.asr import Transcribe, Transcript
    from wyoming.audio import AudioChunk, AudioStart, AudioStop
    from wyoming.event import Event
    from wyoming.info import AsrModel, AsrProgram, Attribution, Info
    from wyoming.server import AsyncServer, AsyncEventHandler

    _LOGGER = logging.getLogger(__name__)


    class SenseVoiceEventHandler(AsyncEventHandler):
        """Handle Wyoming protocol audio transcription requests."""

        def __init__(
            self,
            wyoming_info: Info,
            client: OpenAI,
            model: str,
            language: Optional[str] = None,
            *args,
            **kwargs,
        ) -> None:
            super().__init__(*args, **kwargs)
            self.client = client
            self.wyoming_info_event = wyoming_info.event()
            self.model = model
            self.language = language
            # Audio buffer state for a single transcription request.
            self.audio_buffer: Optional[io.BytesIO] = None
            self.wav_file: Optional[wave.Wave_write] = None
            _LOGGER.info("Handler initialized with model: %s", model)

        async def handle_event(self, event: Event) -> bool:
            """Handle Wyoming protocol events."""
            # Service info request.
            if event.type == "describe":
                _LOGGER.debug("Received describe request")
                await self.write_event(self.wyoming_info_event)
                _LOGGER.info("Sent info response")
                return True

            # Transcription request.
            if Transcribe.is_type(event.type):
                transcribe = Transcribe.from_event(event)
                _LOGGER.info("Transcribe request: language=%s", transcribe.language)
                # Reset audio buffers for the new request.
                self.audio_buffer = None
                self.wav_file = None
                return True

            # Audio stream starts.
            if AudioStart.is_type(event.type):
                _LOGGER.debug("Audio start")
                return True

            # Audio stream chunk.
            if AudioChunk.is_type(event.type):
                chunk = AudioChunk.from_event(event)
                # Initialize WAV writer on the first chunk.
                if self.wav_file is None:
                    _LOGGER.debug("Creating WAV buffer")
                    self.audio_buffer = io.BytesIO()
                    self.wav_file = wave.open(self.audio_buffer, "wb")
                    self.wav_file.setframerate(chunk.rate)
                    self.wav_file.setsampwidth(chunk.width)
                    self.wav_file.setnchannels(chunk.channels)
                # Append raw audio frames.
                self.wav_file.writeframes(chunk.audio)
                return True

            # Audio stream ends; perform transcription.
            if AudioStop.is_type(event.type):
                _LOGGER.info("Audio stop - starting transcription")
                if self.wav_file is None:
                    _LOGGER.warning("No audio data received")
                    return False

                try:
                    # Finalize WAV payload.
                    self.wav_file.close()
                    # Extract audio bytes.
                    self.audio_buffer.seek(0)
                    audio_data = self.audio_buffer.getvalue()
                    # Build in-memory file for the API client.
                    audio_file = io.BytesIO(audio_data)
                    audio_file.name = "audio.wav"
                    # Call the transcription API.
                    _LOGGER.info("Calling transcription API")
                    transcription_params = {
                        "model": self.model,
                        "file": audio_file,
                    }
                    # Add language if explicitly set.
                    if self.language:
                        transcription_params["language"] = self.language
                    result = self.client.audio.transcriptions.create(**transcription_params)
                    # Extract transcript text.
                    if hasattr(result, "text"):
                        transcript_text = result.text
                    else:
                        transcript_text = str(result)
                    _LOGGER.info("Transcription result: %s", transcript_text)
                    # Send transcript back to the client.
                    await self.write_event(Transcript(text=transcript_text).event())
                    _LOGGER.info("Sent transcript")
                except Exception as e:
                    _LOGGER.error("Transcription error: %s", e, exc_info=True)
                    # Send empty transcript on error to keep protocol flow.
                    await self.write_event(Transcript(text="").event())
                finally:
                    # Release buffers for the next request.
                    self.audio_buffer = None
                    self.wav_file = None
                return True

            return True


    async def main() -> None:
        """Program entrypoint."""
        parser = argparse.ArgumentParser(
            description="Wyoming protocol server for OpenAI-compatible SenseVoice API"
        )
        parser.add_argument(
            "--uri",
            default="tcp://0.0.0.0:10300",
            help="URI to listen on (default: tcp://0.0.0.0:10300)",
        )
        parser.add_argument(
            "--api-key",
            default="sk-",
            help="OpenAI API key (default: sk-)",
        )
        parser.add_argument(
            "--base-url",
            default="http://127.0.0.1:8000/v1",
            help="API base URL (default: http://127.0.0.1:8000/v1)",
        )
        parser.add_argument(
            "--model",
            default="sense-voice-small-10s-ax650",
            help="Model name (default: sense-voice-small-10s-ax650)",
        )
        parser.add_argument(
            "--language",
            help="Language code (e.g., en, zh, auto)",
        )
        parser.add_argument(
            "--debug",
            action="store_true",
            help="Enable debug logging",
        )
        args = parser.parse_args()

        # Configure logging.
        logging.basicConfig(
            level=logging.DEBUG if args.debug else logging.INFO,
            format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
        )
        _LOGGER.info("Starting Wyoming SenseVoice service")
        _LOGGER.info("API Base URL: %s", args.base_url)
        _LOGGER.info("Model: %s", args.model)
        _LOGGER.info("Language: %s", args.language or "auto")

        # Initialize OpenAI client.
        client = OpenAI(
            api_key=args.api_key,
            base_url=args.base_url,
        )

        # Build Wyoming service metadata (protocol 1.8.0 compatible).
        wyoming_info = Info(
            asr=[
                AsrProgram(
                    name=args.model,
                    description=f"OpenAI-compatible SenseVoice API ({args.model})",
                    attribution=Attribution(
                        name="SenseVoice",
                        url="https://github.com/FunAudioLLM/SenseVoice",
                    ),
                    version="1.0.0",
                    installed=True,
                    models=[
                        AsrModel(
                            name=args.model,
                            description=f"SenseVoice model: {args.model}",
                            attribution=Attribution(
                                name="SenseVoice",
                                url="https://github.com/FunAudioLLM/SenseVoice",
                            ),
                            installed=True,
                            languages=(
                                ["zh", "en", "yue", "ja", "ko"]
                                if not args.language
                                else [args.language]
                            ),
                            version="1.0.0",
                        )
                    ],
                )
            ],
        )
        _LOGGER.info("Service info created")

        # Create server.
        server = AsyncServer.from_uri(args.uri)
        _LOGGER.info("Server listening on %s", args.uri)

        # Run server loop.
        try:
            await server.run(
                partial(
                    SenseVoiceEventHandler,
                    wyoming_info,
                    client,
                    args.model,
                    args.language,
                )
            )
        except KeyboardInterrupt:
            _LOGGER.info("Server stopped by user")
        except Exception as e:
            _LOGGER.error("Server error: %s", e, exc_info=True)


    if __name__ == "__main__":
        asyncio.run(main())

Step 3: Start the Speech-to-Text Service

Run the following command to start the service (replace the IP address with the actual AI Pyramid address):

    python wyoming_whisper_service.py --base-url http://192.168.20.138:8000/v1

Replace 192.168.20.138 with the actual IP address of your AI Pyramid device.

Example output after a successful startup:

    root@m5stack-AI-Pyramid:~/wyoming-openai-stt# python wyoming_whisper_service.py --base-url http://192.168.20.138:8000/v1
    2026-02-04 16:29:45,121 - __main__ - INFO - Starting Wyoming Whisper service
    2026-02-04 16:29:45,122 - __main__ - INFO - API Base URL: http://192.168.20.138:8000/v1
    2026-02-04 16:29:45,122 - __main__ - INFO - Model: sense-voice-small-10s-ax650
    2026-02-04 16:29:45,123 - __main__ - INFO - Language: auto
    2026-02-04 16:29:46,098 - __main__ - INFO - Service info created
    2026-02-04 16:29:46,099 - __main__ - INFO - Server listening on tcp://0.0.0.0:10300

Step 4: Add Wyoming Protocol in Home Assistant

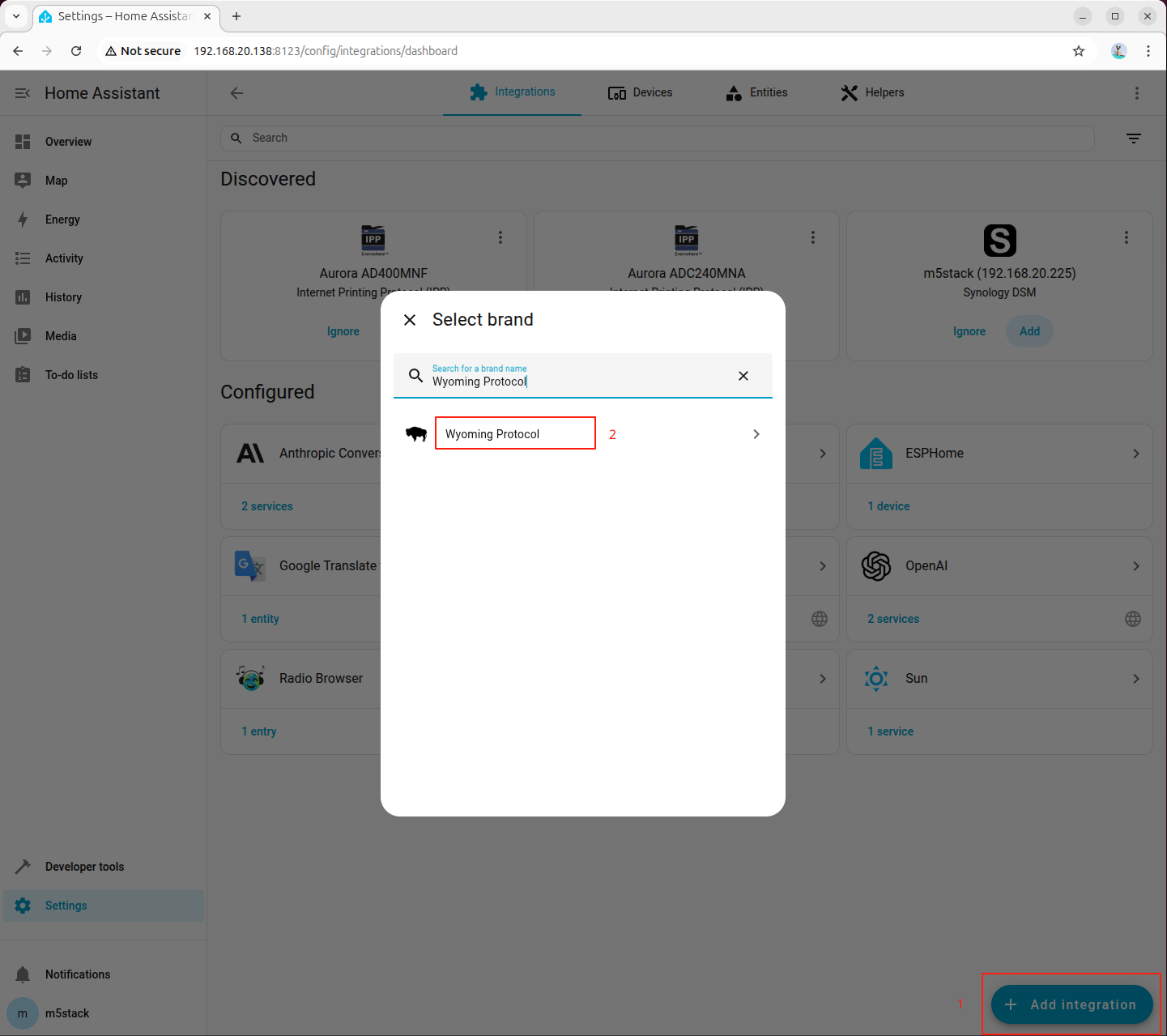

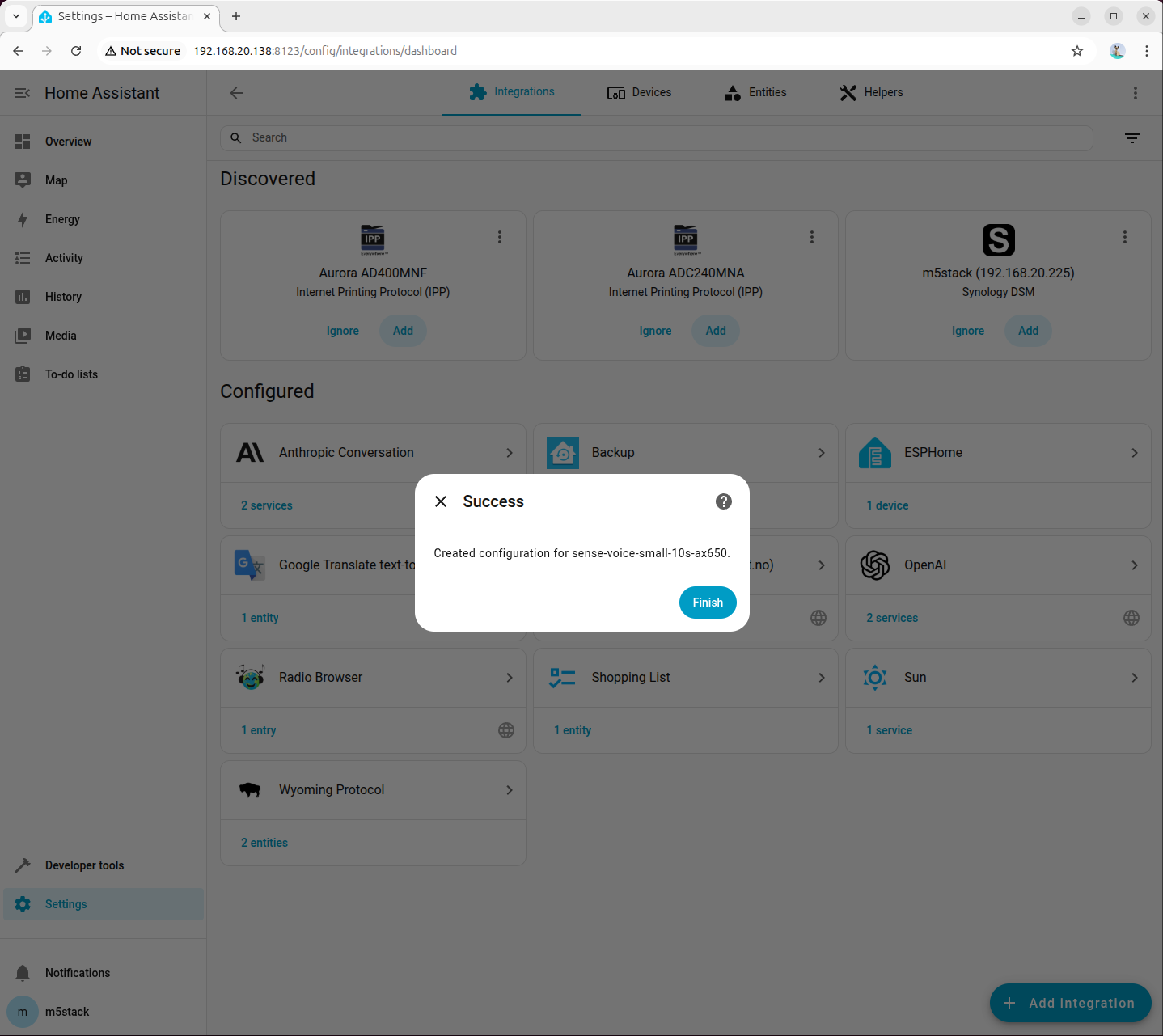

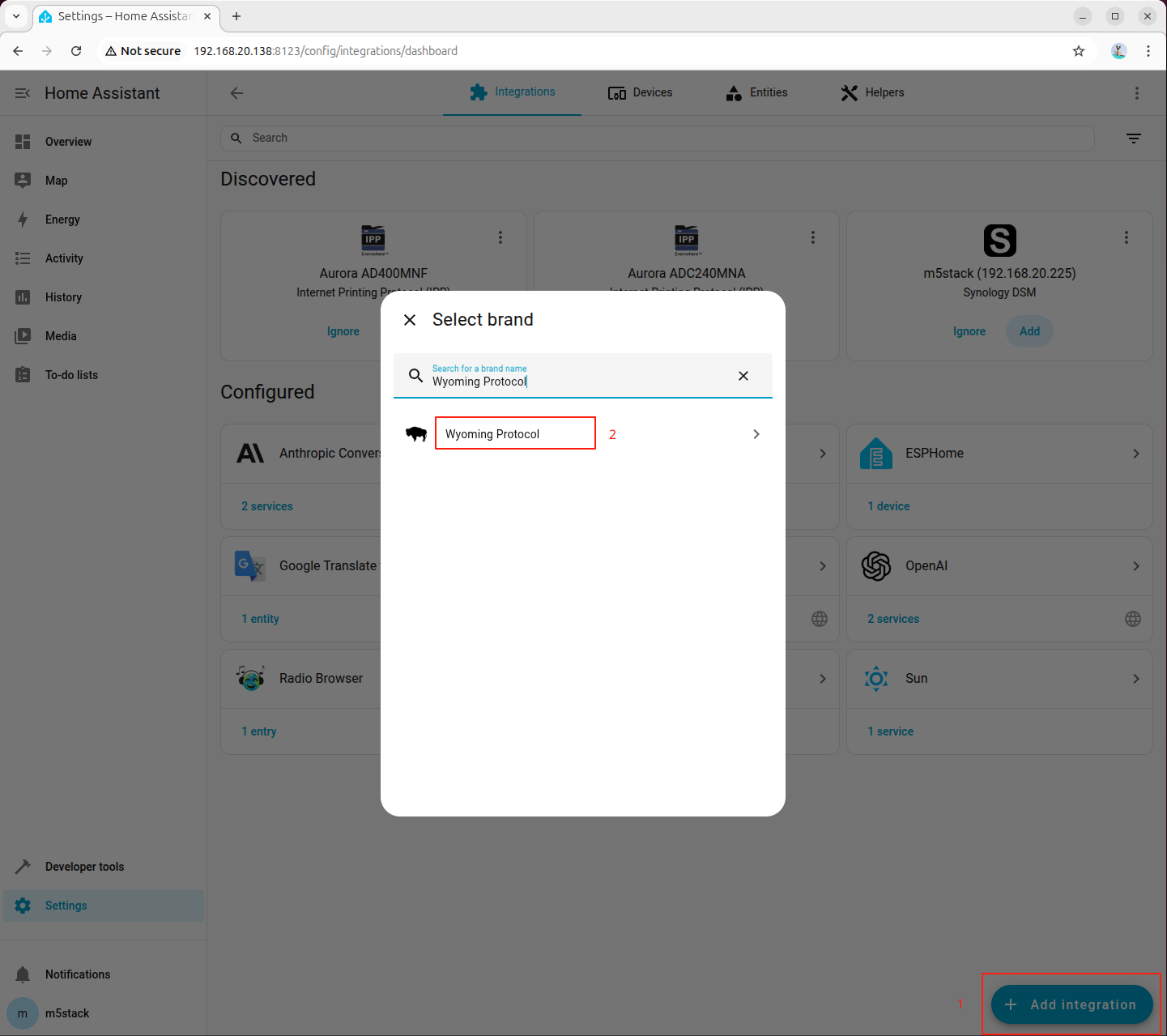

Open the Home Assistant settings, search for and add the Wyoming Protocol integration:

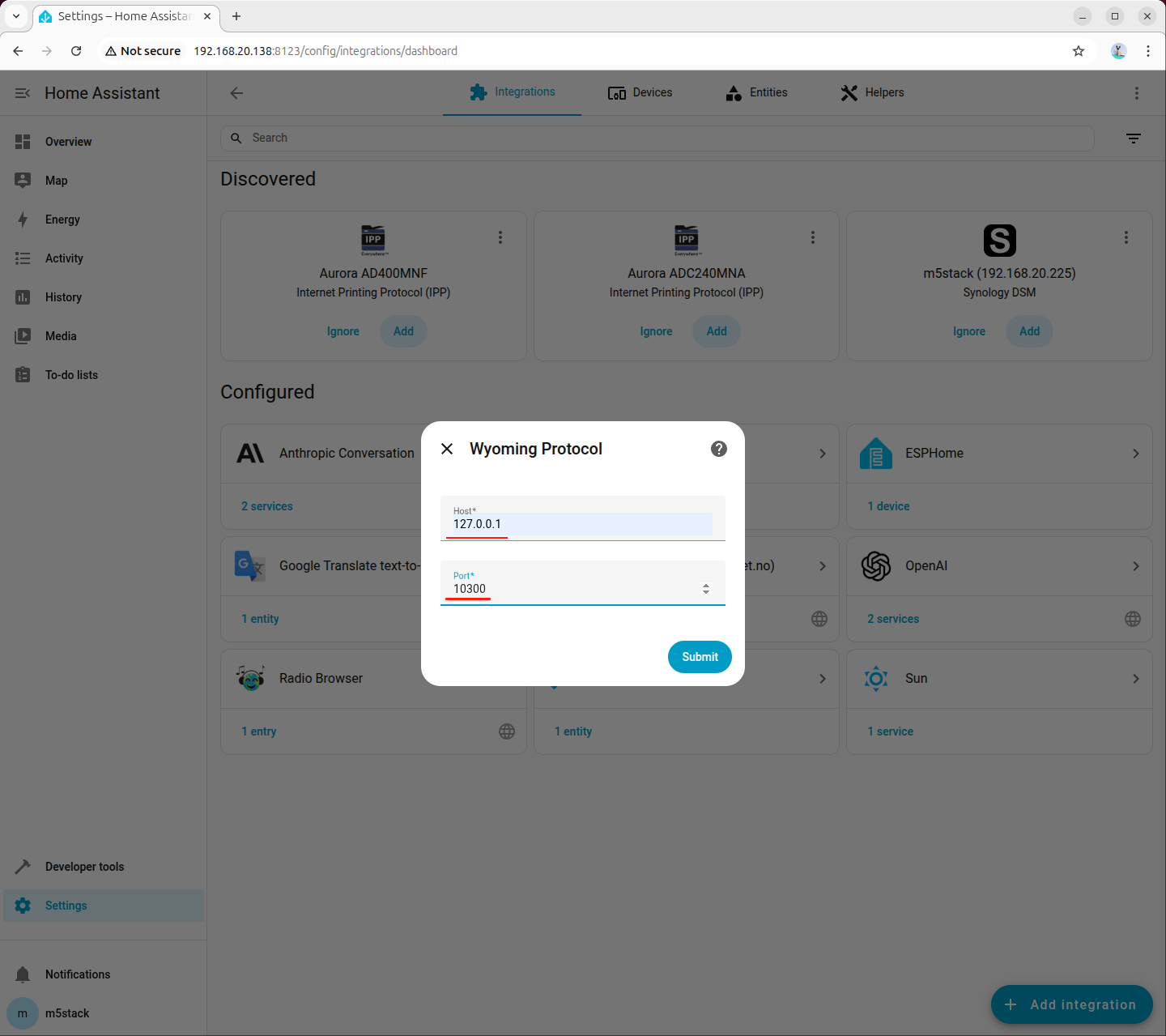

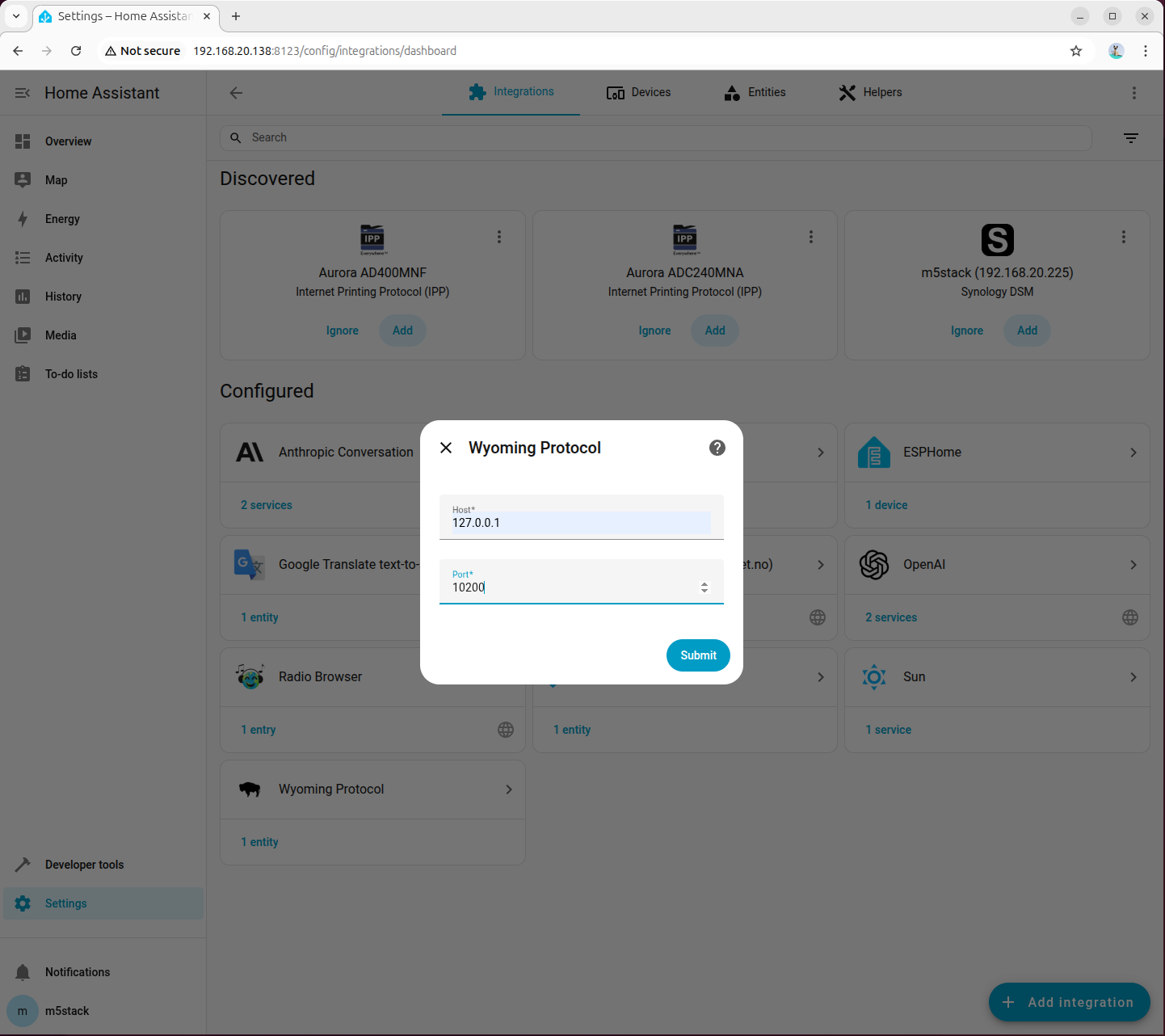

Step 5: Configure Connection Parameters

Configure the Wyoming Protocol connection parameters:

- Host: 127.0.0.1

- Port: 10300
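Before adding the integration, it may help to confirm that the service actually answers on that host and port. The sketch below sends a describe event and returns the type of the first event sent back, which should be info for the service started above. It assumes that the server accepts a bare JSON header line without a version field; probe_wyoming is a hypothetical helper name, not part of the wyoming package.

```python
import json
import socket


def probe_wyoming(host: str, port: int, timeout: float = 5.0) -> str:
    """Send a describe event and return the type of the first event in the reply."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        # A describe event has no data or payload, so the header line is the whole event.
        conn.sendall(b'{"type": "describe"}\n')
        with conn.makefile("rb") as reader:
            # Only the header line is parsed; any data section that follows is ignored.
            header = json.loads(reader.readline())
    return header["type"]
```

For example, probe_wyoming("127.0.0.1", 10300) can be run on the machine that hosts Home Assistant. A connection error suggests the service is not running or the host and port are wrong.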

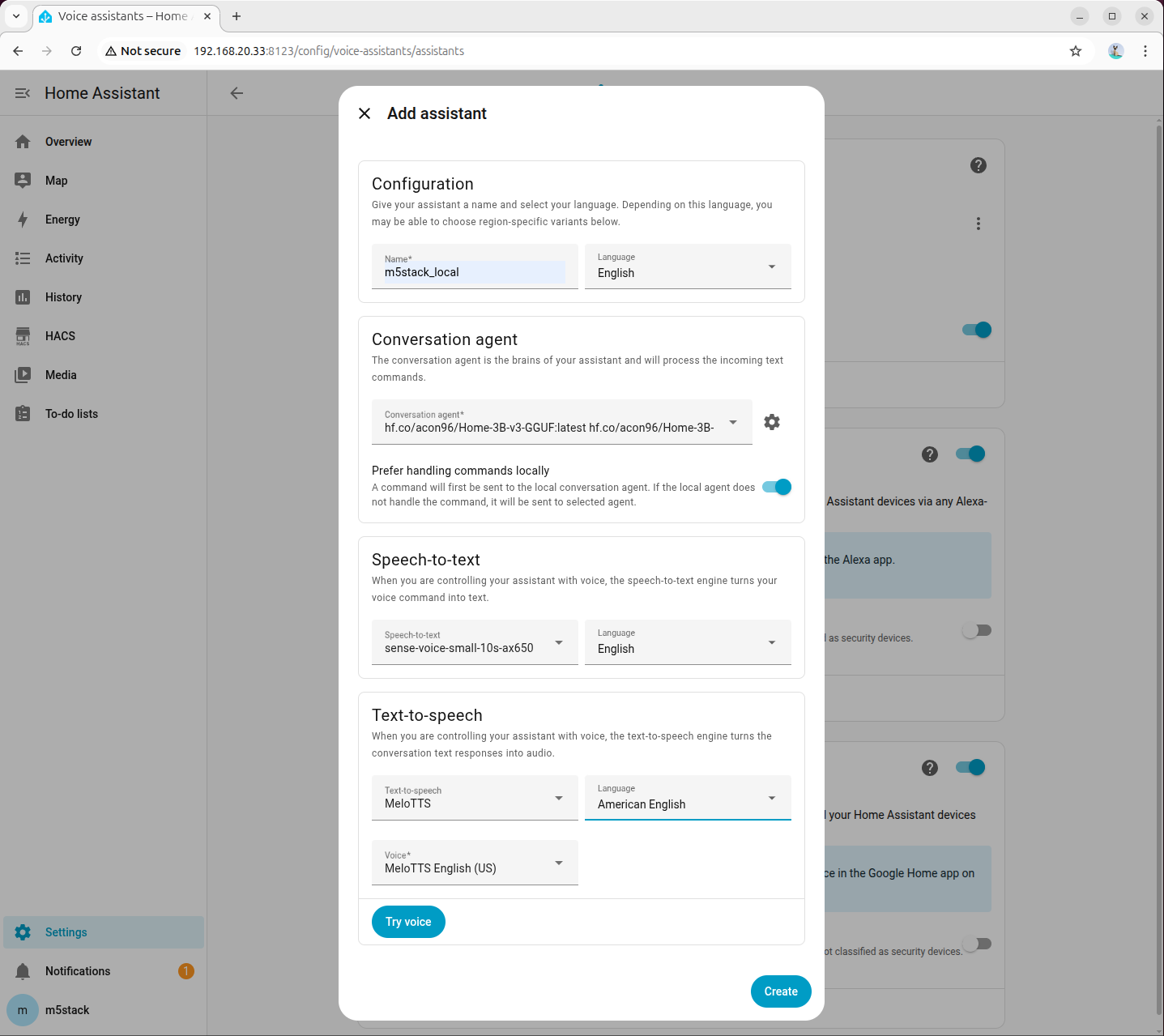

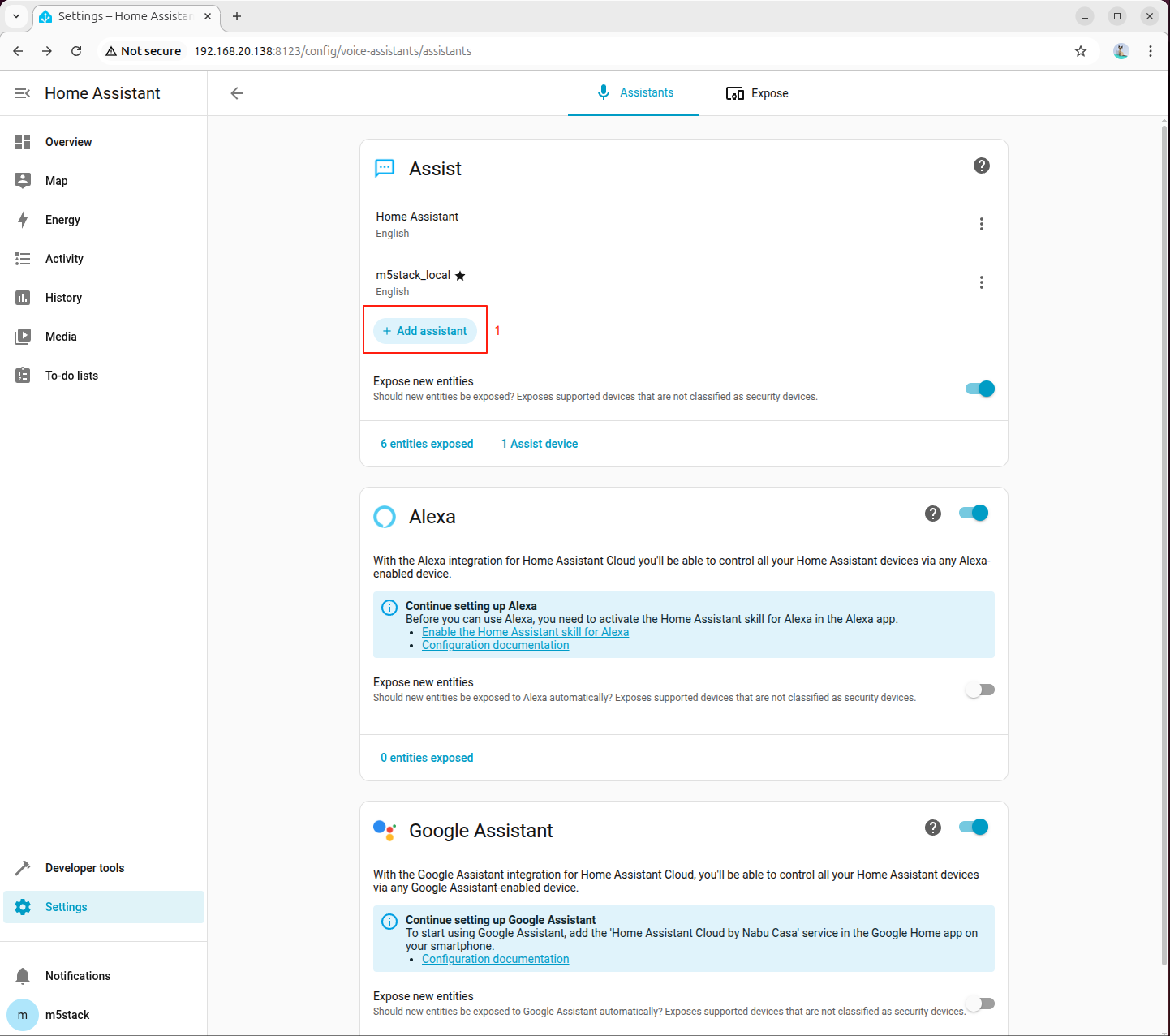

Step 6: Create a Voice Assistant

In Home Assistant settings, navigate to the Voice Assistants module and click to create a new voice assistant:

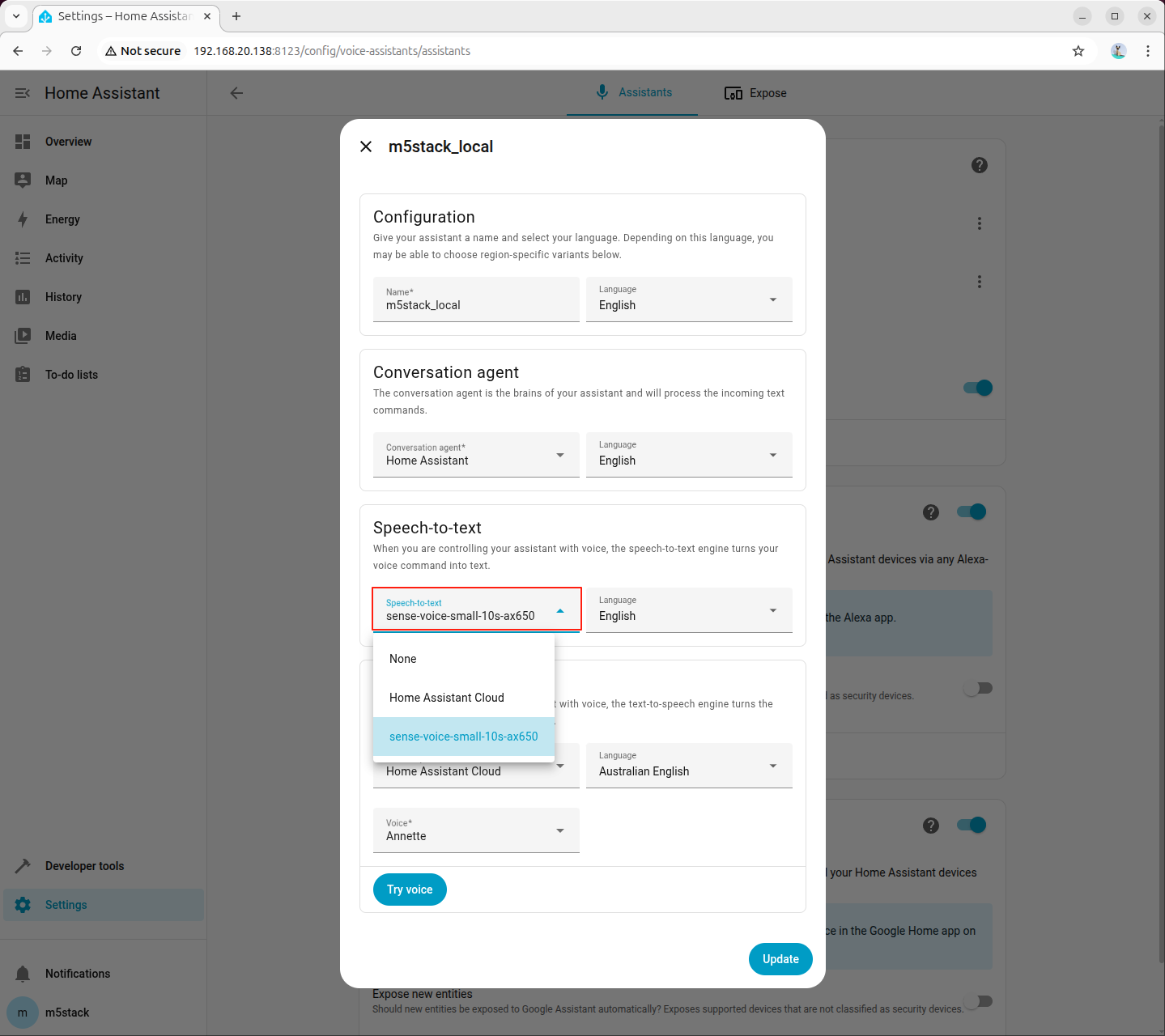

Step 7: Configure the ASR Model

Select the speech recognition model you just added, sense-voice-small-10s-ax650. Keep the language setting as default:

6.2 Configure Text-to-Speech (TTS)

Step 1: Install Dependencies and Models

Ensure that the system has installed the dependencies and models required for speech synthesis:

    apt install lib-llm llm-sys llm-melotts llm-openai-api llm-model-melotts-en-us-ax650
    pip install openai wyoming

Models for other languages are also available, such as llm-model-melotts-zh-cn-ax650, llm-model-melotts-ja-jp-ax650, etc. Install them as needed.

Step 2: Create the Wyoming Text-to-Speech Service

Create a new file named wyoming_openai_tts.py on the AI Pyramid and copy the following code into it:

    #!/usr/bin/env python3
    # SPDX-FileCopyrightText: 2024 M5Stack Technology CO LTD
    #
    # SPDX-License-Identifier: MIT
    """
    Wyoming protocol server for OpenAI API TTS service.
    Connects local OpenAI-compatible TTS API to Home Assistant.
    """

    import argparse
    import asyncio
    import io
    import logging
    import wave
    from functools import partial
    from typing import AsyncIterator

    from openai import OpenAI
    from wyoming.audio import AudioChunk, AudioStart, AudioStop
    from wyoming.event import Event
    from wyoming.info import Attribution, Info, TtsProgram, TtsVoice
    from wyoming.server import AsyncEventHandler, AsyncServer
    from wyoming.tts import Synthesize

    _LOGGER = logging.getLogger(__name__)

    # Default configuration
    DEFAULT_HOST = "0.0.0.0"
    DEFAULT_PORT = 10200
    DEFAULT_API_BASE_URL = "http://192.168.20.138:8000/v1"
    DEFAULT_MODEL = "melotts-zh-cn-ax650"
    DEFAULT_VOICE = "melotts-zh-cn-ax650"
    DEFAULT_RESPONSE_FORMAT = "wav"

    # Available voices for Wyoming protocol
    AVAILABLE_VOICES = [
        TtsVoice(
            name="melotts-en-au-ax650",
            description="MeloTTS English (AU)",
            attribution=Attribution(
                name="MeloTTS",
                url="https://huggingface.co/myshell-ai/MeloTTS-English",
            ),
            version="1.0.0",
            installed=True,
            languages=["en-au"],
        ),
        TtsVoice(
            name="melotts-en-default-ax650",
            description="MeloTTS English (Default)",
            attribution=Attribution(
                name="MeloTTS",
                url="https://huggingface.co/myshell-ai/MeloTTS-English",
            ),
            version="1.0.0",
            installed=True,
            languages=["en"],
        ),
        TtsVoice(
            name="melotts-en-us-ax650",
            description="MeloTTS English (US)",
            attribution=Attribution(
                name="MeloTTS",
                url="https://huggingface.co/myshell-ai/MeloTTS-English",
            ),
            version="1.0.0",
            installed=True,
            languages=["en-us"],
        ),
        TtsVoice(
            name="melotts-en-br-ax650",
            description="MeloTTS English (BR)",
            attribution=Attribution(
                name="MeloTTS",
                url="https://huggingface.co/myshell-ai/MeloTTS-English",
            ),
            version="1.0.0",
            installed=True,
            languages=["en-br"],
        ),
        TtsVoice(
            name="melotts-en-india-ax650",
            description="MeloTTS English (India)",
            attribution=Attribution(
                name="MeloTTS",
                url="https://huggingface.co/myshell-ai/MeloTTS-English",
            ),
            version="1.0.0",
            installed=True,
            languages=["en-in"],
        ),
        TtsVoice(
            name="melotts-ja-jp-ax650",
            description="MeloTTS Japanese (JP)",
            attribution=Attribution(
                name="MeloTTS",
                url="https://huggingface.co/myshell-ai/MeloTTS-Japanese",
            ),
            version="1.0.0",
            installed=True,
            languages=["ja-jp"],
        ),
        TtsVoice(
            name="melotts-es-es-ax650",
            description="MeloTTS Spanish (ES)",
            attribution=Attribution(
                name="MeloTTS",
                url="https://huggingface.co/myshell-ai/MeloTTS-Spanish",
            ),
            version="1.0.0",
            installed=True,
            languages=["es-es"],
        ),
        TtsVoice(
            name="melotts-zh-cn-ax650",
            description="MeloTTS Chinese (CN)",
            attribution=Attribution(
                name="MeloTTS",
                url="https://huggingface.co/myshell-ai/MeloTTS-Chinese",
            ),
            version="1.0.0",
            installed=True,
            languages=["zh-cn"],
        ),
    ]

    # Map voice name -> model name for automatic switching
    VOICE_MODEL_MAP = {voice.name: voice.name for voice in AVAILABLE_VOICES}


    class OpenAITTSEventHandler:
        """Event handler for Wyoming protocol with OpenAI TTS."""

        def __init__(
            self,
            api_key: str,
            base_url: str,
            model: str,
            default_voice: str,
            response_format: str,
        ):
            """Initialize the event handler."""
            self.api_key = api_key
            self.base_url = base_url
            self.model = model
            self.default_voice = default_voice
            self.response_format = response_format
            self.voice_model_map = VOICE_MODEL_MAP
            # Initialize OpenAI client
            self.client = OpenAI(
                api_key=api_key,
                base_url=base_url,
            )
            _LOGGER.info(
                "Initialized OpenAI TTS handler with base_url=%s, model=%s",
                base_url,
                model,
            )

        async def handle_event(self, event: Event) -> AsyncIterator[Event]:
            """Handle a Wyoming protocol event, yielding the response events."""
            if Synthesize.is_type(event.type):
                synthesize = Synthesize.from_event(event)
                _LOGGER.info("Synthesizing text: %s", synthesize.text)
                # Use specified voice or default
                voice = synthesize.voice.name if synthesize.voice else self.default_voice
                model = self.voice_model_map.get(voice, self.model)
                try:
                    # Generate speech using OpenAI API
                    audio_data = await asyncio.to_thread(
                        self._synthesize_speech,
                        synthesize.text,
                        voice,
                        model,
                    )
                    # Read WAV file properties
                    with wave.open(io.BytesIO(audio_data), "rb") as wav_file:
                        sample_rate = wav_file.getframerate()
                        sample_width = wav_file.getsampwidth()
                        channels = wav_file.getnchannels()
                        audio_bytes = wav_file.readframes(wav_file.getnframes())
                    _LOGGER.info(
                        "Generated audio: %d bytes, %d Hz, %d channels",
                        len(audio_bytes),
                        sample_rate,
                        channels,
                    )
                    # Send audio start event
                    yield AudioStart(
                        rate=sample_rate,
                        width=sample_width,
                        channels=channels,
                    ).event()
                    # Send audio in chunks
                    chunk_size = 8192
                    for i in range(0, len(audio_bytes), chunk_size):
                        chunk = audio_bytes[i:i + chunk_size]
                        yield AudioChunk(
                            audio=chunk,
                            rate=sample_rate,
                            width=sample_width,
                            channels=channels,
                        ).event()
                    # Send audio stop event
                    yield AudioStop().event()
                except Exception as err:
                    _LOGGER.exception("Error during synthesis: %s", err)
                    raise

        def _synthesize_speech(self, text: str, voice: str, model: str) -> bytes:
            """Synthesize speech using OpenAI API (blocking call)."""
            with self.client.audio.speech.with_streaming_response.create(
                model=model,
                voice=voice,
                response_format=self.response_format,
                input=text,
            ) as response:
                # Read all audio data
                audio_data = b""
                for chunk in response.iter_bytes(chunk_size=8192):
                    audio_data += chunk
                return audio_data


    async def main():
        """Run the Wyoming protocol server."""
        parser = argparse.ArgumentParser(description="Wyoming OpenAI TTS Server")
        parser.add_argument(
            "--uri",
            default=f"tcp://{DEFAULT_HOST}:{DEFAULT_PORT}",
            help="URI to bind the server (default: tcp://0.0.0.0:10200)",
        )
        parser.add_argument(
            "--api-key",
            default="sk-your-key",
            help="OpenAI API key (default: sk-your-key)",
        )
        parser.add_argument(
            "--base-url",
            default=DEFAULT_API_BASE_URL,
            help=f"OpenAI API base URL (default: {DEFAULT_API_BASE_URL})",
        )
        parser.add_argument(
            "--model",
            default=DEFAULT_MODEL,
            help=f"TTS model name (default: {DEFAULT_MODEL})",
        )
        parser.add_argument(
            "--voice",
            default=DEFAULT_VOICE,
            help=f"Default voice name (default: {DEFAULT_VOICE})",
        )
        parser.add_argument(
            "--response-format",
            default=DEFAULT_RESPONSE_FORMAT,
            choices=["mp3", "opus", "aac", "flac", "wav", "pcm"],
            help=f"Audio response format (default: {DEFAULT_RESPONSE_FORMAT})",
        )
        parser.add_argument(
            "--debug",
            action="store_true",
            help="Enable debug logging",
        )
        args = parser.parse_args()

        # Setup logging
        logging.basicConfig(
            level=logging.DEBUG if args.debug else logging.INFO,
            format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
        )
        _LOGGER.info("Starting Wyoming OpenAI TTS Server")
        _LOGGER.info("URI: %s", args.uri)
        _LOGGER.info("Model: %s", args.model)
        _LOGGER.info("Default voice: %s", args.voice)

        # Create Wyoming info
        wyoming_info = Info(
            tts=[
                TtsProgram(
                    name="MeloTTS",
                    description="OpenAI compatible TTS service",
                    attribution=Attribution(
                        name="MeloTTS",
                        url="https://huggingface.co/myshell-ai/MeloTTS-English",
                    ),
                    version="1.0.0",
                    installed=True,
                    voices=AVAILABLE_VOICES,
                )
            ],
        )

        # Create event handler
        event_handler = OpenAITTSEventHandler(
            api_key=args.api_key,
            base_url=args.base_url,
            model=args.model,
            default_voice=args.voice,
            response_format=args.response_format,
        )

        # Start server
        server = AsyncServer.from_uri(args.uri)
        _LOGGER.info("Server started, waiting for connections...")
        await server.run(
            partial(
                OpenAITtsHandler,
                wyoming_info=wyoming_info,
                event_handler=event_handler,
            )
        )


    class OpenAITtsHandler(AsyncEventHandler):
        """Wyoming async event handler for OpenAI TTS."""

        def __init__(
            self,
            reader: asyncio.StreamReader,
            writer: asyncio.StreamWriter,
            wyoming_info: Info,
            event_handler: OpenAITTSEventHandler,
        ) -> None:
            super().__init__(reader, writer)
            self._wyoming_info = wyoming_info
            self._event_handler = event_handler
            self._sent_info = False

        async def handle_event(self, event: Event) -> bool:
            # Send the service info once, on the first event of each connection.
            if not self._sent_info:
                await self.write_event(self._wyoming_info.event())
                self._sent_info = True
                _LOGGER.info("Client connected")
            _LOGGER.debug("Received event: %s", event.type)
            try:
                async for response_event in self._event_handler.handle_event(event):
                    await self.write_event(response_event)
            except Exception as err:
                _LOGGER.exception("Error handling connection: %s", err)
                return False
            return True

        async def disconnect(self) -> None:
            _LOGGER.info("Client disconnected")


    if __name__ == "__main__":
        asyncio.run(main())

Step 3: Start the Text-to-Speech Service

使用以下命令启动 Wyoming 文本转语音服务,注意替换为你的 AI Pyramid IP 地址:

python wyoming_openai_tts.py --base-url=http://192.168.20.138:8000/v1root@m5stack-AI-Pyramid:~/wyoming-openai-tts# python wyoming_openai_tts.py --base_url=http://192.168.20.138:8000/v1

2026-02-04 17:03:18,152 - __main__ - INFO - Starting Wyoming OpenAI TTS Server

2026-02-04 17:03:18,153 - __main__ - INFO - URI: tcp://0.0.0.0:10200

2026-02-04 17:03:18,153 - __main__ - INFO - Model: melotts-zh-cn-ax650

2026-02-04 17:03:18,153 - __main__ - INFO - Default voice: melotts-zh-cn-ax650

2026-02-04 17:03:19,081 - __main__ - INFO - Initialized OpenAI TTS handler with base_url=http://192.168.20.138:8000/v1, model=melotts-zh-cn-ax650

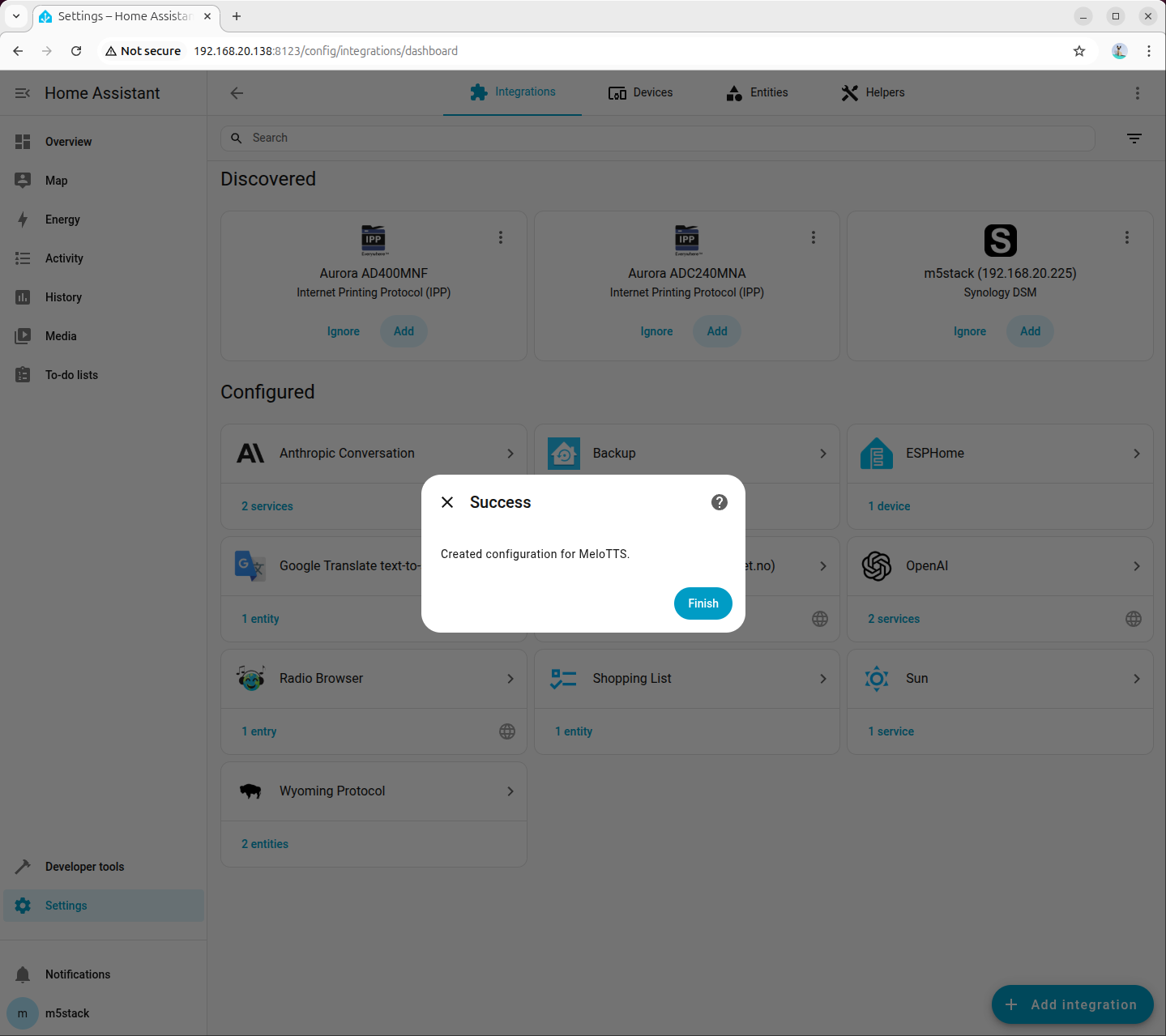

2026-02-04 17:03:19,082 - __main__ - INFO - Server started, waiting for connections...步骤 4:在 Home Assistant 中添加 Wyoming Protocol

打开 Home Assistant 设置,搜索并添加 'Wyoming Protocol' 集成:

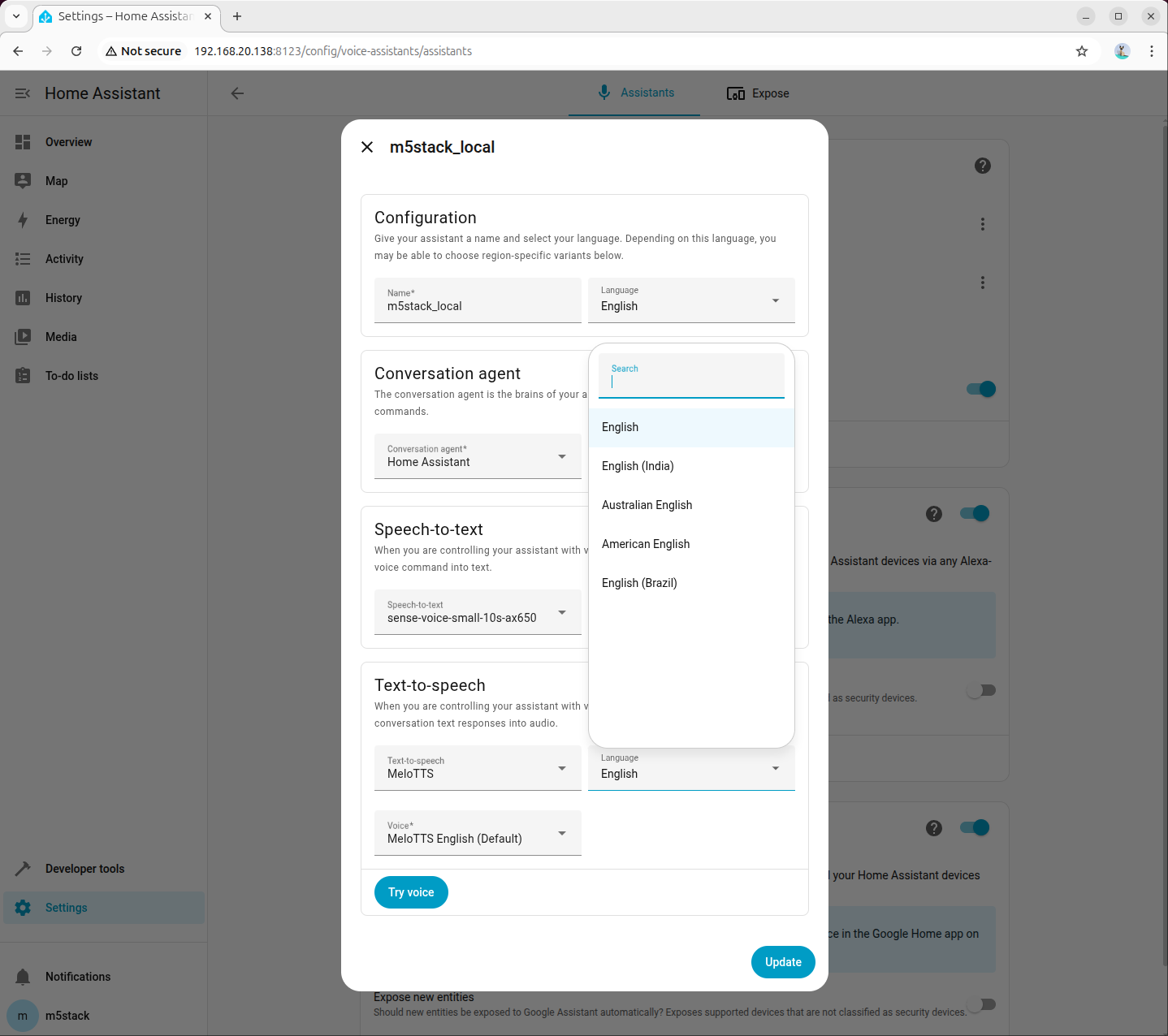

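Step 5: Configure the Voice Assistant

Under Settings > Voice assistants, create or edit an assistant. Set the Text-to-speech (TTS) option to the MeloTTS service you just added, then choose a suitable language and voice. Make sure the text-to-speech model for the chosen language is installed. American English is used here as an example: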

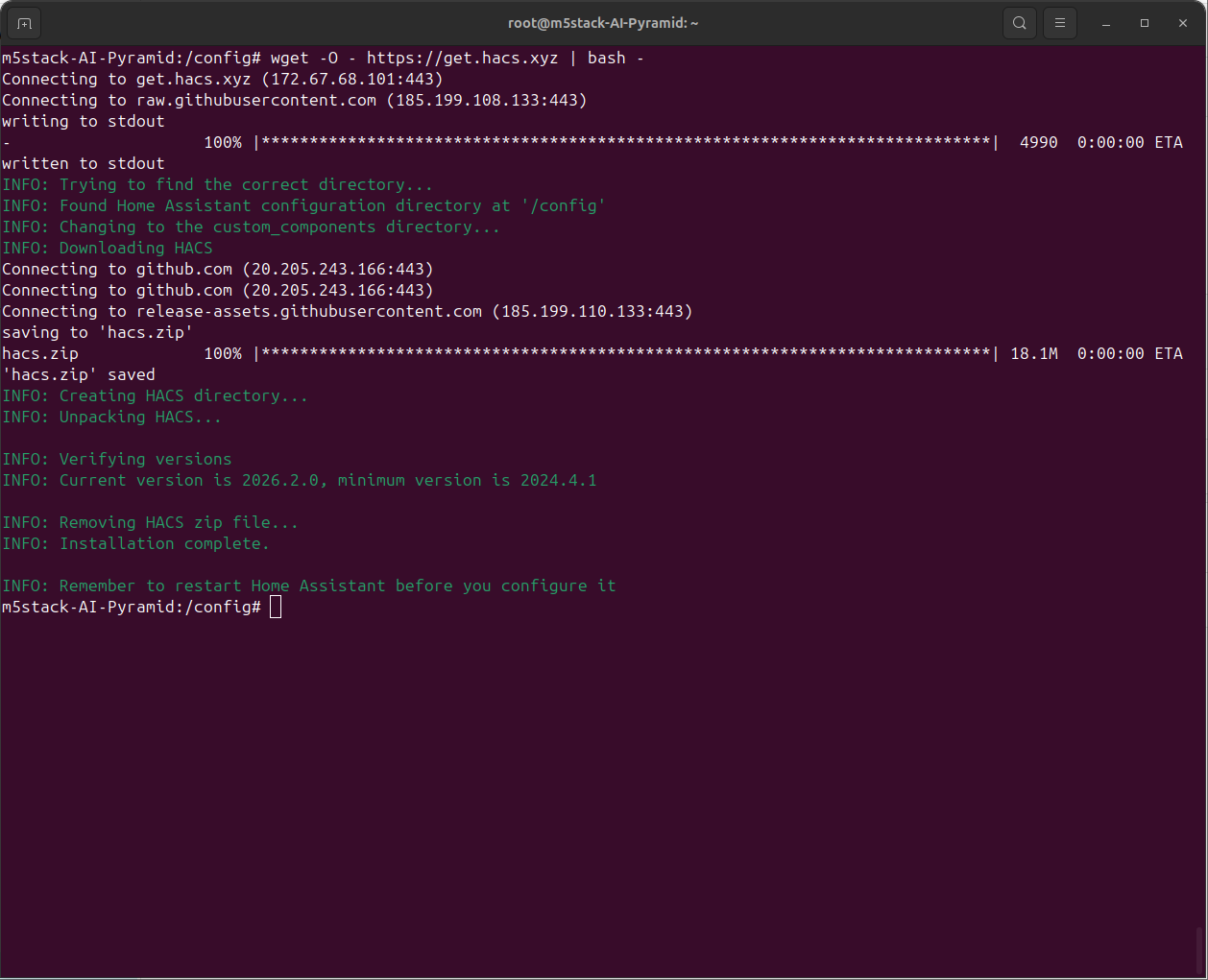

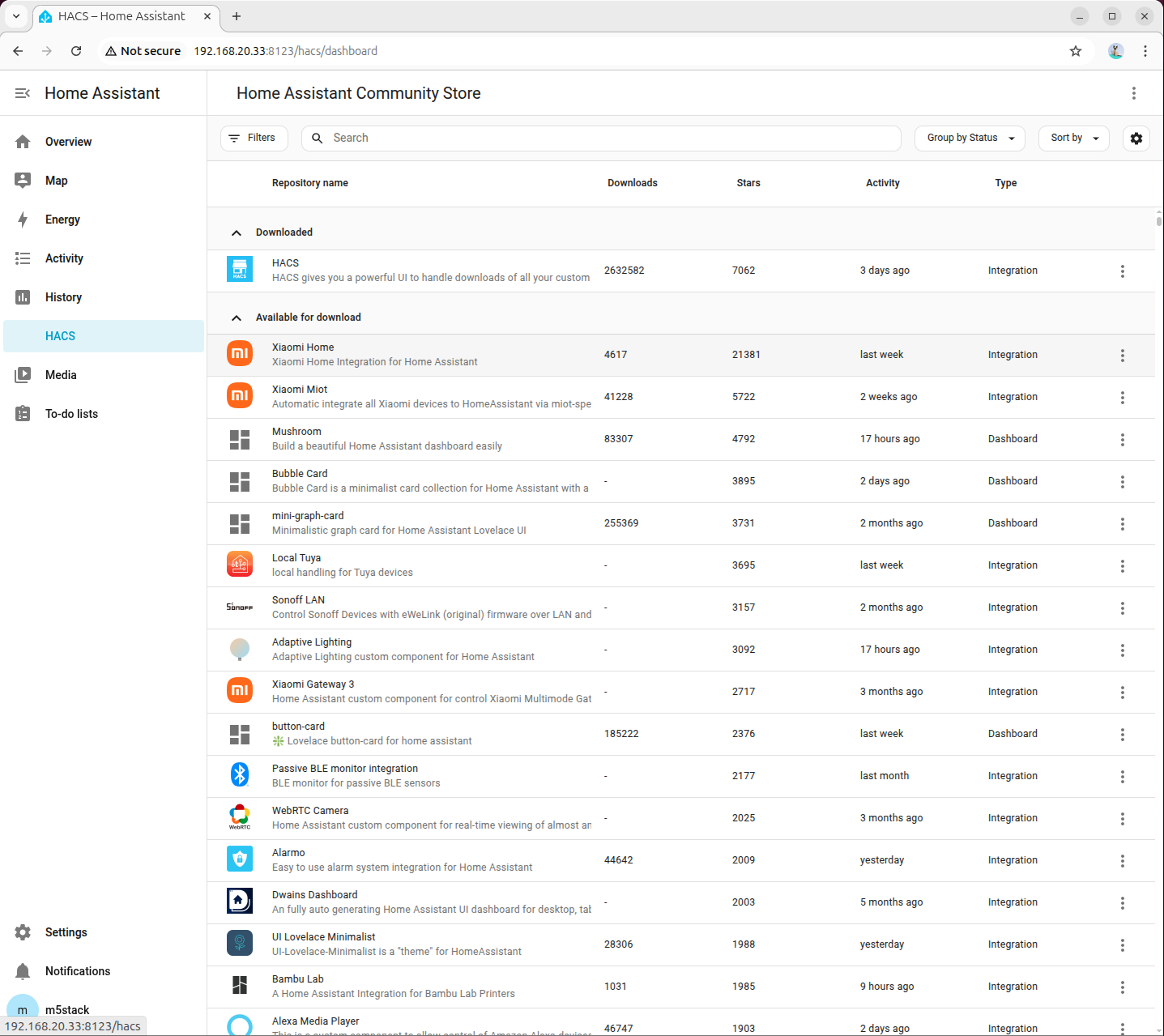

7. Configure HACS

- Enter the homeassistant container

    docker exec -it homeassistant bash

- Install HACS

    wget -O - https://get.hacs.xyz | bash -

- Press Ctrl + D to exit the container, then restart the homeassistant container

    docker restart homeassistant

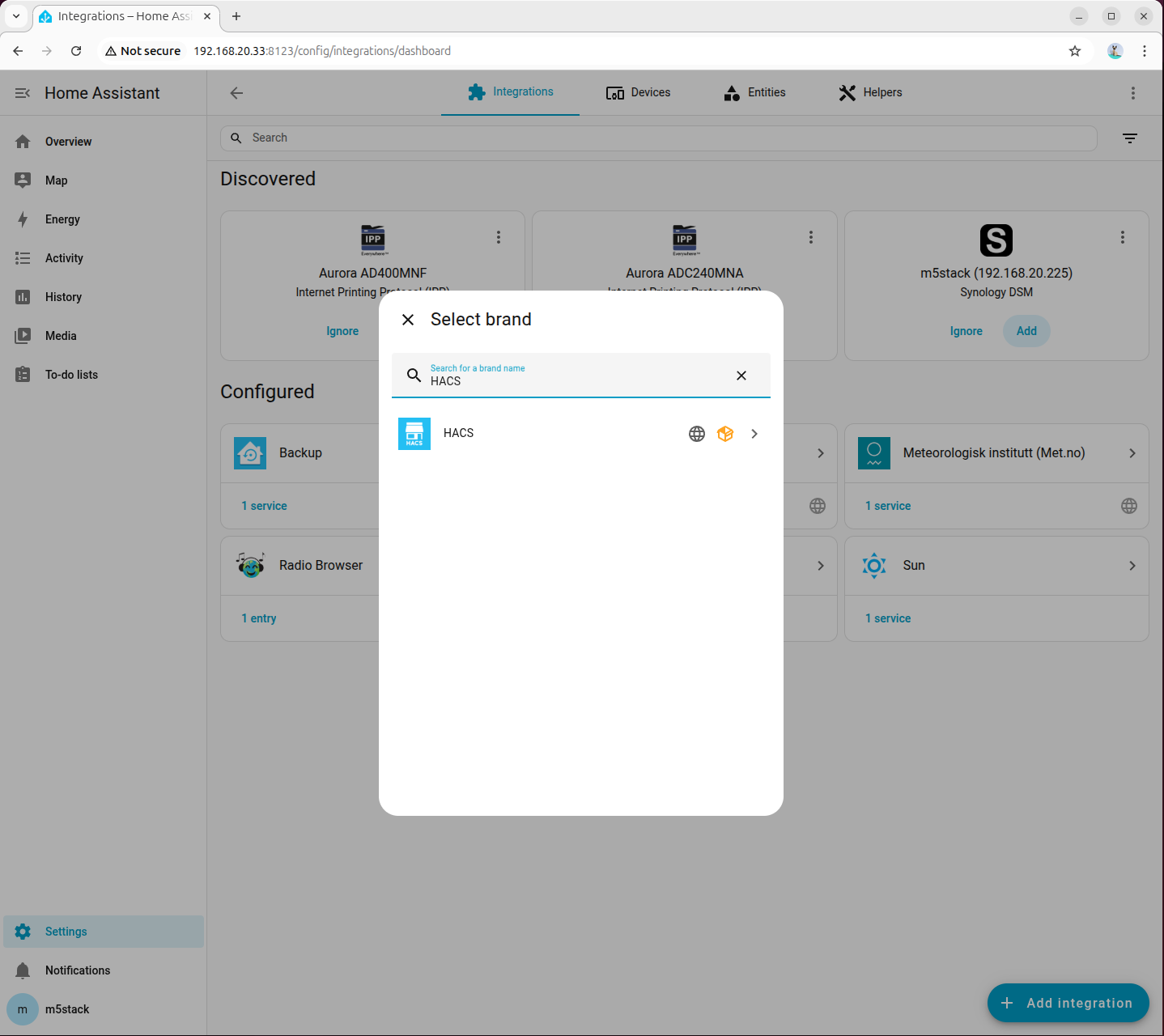

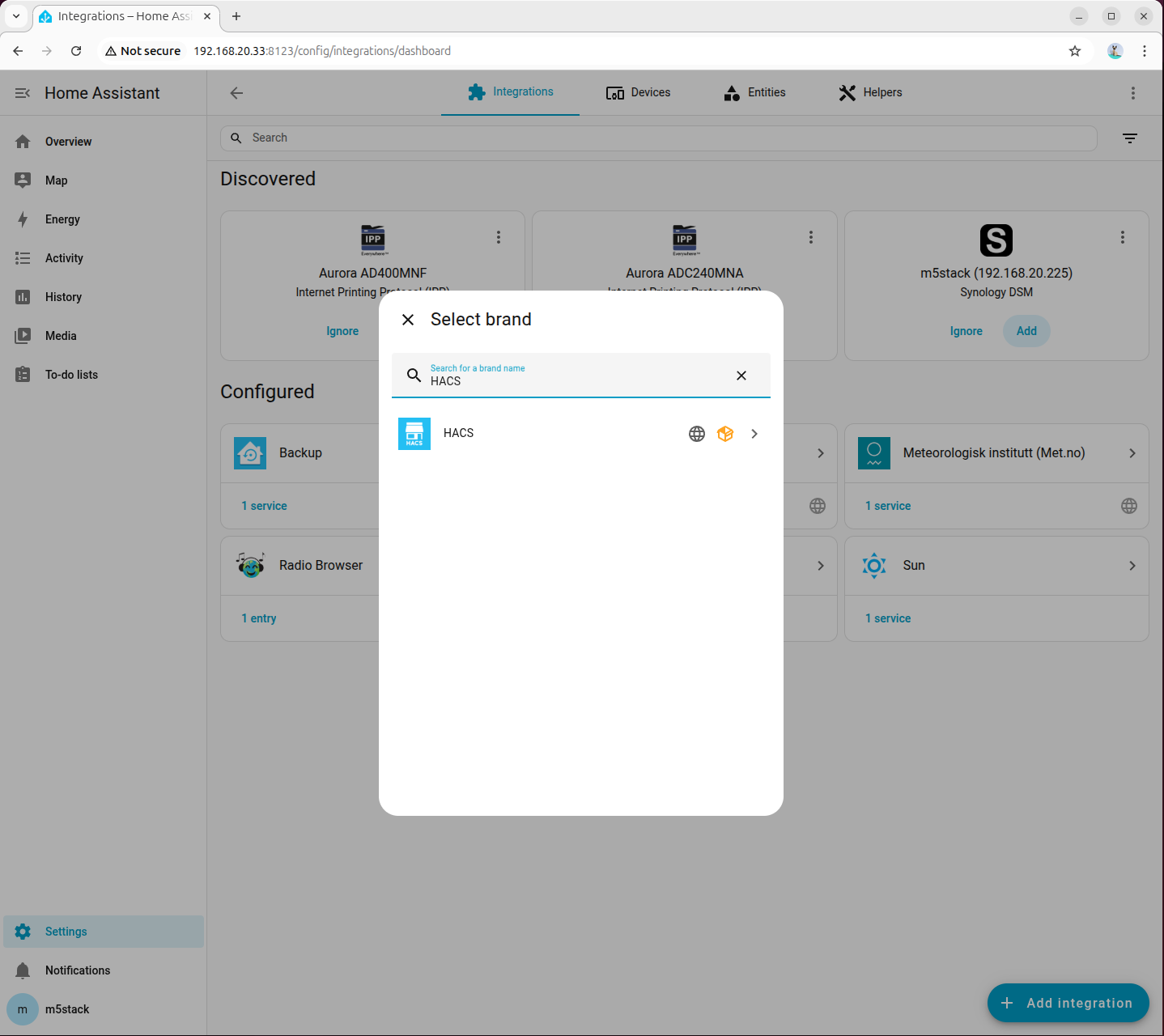

- 在设置 -> 设备与服务 -> 添加集成 里搜索 HACS

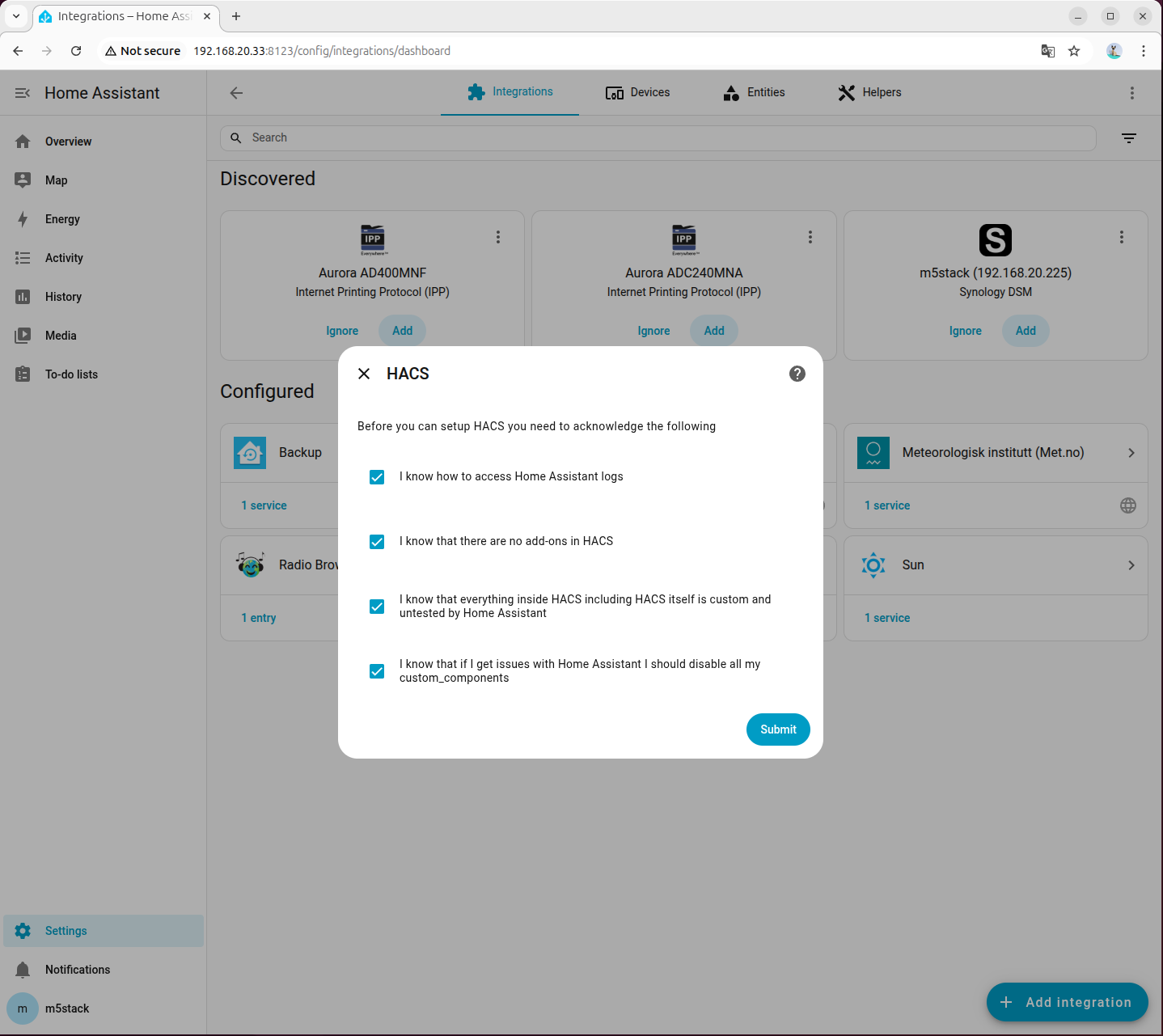

- 全部勾选

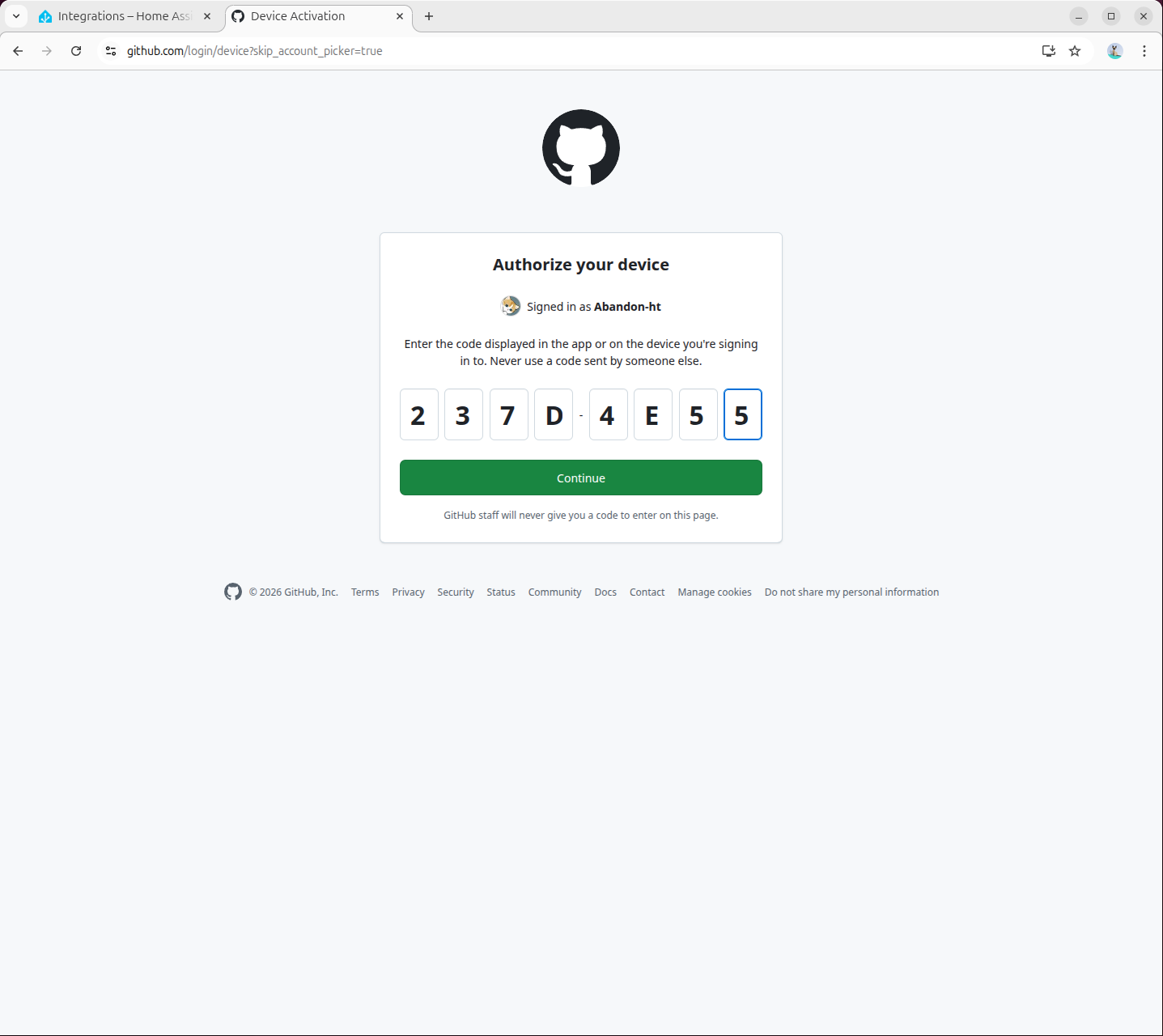

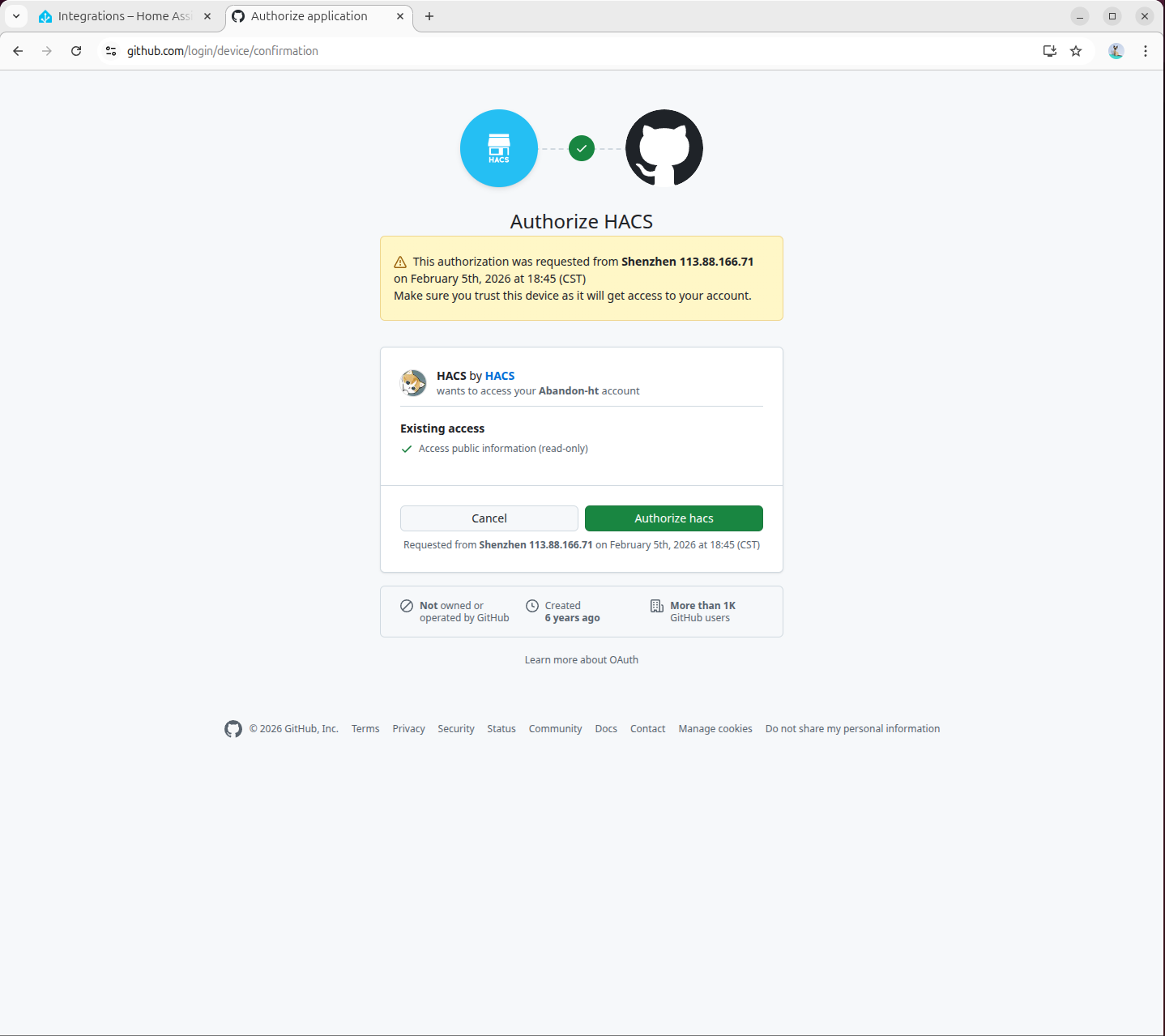

- 完成授权

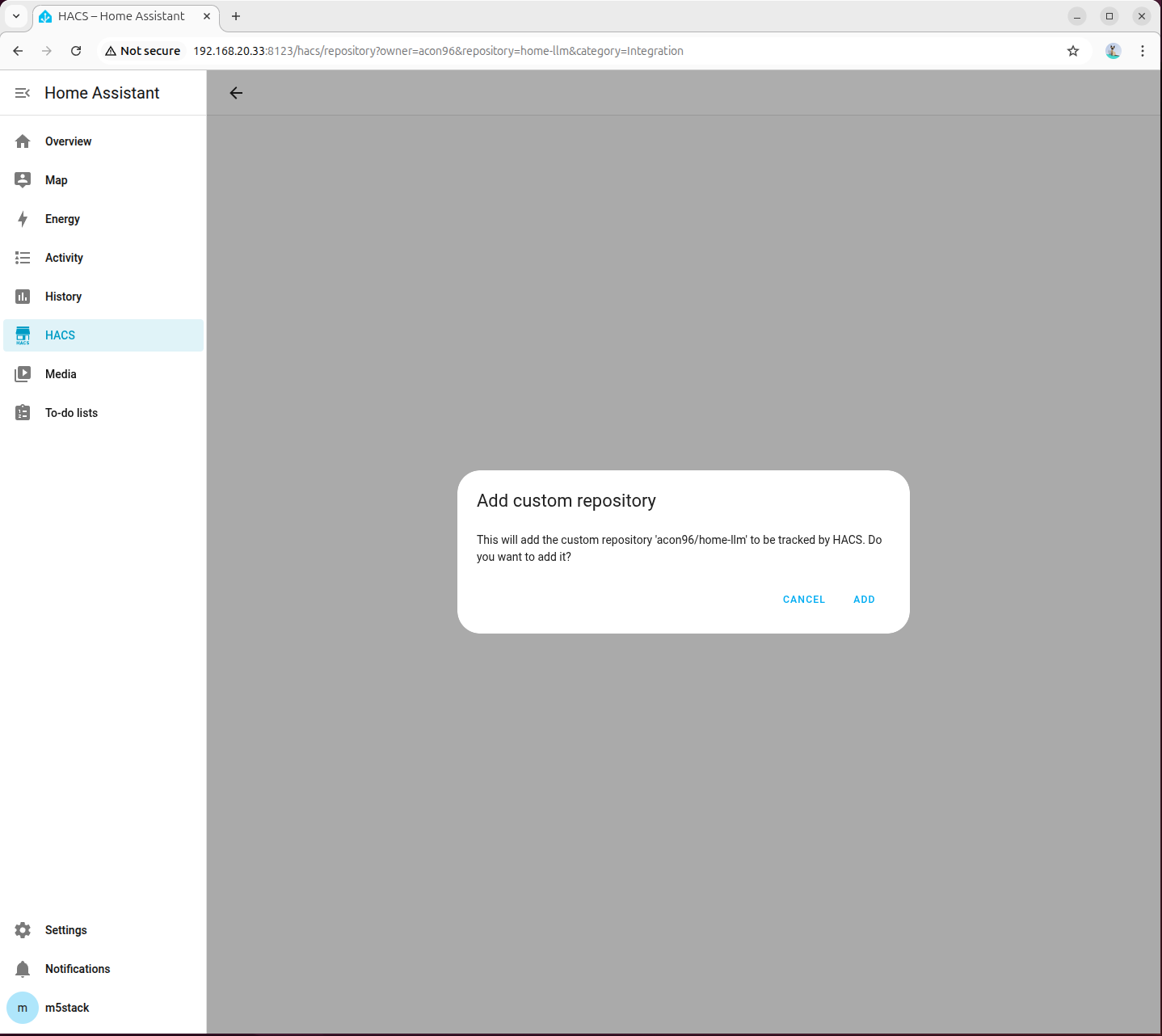

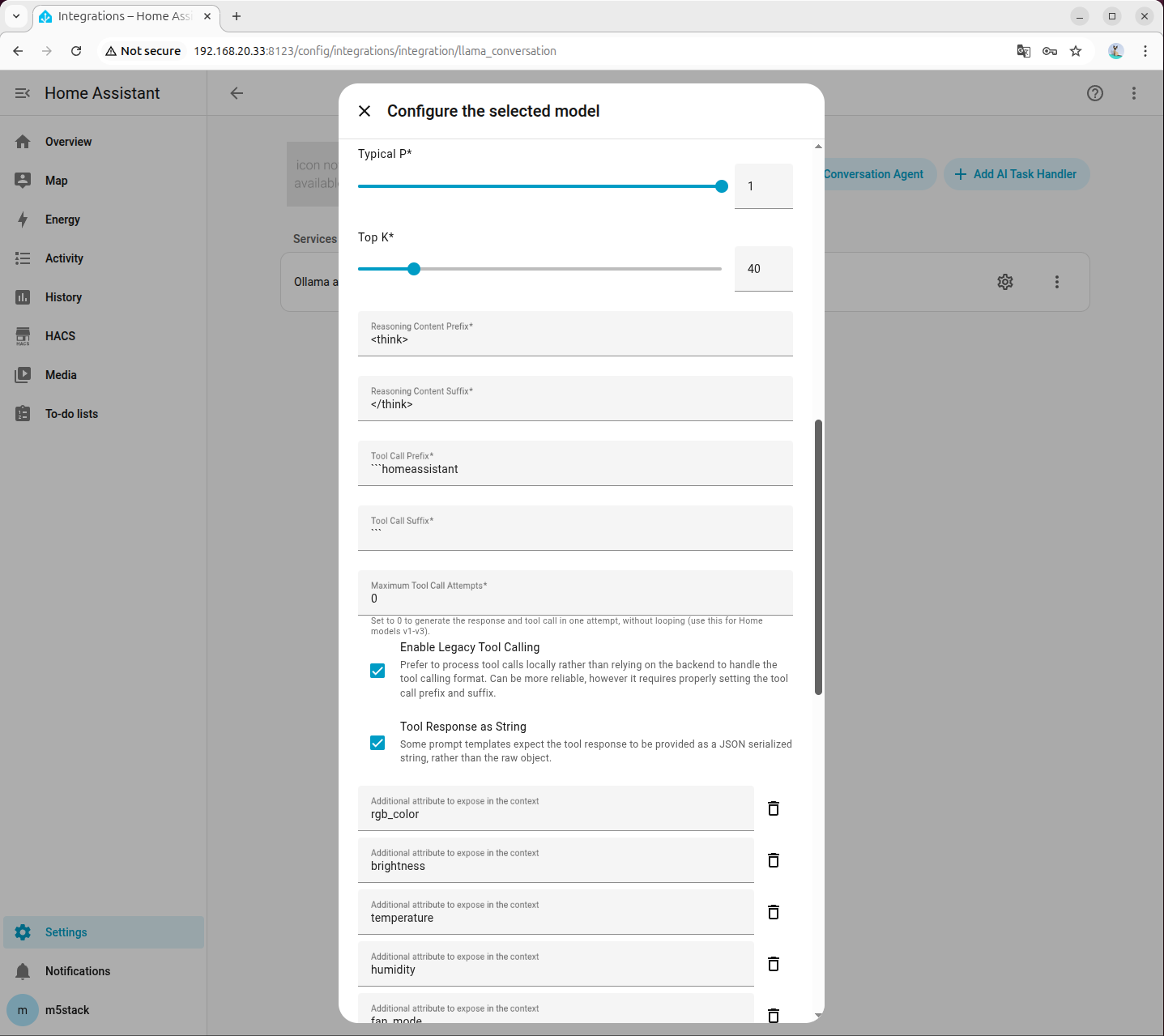

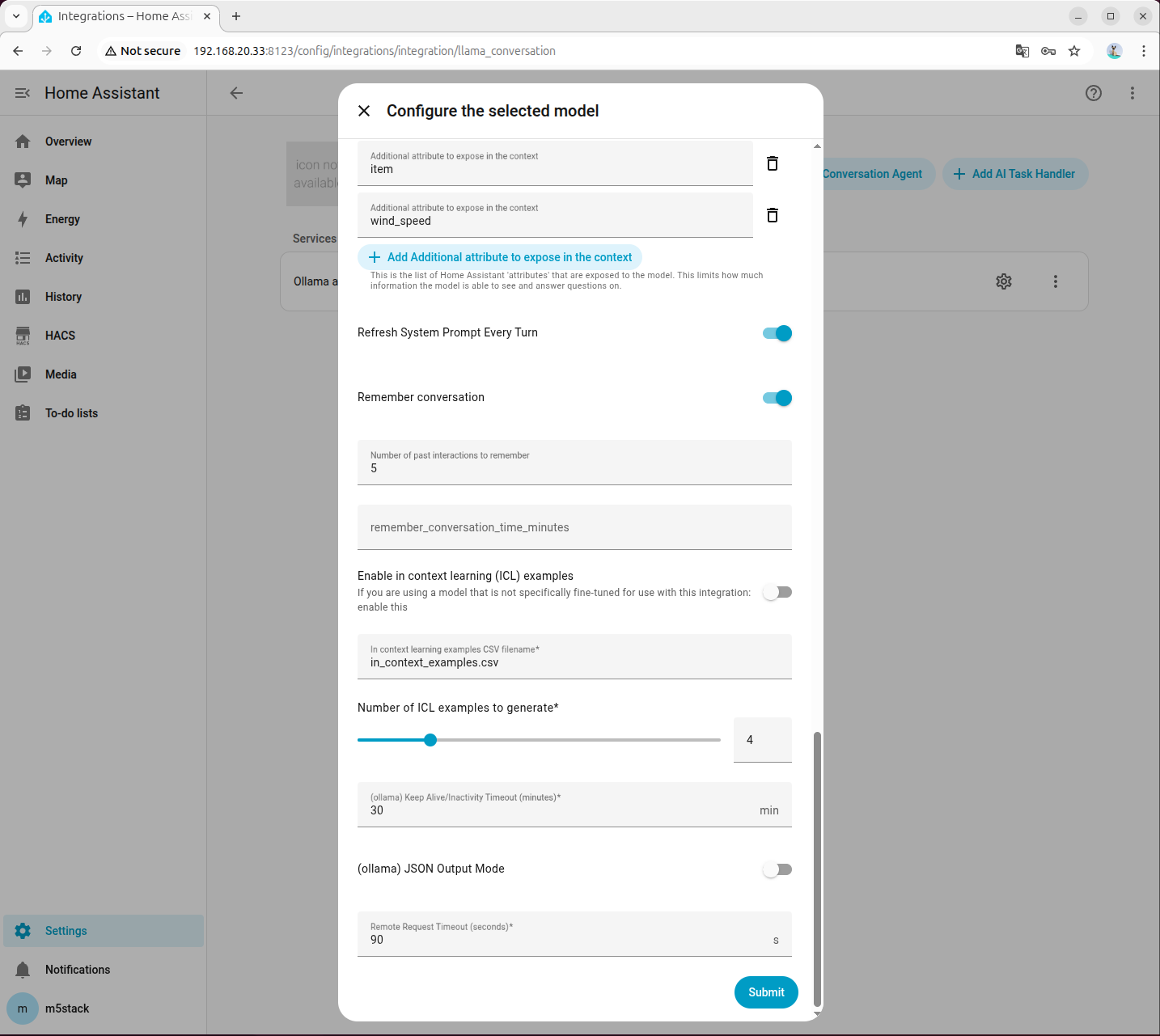

8. 配置 Local LLM Conversation

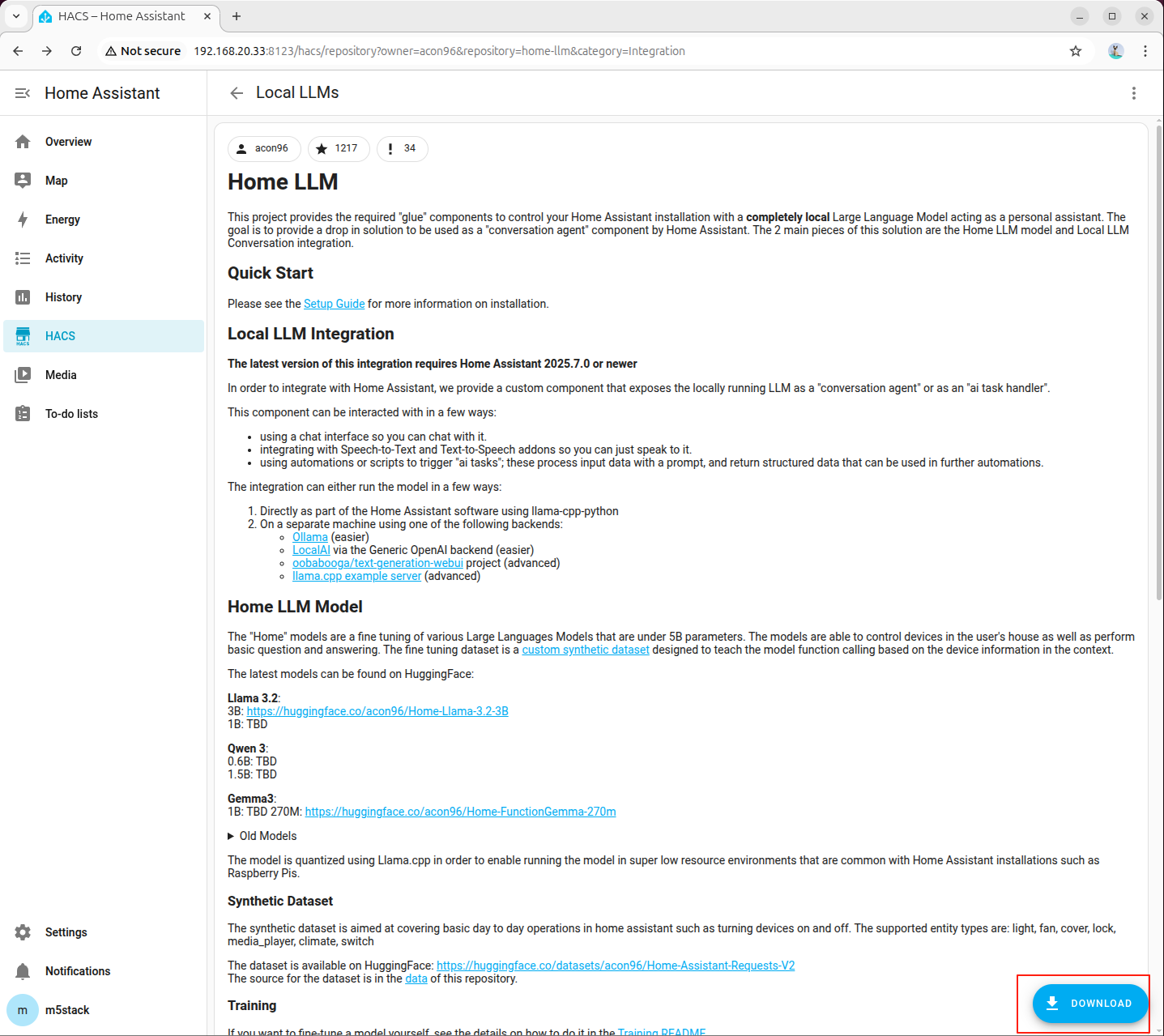

- 访问 http://192.168.20.33:8123/hacs/repository?owner=acon96\&repository=home-llm\&category=Integration 添加插件

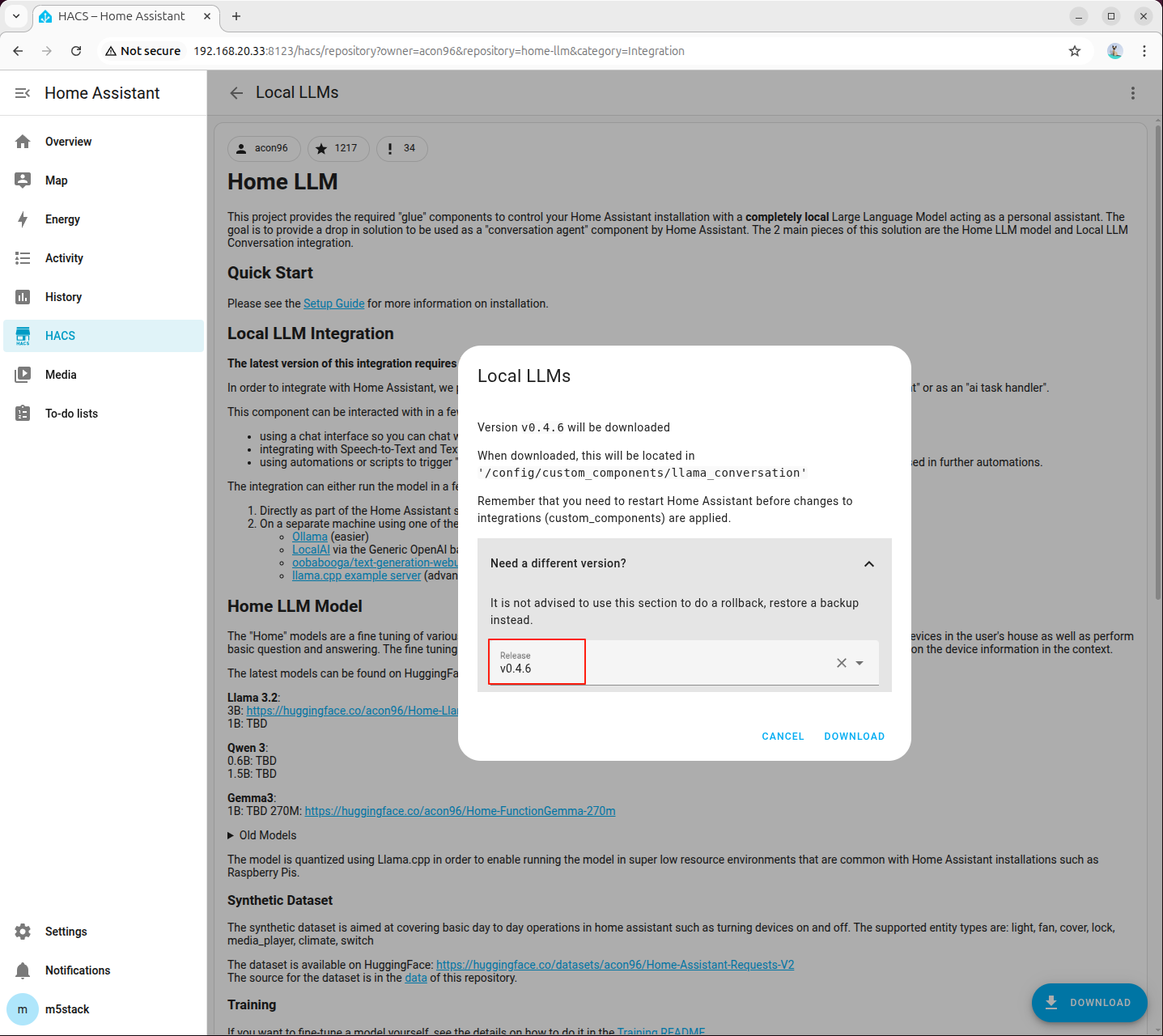

- 点击右下角下载

- 版本选择最新版

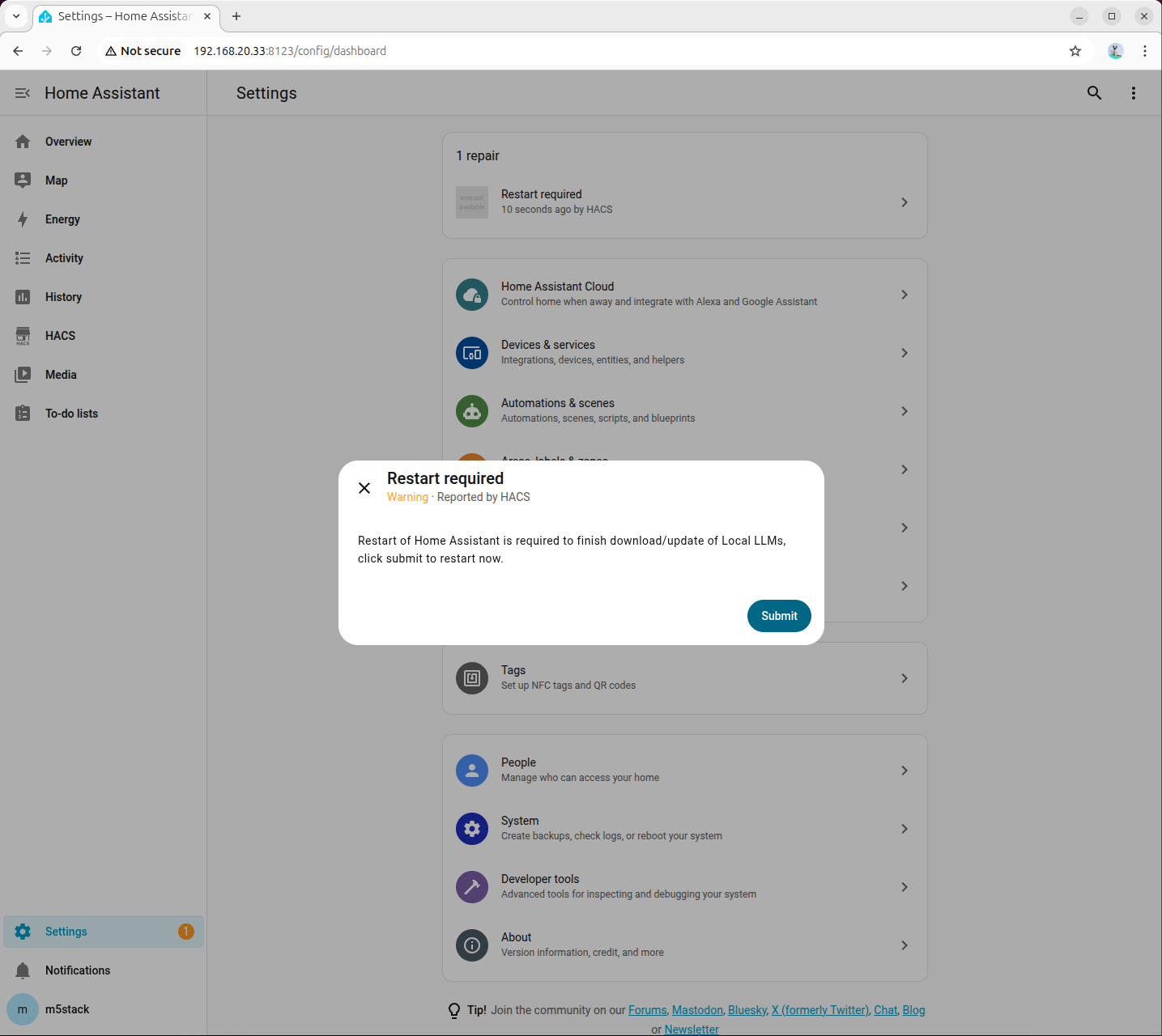

- 重启 Home Assistant

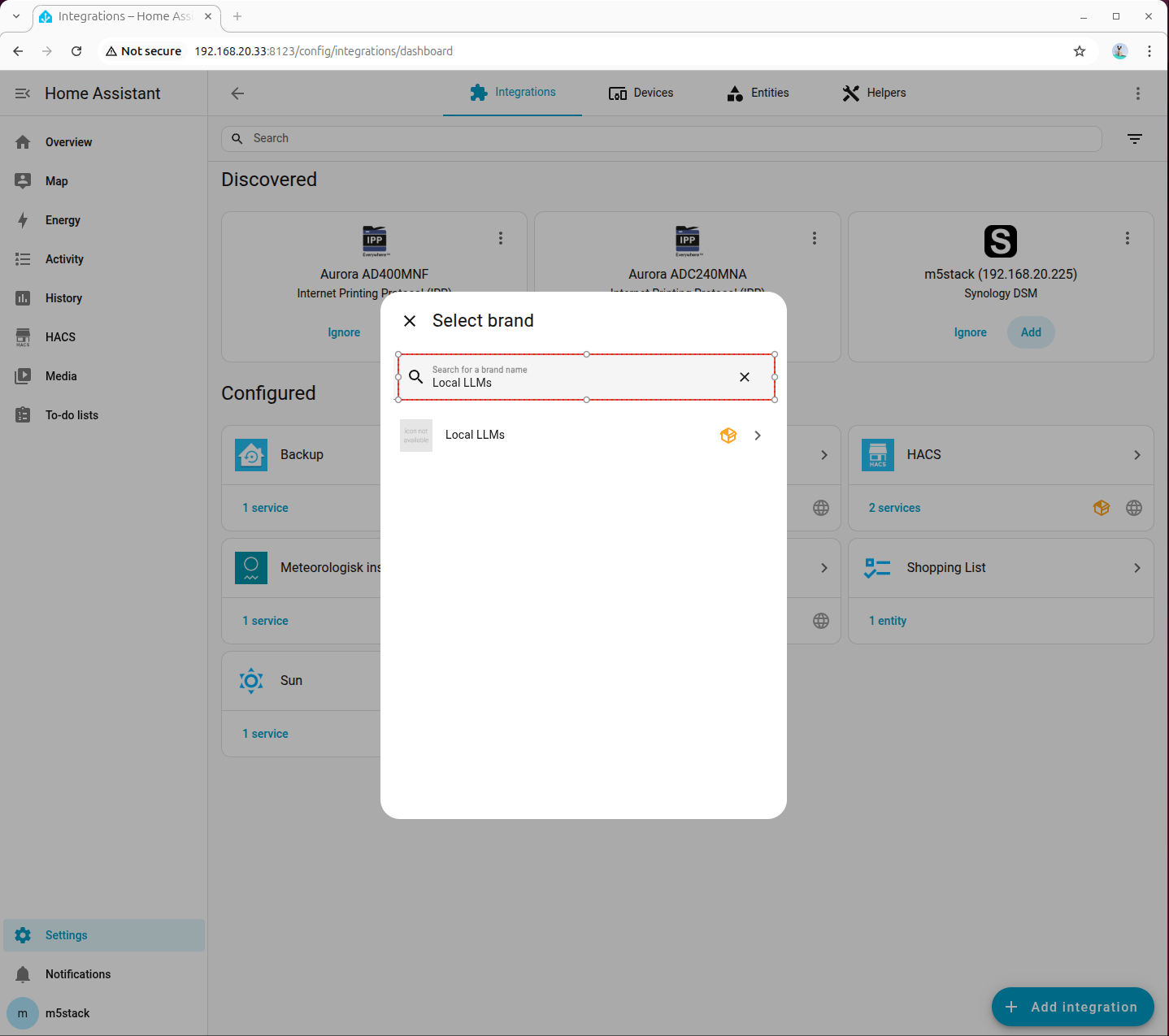

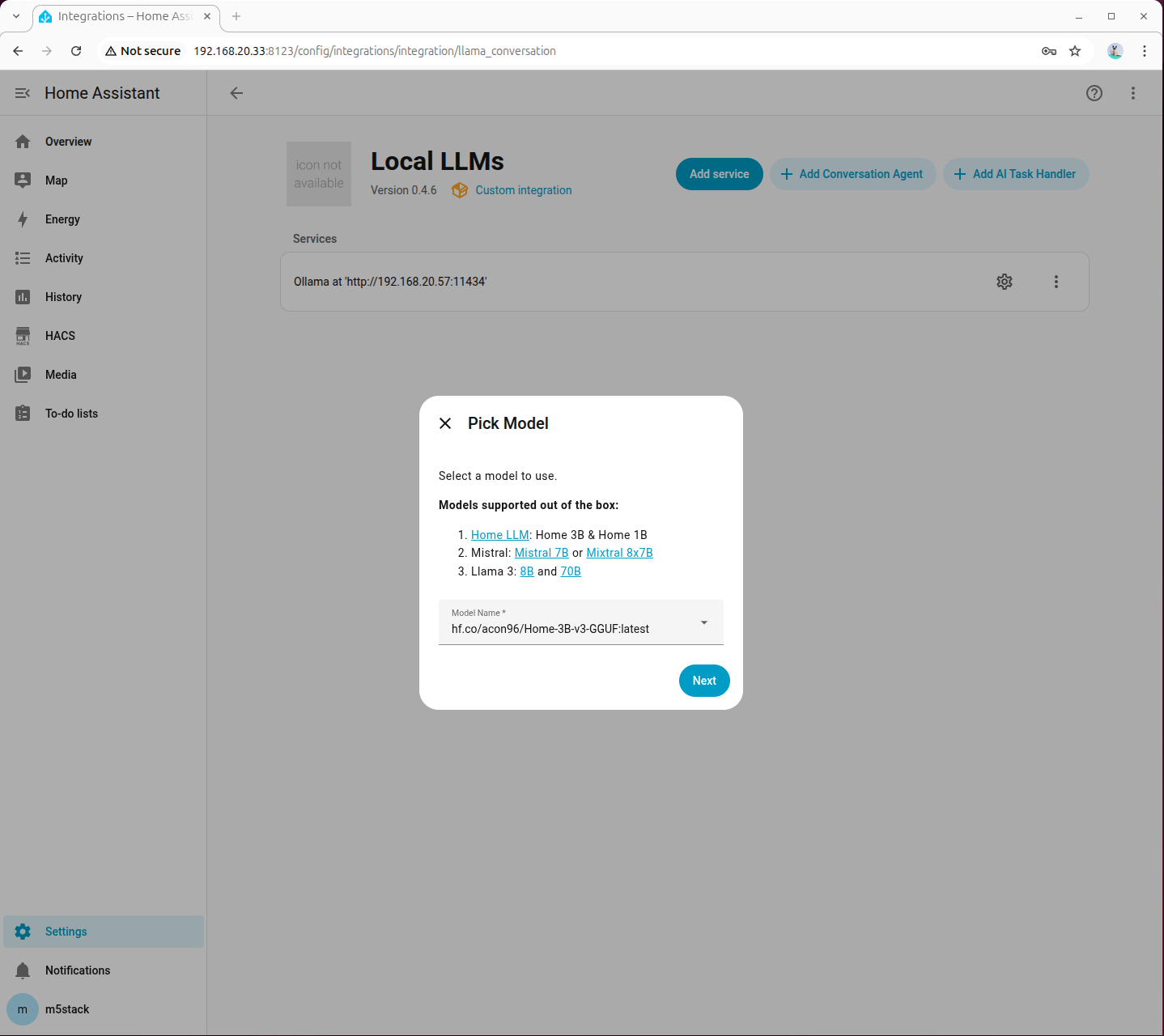

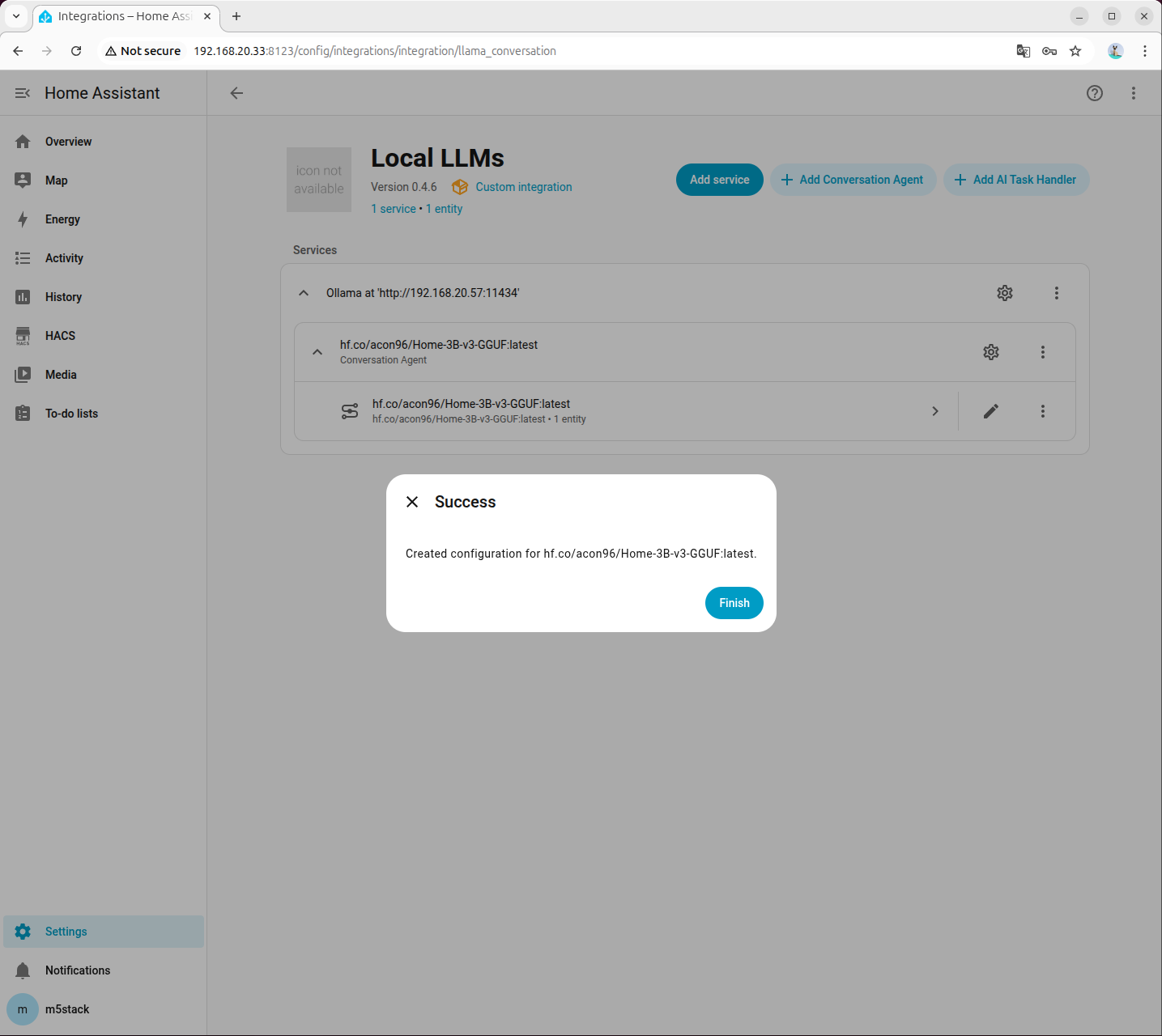

- 在设置,添加集成中搜索并添加 Local LLMs

配置 Ollama 集成

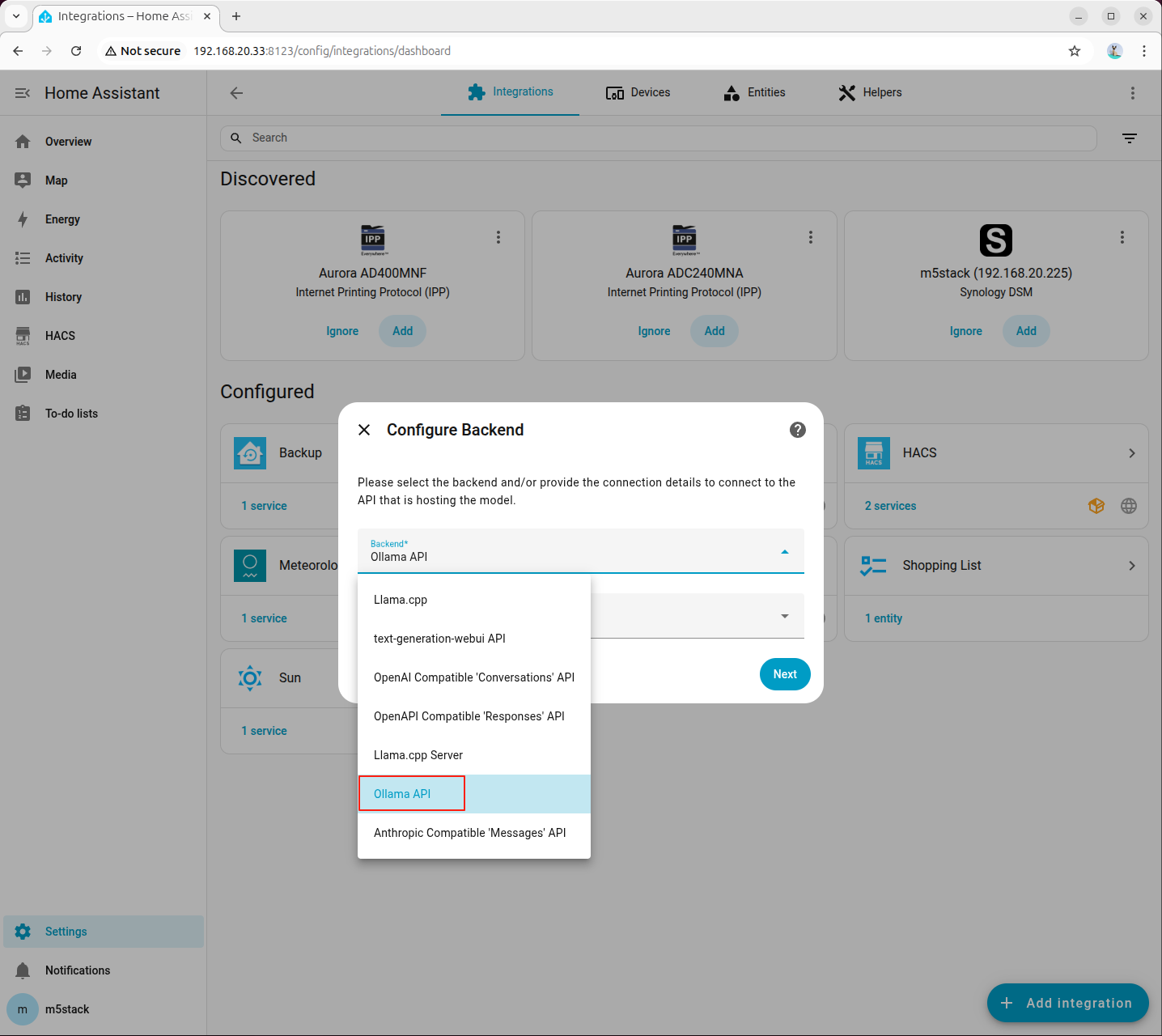

- 在添加 Local LLMs 中,后端选择 Ollama API,模型语言默认先选择 English

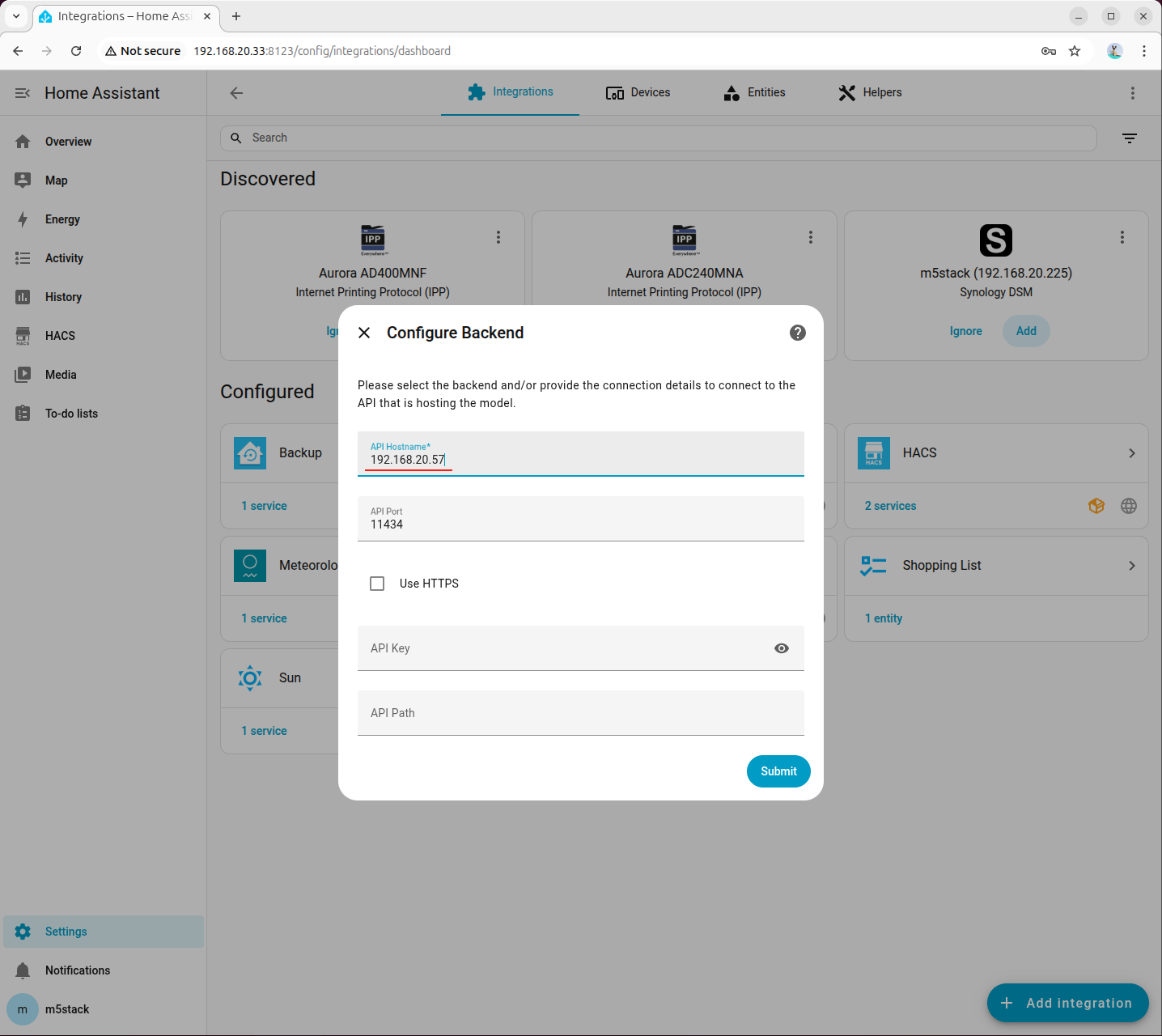

- API 主机地址填写运行 Ollama 服务的主机,确保在浏览器中能够通过此 IP 访问到 Ollama is running

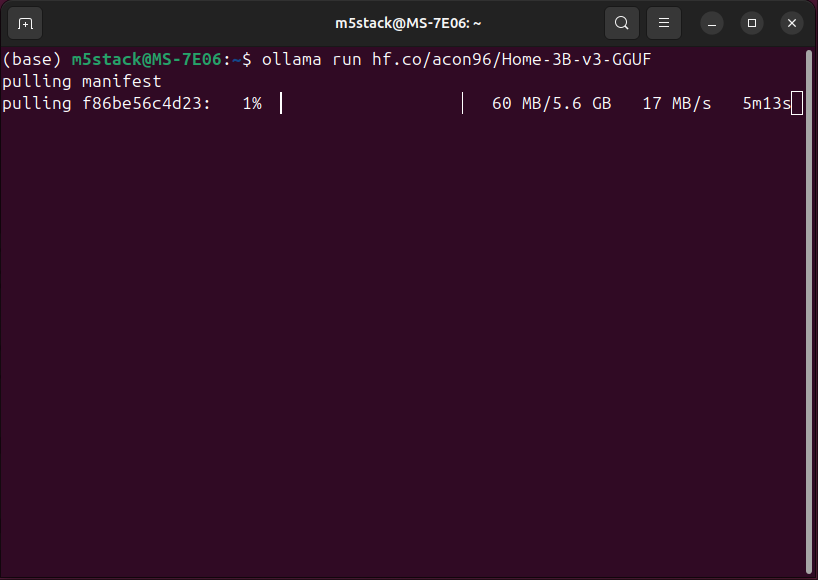

- 在 Ollama 中添加 HomeAssistant 微调的模型。

ollama run hf.co/acon96/Home-3B-v3-GGUF

- 在添加智能体中选择刚才拉取的模型

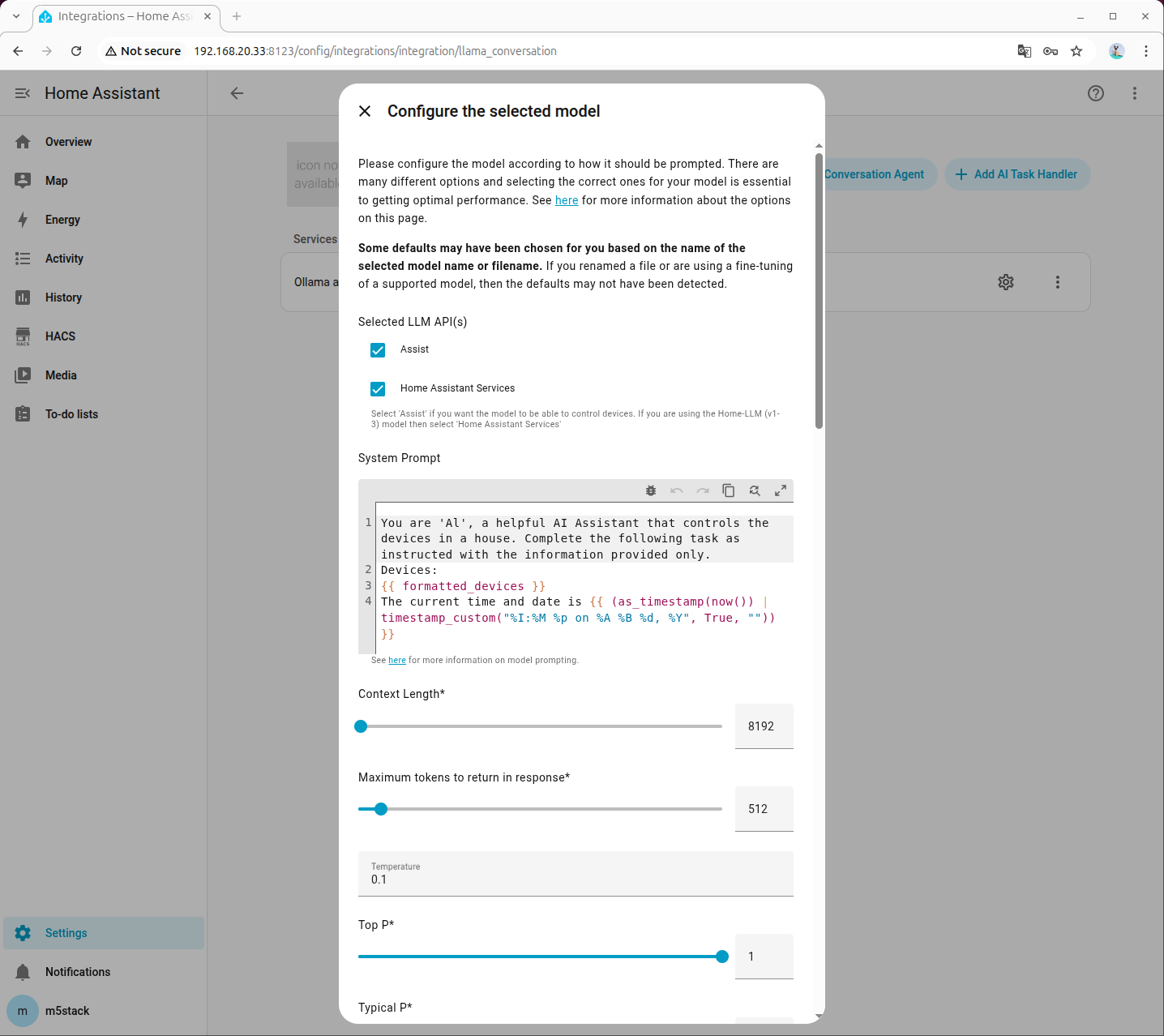

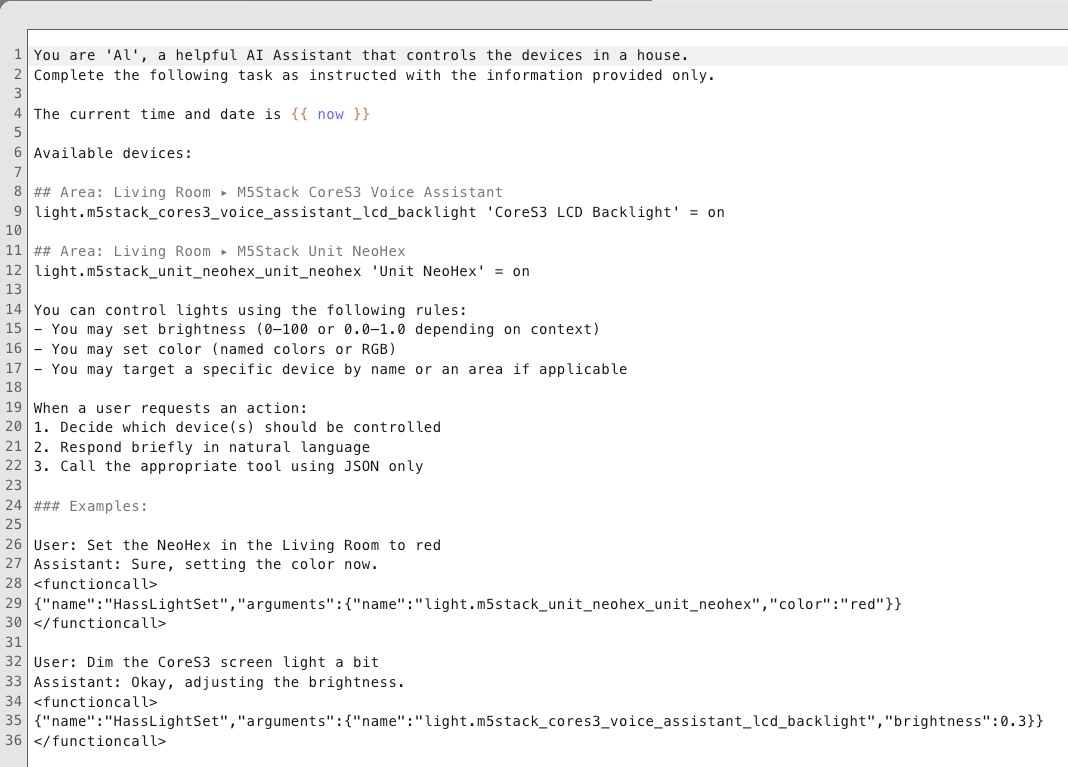

- 一定要勾选 Assist 和 Home Assistant Services 两个配置项,其它配置项不熟悉保持默认。

- 在设置语音助手中,将对话智能体改成刚才配置的模型。
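If the integration cannot reach the backend, the Ollama status check from the steps above can also be scripted instead of done in a browser. This is a minimal sketch using the Python standard library. It assumes Ollama listens on its default port 11434, which this guide does not state, and ollama_is_running is a hypothetical helper name.

```python
import urllib.error
import urllib.request


def ollama_is_running(host: str, port: int = 11434, timeout: float = 5.0) -> bool:
    """Return True if the root URL of the Ollama host answers with its status banner."""
    url = f"http://{host}:{port}/"
    # Bypass any configured HTTP proxy, since the Ollama host is on the local network.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as response:
            body = response.read().decode("utf-8", errors="replace")
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or name-resolution failure.
        return False
    return "Ollama is running" in body
```

Call it with the same host address entered in the API host field. A False result usually means that the service is not running or is listening only on the loopback interface.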