OpenAI API

StackFlow provides an OpenAI-compatible API. Once the StackFlow packages are installed, you can call the on-device models with OpenAI-style clients such as curl, the openai Python library, or ChatBox.

Preparation

- Follow the RaspberryPi & LLM8850 Software Package Acquisition Tutorial to install the following software packages and model package.

```shell
sudo apt install lib-llm llm-sys llm-llm llm-openai-api
sudo apt install llm-model-qwen3-1.7b-int8-ctx-axcl
```

Note

Each time a new model is installed, you need to manually execute sudo systemctl restart llm-openai-api to update the model list.
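After the restart, one way to confirm that a newly installed model is visible is to query the /v1/models endpoint and look for its ID. This is a minimal sketch using only the Python standard library; it assumes the endpoint returns the usual OpenAI list shape ({"data": [{"id": ...}, ...]}), and the helper names model_ids and fetch_models are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000/v1"  # same address as the examples in this guide


def model_ids(payload: dict) -> list[str]:
    """Extract model IDs from an OpenAI-style /v1/models response.

    Assumes the shape {"object": "list", "data": [{"id": ...}, ...]}.
    Entries without an "id" field are skipped.
    """
    return [entry["id"] for entry in payload.get("data", []) if "id" in entry]


def fetch_models(base_url: str = BASE_URL, timeout: float = 5.0) -> dict:
    """GET {base_url}/models and decode the JSON body."""
    req = urllib.request.Request(
        f"{base_url}/models", headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    wanted = "qwen3-1.7B-Int8-ctx-axcl"
    try:
        ids = model_ids(fetch_models())
    except OSError as exc:  # urllib.error.URLError is a subclass of OSError
        print(f"Could not reach the API server: {exc}")
    else:
        if wanted in ids:
            print(f"{wanted} is available")
        else:
            print(f"{wanted} is not listed; try: sudo systemctl restart llm-openai-api")
```

The network call is kept separate from the parsing so the ID check can be reused on any saved /v1/models response.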

Curl Call

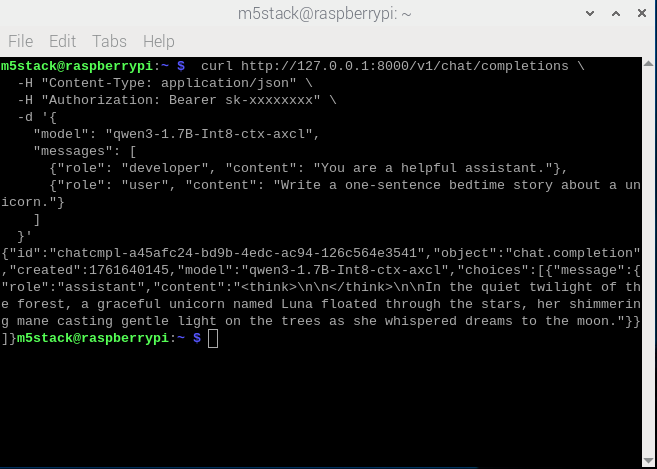

List the models currently exposed by the server:

```shell
curl http://127.0.0.1:8000/v1/models \
  -H "Content-Type: application/json"
```

Send a chat completion request:

```shell
curl http://127.0.0.1:8000/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer sk-xxxxxxxx" \
  -d '{
    "model": "qwen3-1.7B-Int8-ctx-axcl",
    "messages": [
      {"role": "developer", "content": "You are a helpful home assistant."},
      {"role": "user", "content": "Write a one-sentence bedtime story about a unicorn."}
    ]
  }'
```
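The same chat request can also be sent from Python without installing the openai package. This is a sketch under the assumption that the server returns the standard OpenAI chat-completion layout (choices[0].message.content); build_chat_body, extract_reply and chat are hypothetical helper names, and sk-xxxxxxxx is the placeholder key from the curl example.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000/v1"
MODEL = "qwen3-1.7B-Int8-ctx-axcl"


def build_chat_body(user_text: str, system_text: str = "You are a helpful home assistant.") -> dict:
    """Build the request body with the same fields as the curl example."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "developer", "content": system_text},
            {"role": "user", "content": user_text},
        ],
    }


def extract_reply(response: dict) -> str:
    """Return the assistant text, assuming the standard choices[0].message.content layout."""
    return response["choices"][0]["message"]["content"]


def chat(user_text: str, api_key: str = "sk-xxxxxxxx", timeout: float = 120.0) -> str:
    """POST to /chat/completions and return the reply text.

    The timeout is deliberately generous because on-device generation can be slow.
    """
    data = json.dumps(build_chat_body(user_text)).encode("utf-8")
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=data,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return extract_reply(json.load(resp))


if __name__ == "__main__":
    try:
        print(chat("Write a one-sentence bedtime story about a unicorn."))
    except OSError as exc:  # covers connection errors and HTTP error statuses
        print(f"Request failed: {exc}")
```

Separating body construction and reply extraction from the HTTP call keeps the JSON handling easy to check without a running server.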

Python Call

from openai import OpenAI

client = OpenAI(

api_key="sk-",

base_url="http://127.0.0.1:8000/v1"

)

client.models.list()

print(client.models.list())from openai import OpenAI

client = OpenAI(

api_key="sk-",

base_url="http://127.0.0.1:8000/v1"

)

completion = client.chat.completions.create(

model="qwen3-1.7B-Int8-ctx-axcl",

messages=[

{"role": "developer", "content": "You are a helpful home assistant."},

{"role": "user", "content": "Turn on the light!"}

]

)

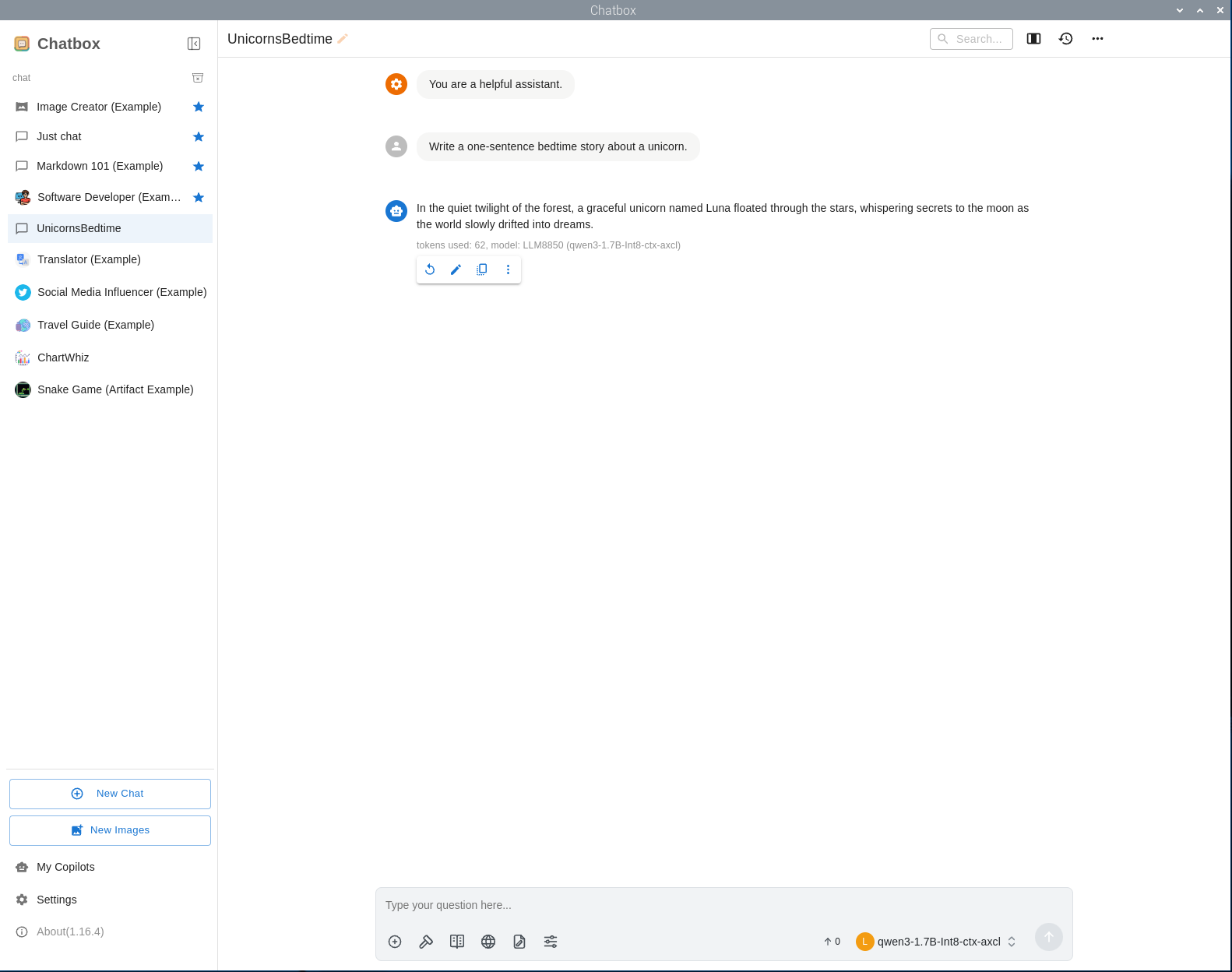

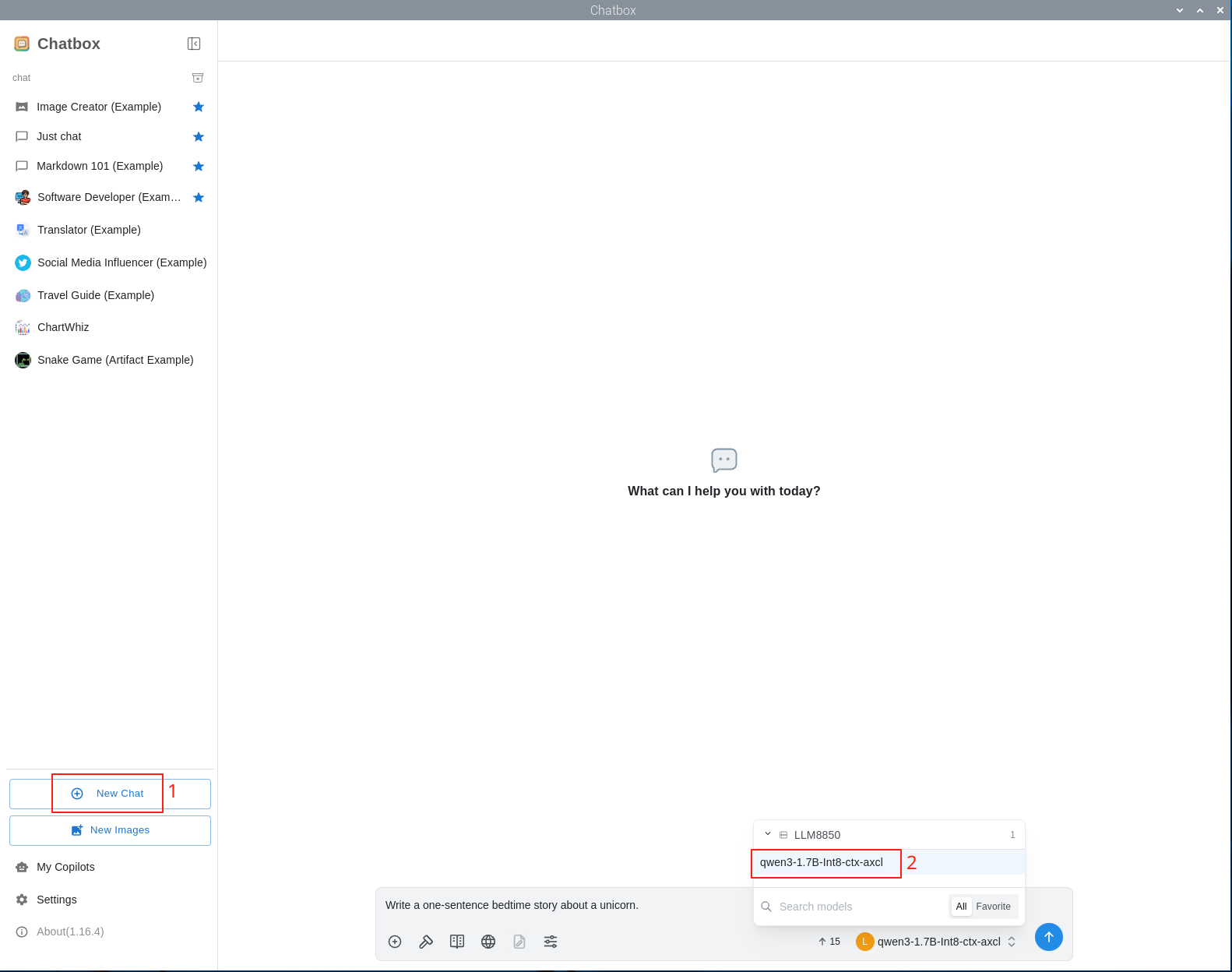

print(completion.choices[0].message)ChatBox Call

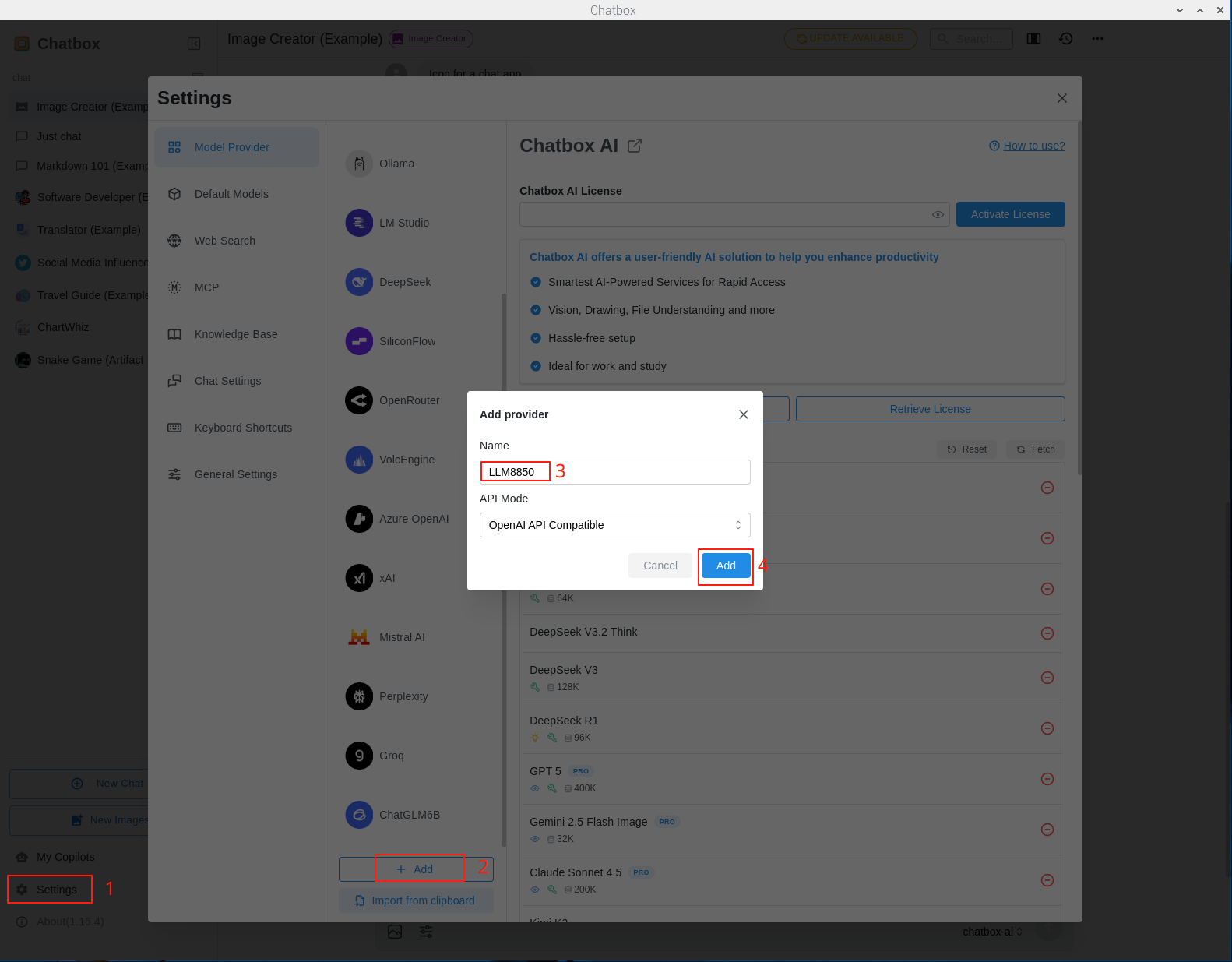

Download and install ChatBox.

Open Settings and add a model provider.

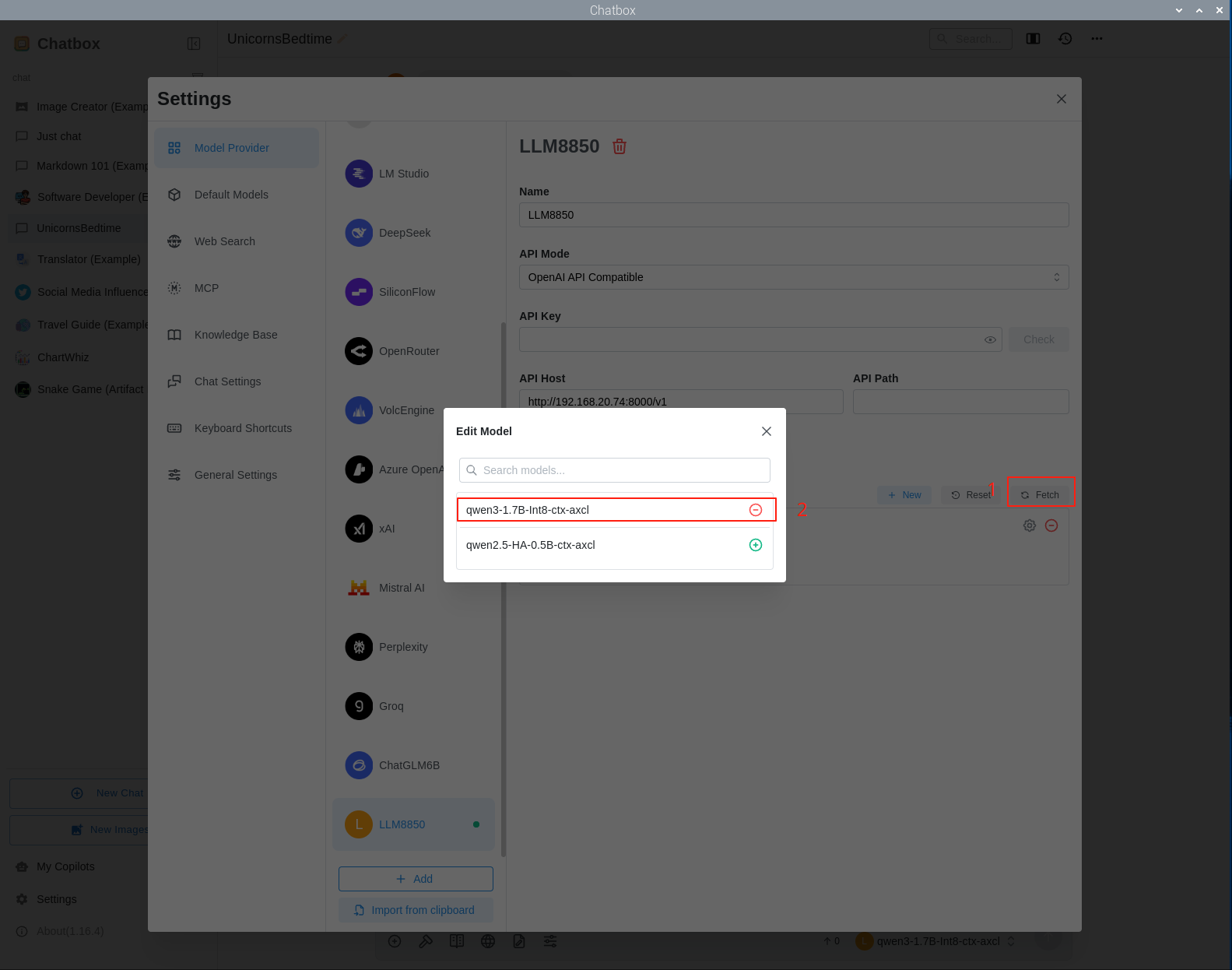

Fill in API Host with the Raspberry Pi's IP address and the API path, then retrieve the installed models and add them.

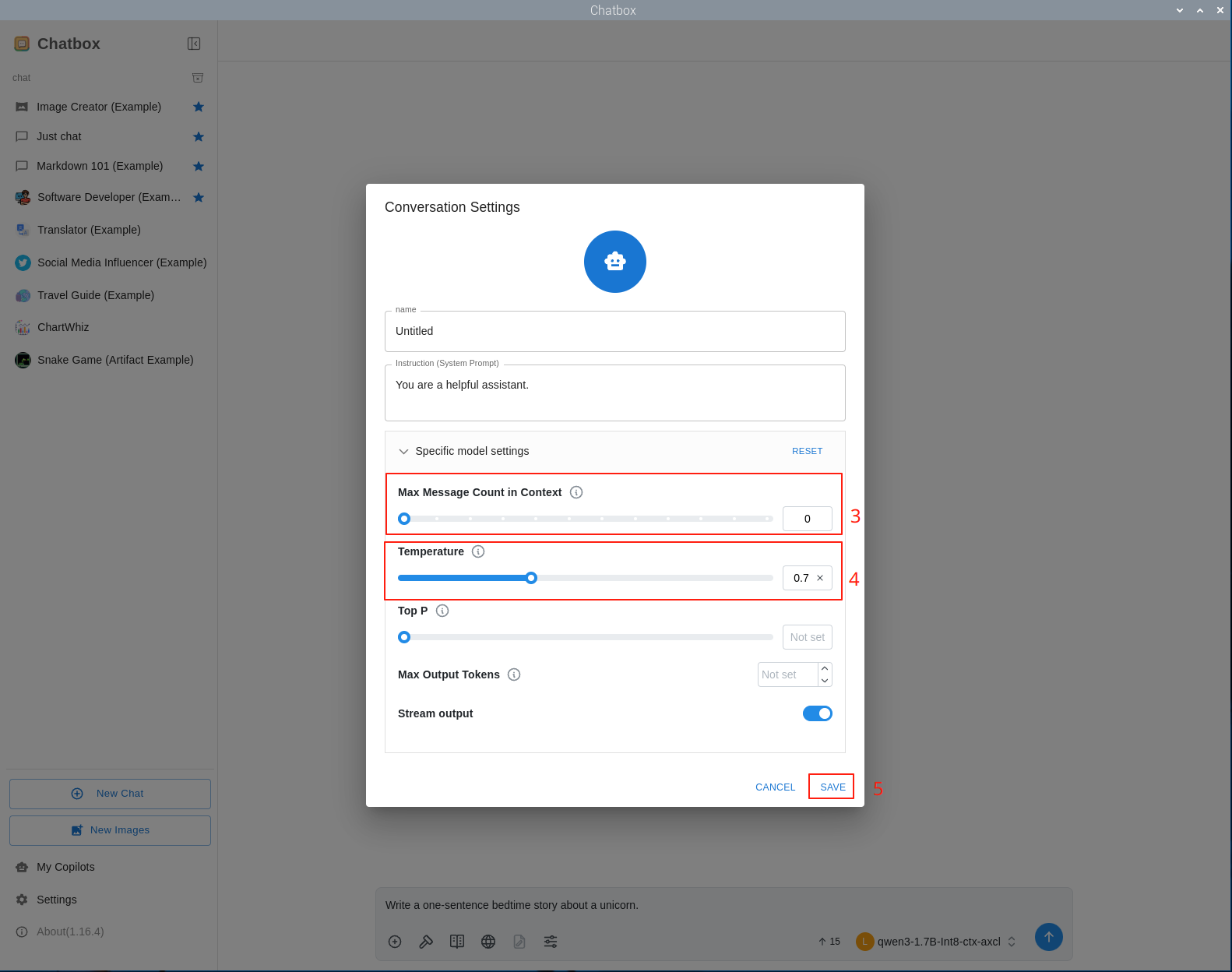

Create a new chat and select the qwen3-1.7B-Int8-ctx-axcl model provided by the LLM8850.

Set the maximum context message length to 0.

A System Prompt can also be set.
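In the OpenAI chat-completions format, conversation history travels in the messages list of every request, so a client decides how many earlier turns to resend. The sketch below illustrates what a maximum context message length of 0 would mean in API terms, assuming ChatBox keeps the System Prompt and the newest user message and drops the earlier turns. trim_history is a hypothetical helper, not part of ChatBox or StackFlow.

```python
def trim_history(messages: list[dict], max_context: int) -> list[dict]:
    """Keep system/developer prompts, at most `max_context` earlier turns,
    and the newest message (hypothetical illustration of a context-length setting)."""
    if not messages:
        return []
    earlier = messages[:-1]
    prompts = [m for m in earlier if m["role"] in ("system", "developer")]
    history = [m for m in earlier if m["role"] not in ("system", "developer")]
    # Guard against history[-0:], which would return the whole list.
    kept = history[-max_context:] if max_context > 0 else []
    return prompts + kept + [messages[-1]]


if __name__ == "__main__":
    conversation = [
        {"role": "system", "content": "You are a helpful home assistant."},
        {"role": "user", "content": "Turn on the light!"},
        {"role": "assistant", "content": "The light is on."},
        {"role": "user", "content": "Now turn it off."},
    ]
    # With 0, only the system prompt and the latest user message remain.
    print(trim_history(conversation, 0))
```

Keeping the prompt separate from the trimmed turns means the System Prompt still applies even when no earlier messages are sent.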