lcm-lora-sd

- Manually download the model and copy it to the Raspberry Pi 5, or pull the model repository with the following command.

Tip

If git lfs is not installed, set it up first by following the git lfs installation instructions.
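A quick way to confirm that both tools are on the PATH before cloning is sketched below. It uses only the Python standard library; the helper name `missing_tools` is hypothetical, and the sketch assumes git lfs is installed under its usual executable name `git-lfs`.

```python
import shutil


def missing_tools(tools=("git", "git-lfs")):
    """Return the names in `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        # Install whatever is listed here before running git clone.
        print("Missing:", ", ".join(missing))
    else:
        print("git and git-lfs were both found on PATH.")
```

An empty result means both executables were located; it does not verify that `git lfs install` has been run for the current user.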

git clone https://huggingface.co/AXERA-TECH/lcm-lora-sdv1-5

File Description:

m5stack@raspberrypi:~/rsp/lcm-lora-sdv1-5 $ ls -lh

total 100K

drwxrwxr-x 2 m5stack m5stack 4.0K Aug 11 17:10 asserts

-rw-rw-r-- 1 m5stack m5stack 0 Aug 11 17:09 config.json

-rw-rw-r-- 1 m5stack m5stack 1.2K Aug 11 17:09 Disclaimer.md

-rw-rw-r-- 1 m5stack m5stack 1.5K Aug 11 17:09 LICENSE

drwxrwxr-x 4 m5stack m5stack 4.0K Aug 11 17:14 models

-rw-rw-r-- 1 m5stack m5stack 5.7K Aug 11 17:09 README.md

-rw-rw-r-- 1 m5stack m5stack 117 Aug 11 17:09 requirements.txt

-rw-rw-r-- 1 m5stack m5stack 23K Aug 11 17:09 run_img2img_axe_infer.py

-rw-rw-r-- 1 m5stack m5stack 23K Aug 11 17:09 run_img2img_onnx_infer.py

-rw-rw-r-- 1 m5stack m5stack 7.8K Aug 11 17:09 run_txt2img_axe_infer_new.py

-rw-rw-r-- 1 m5stack m5stack 7.3K Aug 11 17:09 run_txt2img_axe_infer.py

-rw-rw-r-- 1 m5stack m5stack 7.4K Aug 11 17:09 run_txt2img_onnx_infer.py

- Create a virtual environment

python -m venv sd

- Activate the virtual environment

source sd/bin/activate

- Install dependencies

sudo apt install cmake -y

pip install -r requirements.txt

pip install https://github.com/AXERA-TECH/pyaxengine/releases/download/0.1.3.rc2/axengine-0.1.3-py3-none-any.whl

- Text-to-Image
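Before running the script, it may be worth confirming that the package installed from the wheel is visible inside the `sd` virtual environment. The following is a minimal sketch using only the standard library. The module name `axengine` is assumed from the wheel filename, and `is_importable` is a hypothetical helper, not part of any library.

```python
import importlib.util


def is_importable(module_name):
    """Return True if a top-level module can be located in the active environment.

    The module is looked up but not imported, so no device is touched.
    """
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # "axengine" is an assumption based on the wheel name axengine-0.1.3-py3-none-any.whl.
    for name in ("axengine",):
        status = "ok" if is_importable(name) else "MISSING"
        print(f"{name}: {status}")
```

Run it with the virtual environment activated; a `MISSING` result usually means the `pip install` step was executed outside the `sd` environment.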

python run_txt2img_axe_infer.py

Running result:

(sd) m5stack@raspberrypi:~/rsp/lcm-lora-sdv1-5$ python run_txt2img_axe_infer.py

[INFO] Available providers: ['AXCLRTExecutionProvider']

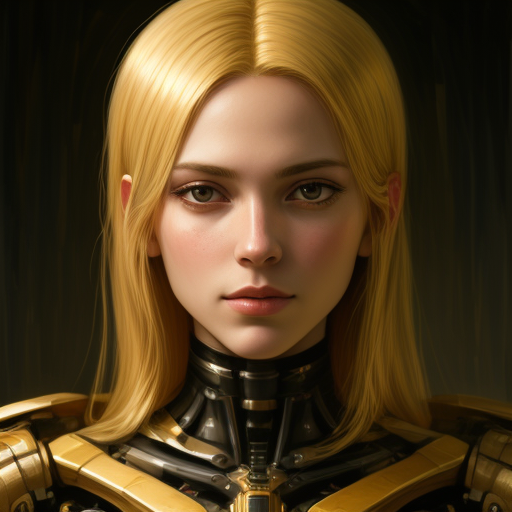

prompt: Self-portrait oil painting, a beautiful cyborg with golden hair, 8k

text_tokenizer: ./models/tokenizer

text_encoder: ./models/text_encoder

unet_model: ./models/unet.axmodel

vae_decoder_model: ./models/vae_decoder.axmodel

time_input: ./models/time_input_txt2img.npy

save_dir: ./txt2img_output_axe.png

[INFO] Using provider: AXCLRTExecutionProvider

[INFO] SOC Name: AX650N

[INFO] VNPU type: VNPUType.DISABLED

[INFO] Compiler version: 3.4 9215b7e5

text encoder take 4466.2ms

[INFO] Using provider: AXCLRTExecutionProvider

[INFO] SOC Name: AX650N

[INFO] VNPU type: VNPUType.DISABLED

[INFO] Compiler version: 3.3 972f38ca

[INFO] Using provider: AXCLRTExecutionProvider

[INFO] SOC Name: AX650N

[INFO] VNPU type: VNPUType.DISABLED

[INFO] Compiler version: 3.3 972f38ca

load models take 22582.9ms

unet once take 433.6ms

unet once take 433.5ms

unet once take 433.3ms

unet once take 433.5ms

unet loop take 1736.7ms

vae inference take 914.2ms

save image take 254.3ms
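The timing lines in this log can be extracted and cross-checked with a few lines of Python. The sketch below assumes the `<stage> take <number>ms` format seen above; `parse_timings` is a hypothetical helper, and the sample text is copied from the log.

```python
import re

# Matches lines such as "unet once take 433.6ms" and captures stage name and time.
TIMING_RE = re.compile(r"^(?P<stage>.+?) take (?P<ms>\d+(?:\.\d+)?)ms$")


def parse_timings(log_text):
    """Return (stage, milliseconds) pairs in the order they appear in the log."""
    timings = []
    for line in log_text.splitlines():
        match = TIMING_RE.match(line.strip())
        if match:
            timings.append((match.group("stage"), float(match.group("ms"))))
    return timings


# Timing lines copied from the text-to-image log above.
LOG = """\
text encoder take 4466.2ms
load models take 22582.9ms
unet once take 433.6ms
unet once take 433.5ms
unet once take 433.3ms
unet once take 433.5ms
unet loop take 1736.7ms
vae inference take 914.2ms
save image take 254.3ms
"""

timings = parse_timings(LOG)
unet_steps = [ms for stage, ms in timings if stage == "unet once"]
unet_loop = dict(timings)["unet loop"]

print(f"denoising steps: {len(unet_steps)}")
print(f"sum of steps:    {sum(unet_steps):.1f} ms")
print(f"loop overhead:   {unet_loop - sum(unet_steps):.1f} ms")
```

For this log the script reports four denoising steps totalling 1733.9 ms, about 2.8 ms less than the reported loop time of 1736.7 ms.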

- Image-to-Image

python run_img2img_axe_infer.py

Running result:

(sd) m5stack@raspberrypi:~/rsp/lcm-lora-sdv1-5$ python run_txt2img_axe_infer.py

[INFO] Available providers: ['AXCLRTExecutionProvider']

prompt: Self-portrait oil painting, a beautiful cyborg with golden hair, 8k

text_tokenizer: ./models/tokenizer

text_encoder: ./models/text_encoder

unet_model: ./models/unet.axmodel

vae_decoder_model: ./models/vae_decoder.axmodel

time_input: ./models/time_input_txt2img.npy

save_dir: ./txt2img_output_axe.png

[INFO] Using provider: AXCLRTExecutionProvider

[INFO] SOC Name: AX650N

[INFO] VNPU type: VNPUType.DISABLED

[INFO] Compiler version: 3.4 9215b7e5

text encoder take 2962.1ms

[INFO] Using provider: AXCLRTExecutionProvider

[INFO] SOC Name: AX650N

[INFO] VNPU type: VNPUType.DISABLED

[INFO] Compiler version: 3.3 972f38ca

[INFO] Using provider: AXCLRTExecutionProvider

[INFO] SOC Name: AX650N

[INFO] VNPU type: VNPUType.DISABLED

[INFO] Compiler version: 3.3 972f38ca

load models take 14483.6ms

unet once take 433.6ms

unet once take 433.3ms

unet once take 433.2ms

unet once take 433.4ms

unet loop take 1736.4ms

vae inference take 914.0ms

save image take 233.2ms
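To see which stages vary between the two logged runs, the per-stage timings can be placed side by side. This is a small sketch with the values copied from the two logs above; the dictionary names and the `stage_deltas` helper are hypothetical.

```python
# Stage timings in milliseconds, copied from the first and second logs above.
RUN_1 = {
    "text encoder": 4466.2,
    "load models": 22582.9,
    "unet loop": 1736.7,
    "vae inference": 914.2,
    "save image": 254.3,
}
RUN_2 = {
    "text encoder": 2962.1,
    "load models": 14483.6,
    "unet loop": 1736.4,
    "vae inference": 914.0,
    "save image": 233.2,
}


def stage_deltas(first, second):
    """Return second minus first for every stage, rounded to 0.1 ms."""
    return {stage: round(second[stage] - first[stage], 1) for stage in first}


deltas = stage_deltas(RUN_1, RUN_2)
print(f"{'stage':<14}{'run 1':>10}{'run 2':>10}{'delta':>10}")
for stage, delta in deltas.items():
    print(f"{stage:<14}{RUN_1[stage]:>10.1f}{RUN_2[stage]:>10.1f}{delta:>+10.1f}")
```

In these two logs the UNet loop and VAE inference differ by less than half a millisecond, while text encoding and model loading differ by roughly 1.5 s and 8.1 s respectively.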